AI vs. Everything we care about

Two reports capture stark attitudes toward AI's impact on society, culture and the economy. Time to dive into some AI summer reports, the human kind. 🚨

I never intended to become an AI report sleuth 🦎, but unbridled curiosity takes you to some funny places. This week I’ve been reading a new batch of AI studies a bit off the beaten track.

🏃 Research from the Seismic Foundation is a large-scale effort to understand how ordinary people view AI risks. Download the entire report.

🗽 The other report that caught my attention is by The Autonomy Institute. Download the entire report.

Meanwhile, more than 40 researchers from rival AI labs, including OpenAI, Google DeepMind, Anthropic and Meta, co-authored a new paper arguing that our current ability to observe an AI model’s reasoning, via step-by-step internal monologues written in human language, could soon vanish (see video explanation).

With the emergence of Amazon Kiro and Reflection AI’s Asimov agent, this State of AI Code Generation Survey report by Stacklok is worth checking out.

AI risks to ordinary people, what those people care about, and how AI risk shows up in U.S. public markets are things I think about nearly every day as I cover AI and explore the space from multiple angles.

I’m going to mix insights and infographics from the two reports because I found them so stimulating.

This might interest you if you take AI risk seriously and want a view of how people on the street see AI and its impact.

How People see AI’s Impact 🌱

If you care about the future as it relates to AI, you might want to go over this report with your teenagers. 🤯

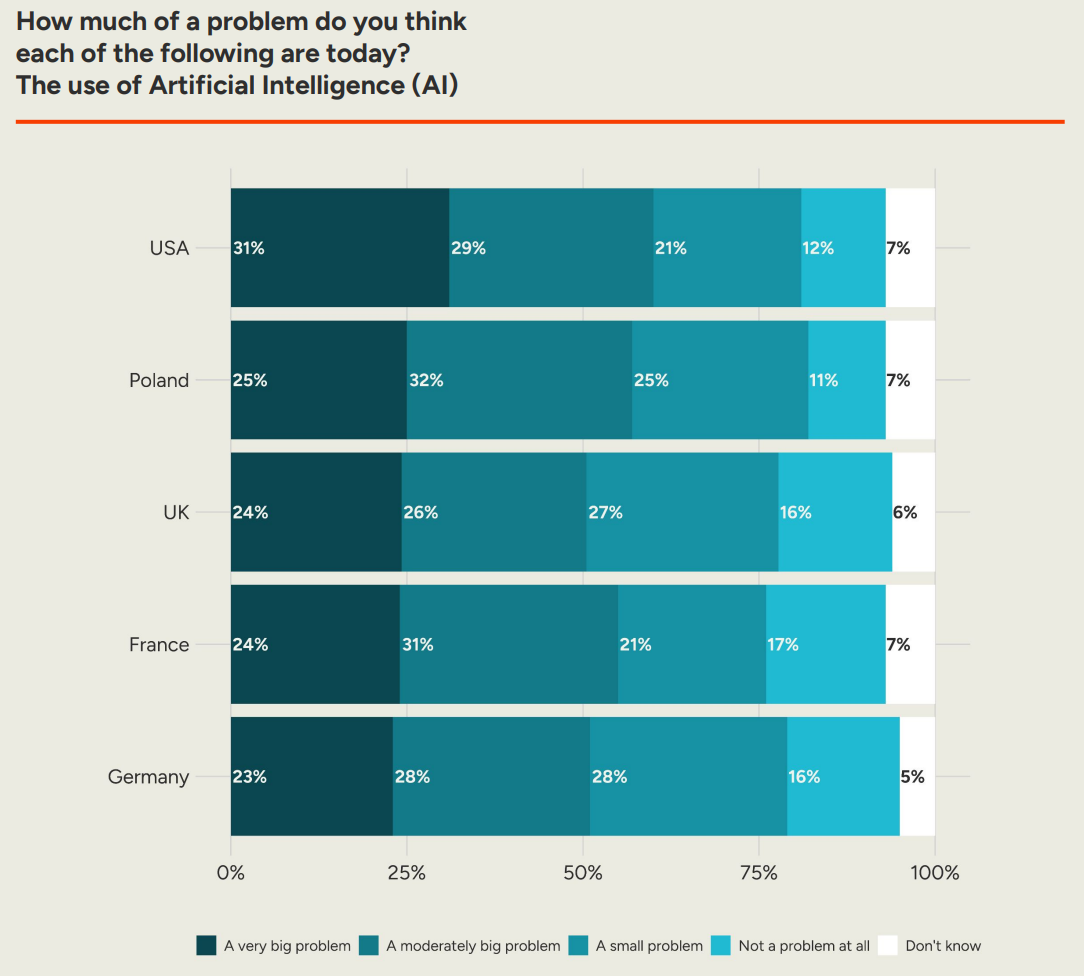

This debrief will mostly focus on AI risks to ordinary people (the survey skews toward Europe).

It’s one of the best depictions I’ve ever seen of how ordinary citizens view AI and how sentiment toward Generative AI might be changing.

I have no affiliation with either organization, the Seismic Foundation or The Autonomy Institute, but I feel AI risk isn’t being covered in the media with proper journalistic rigor. The hype is drowning out how people on the street actually feel about AI. That’s not just unfortunate; it gives a skewed picture of citizens’ sentiment toward AI, which differs a lot from what you might see on social media or read in the press.

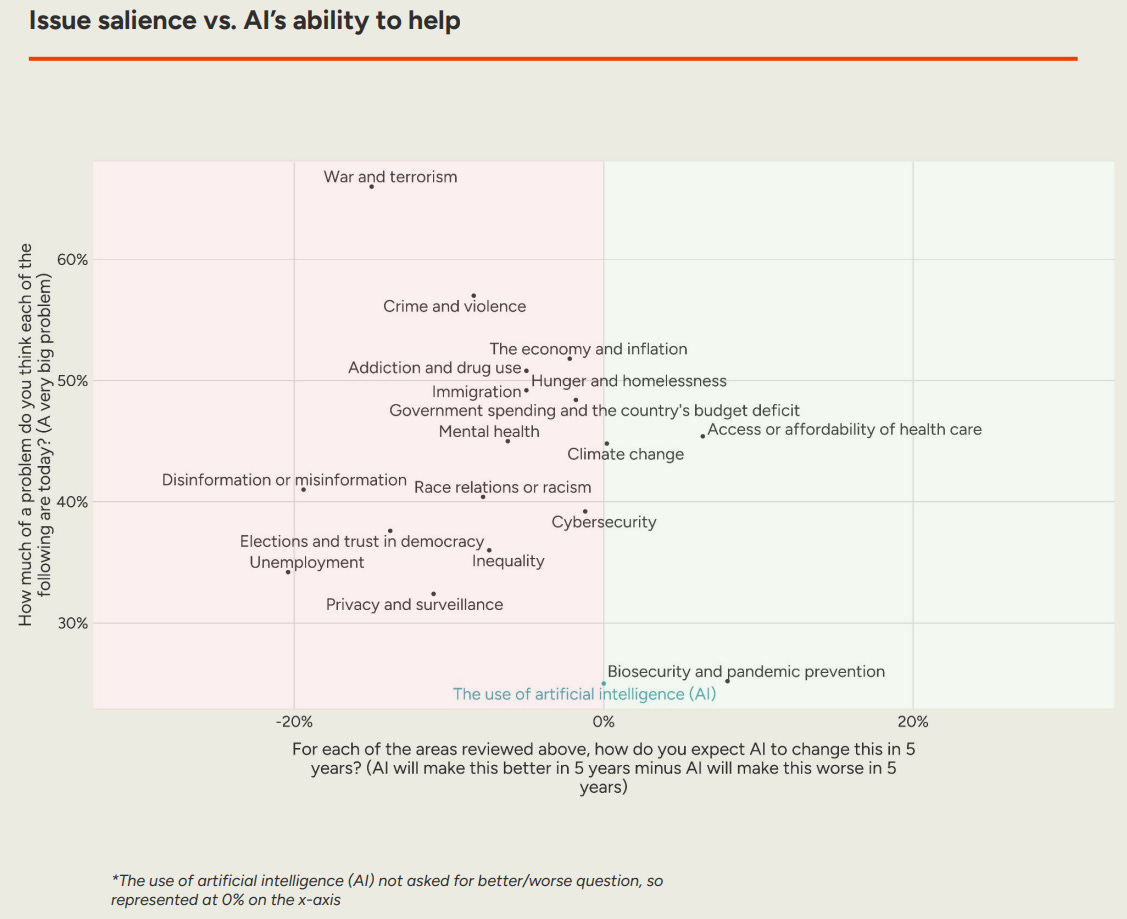

So to put it plainly, this huge new study finds: people think AI will worsen *almost everything they care about*.

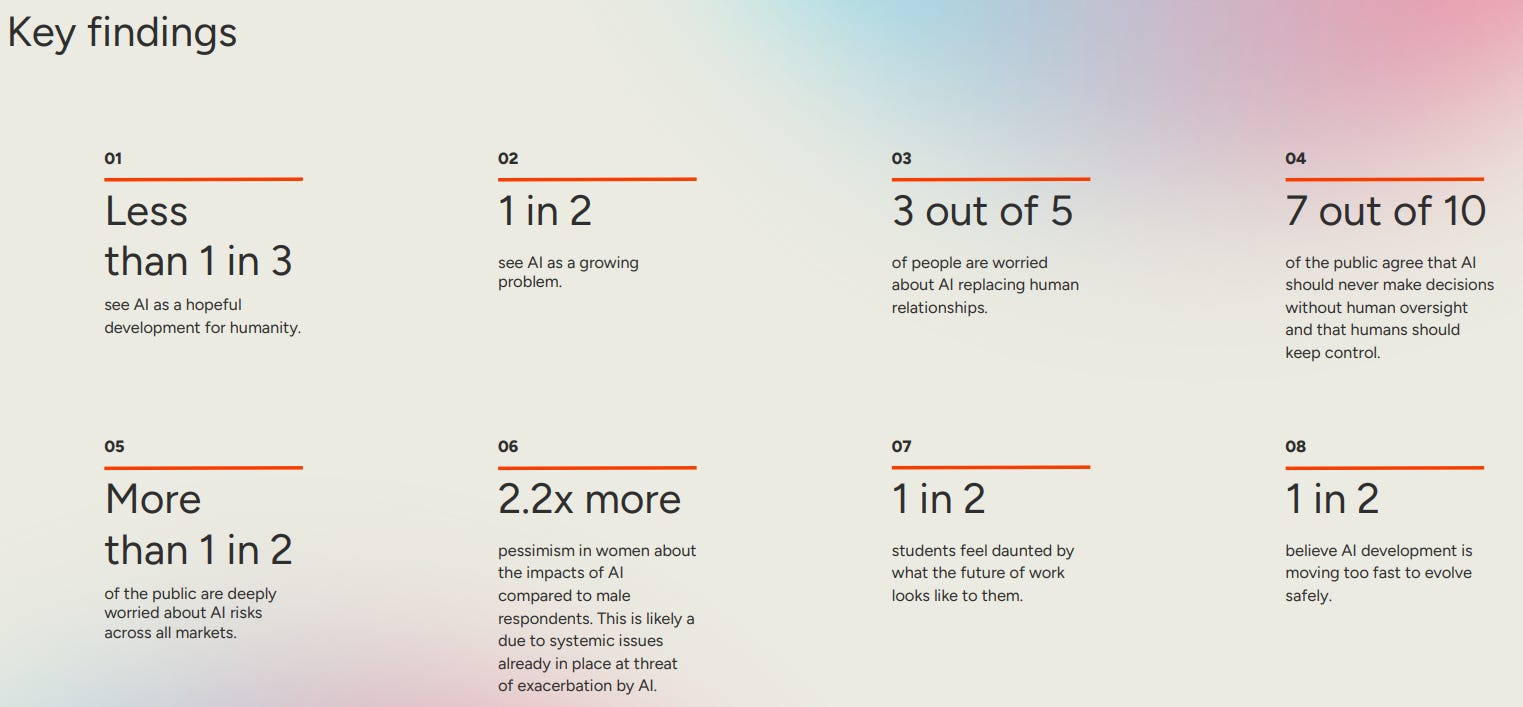

Here are some of the main findings of this survey of 10,000 people across the US and Europe:

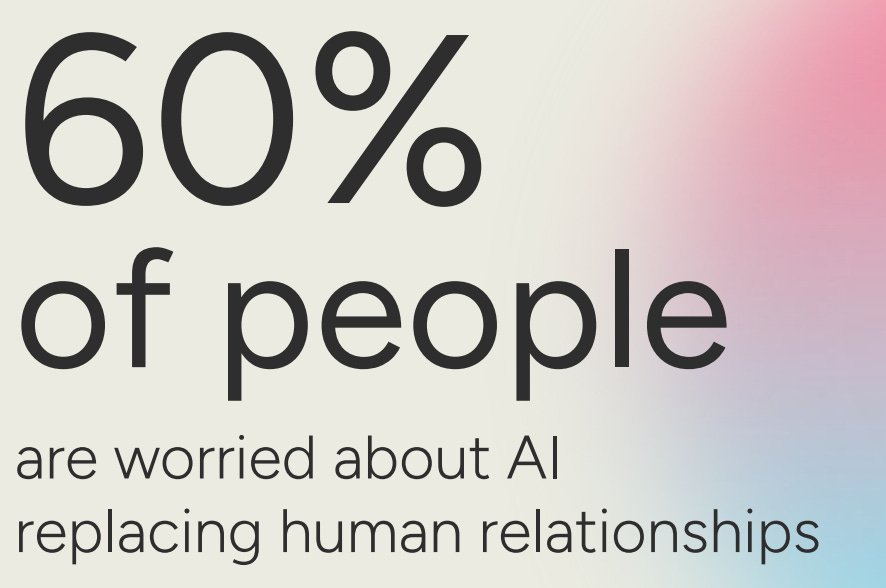

⚫ 60% worry AI will replace human relationships 🤷♂️

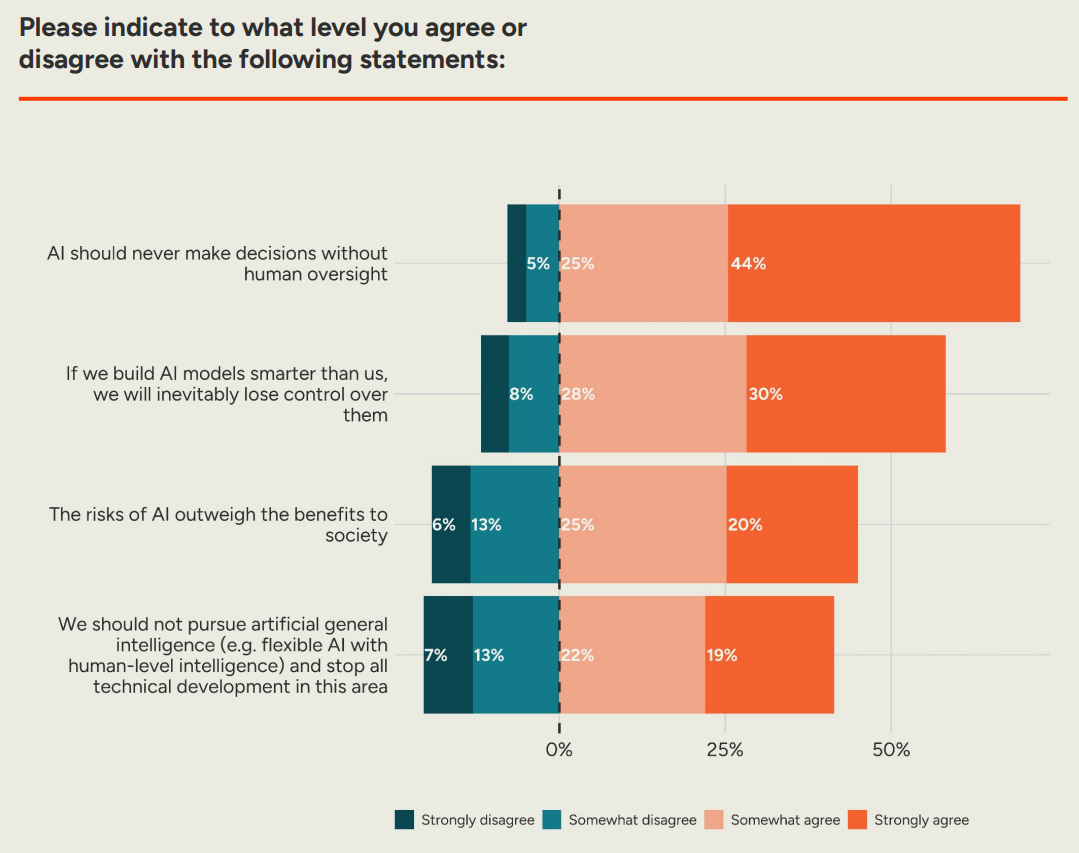

⚫ 70% say AI should never make decisions without human oversight ⚖️

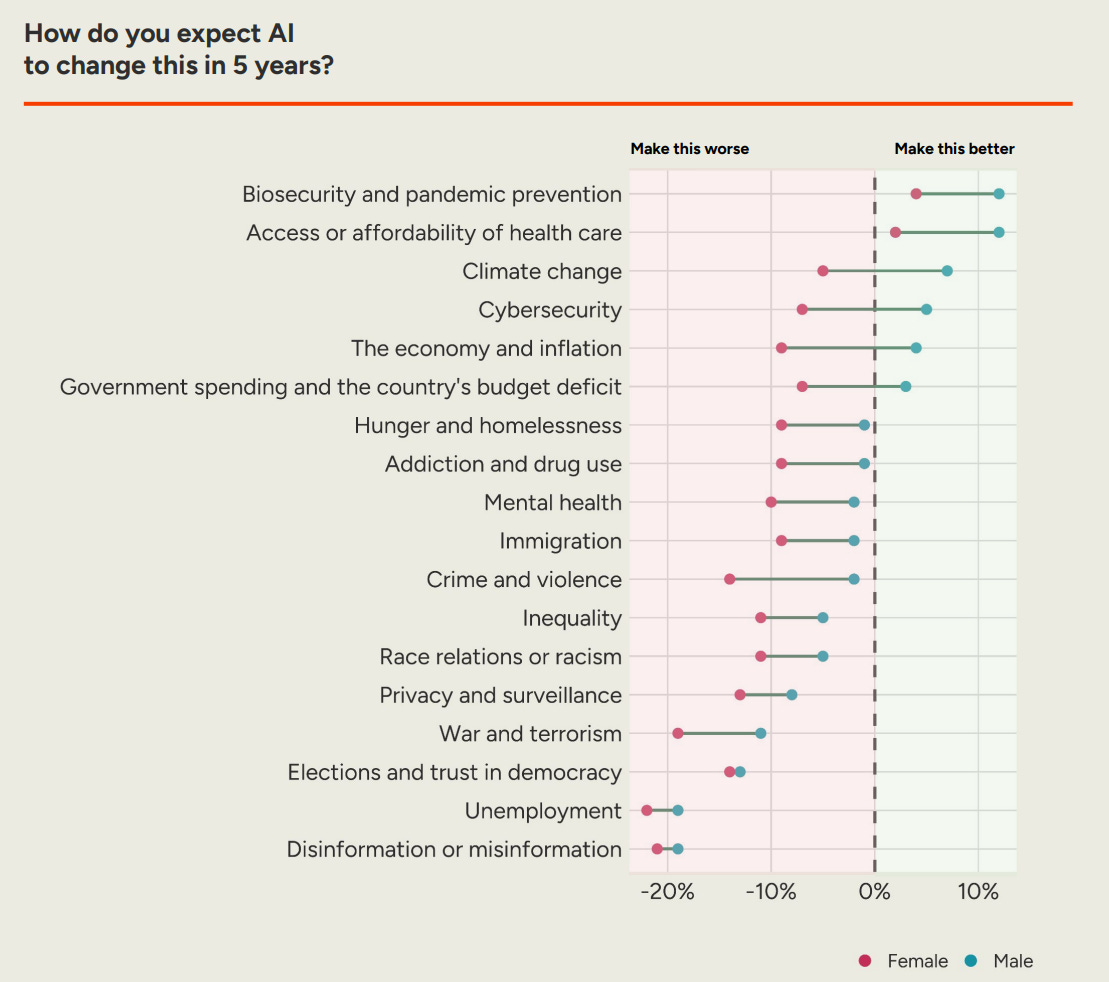

⚫ Women are more than twice as concerned about AI as men 🙋🏻♀️

⚫ Half think AI development is moving too fast to remain safe 🚨

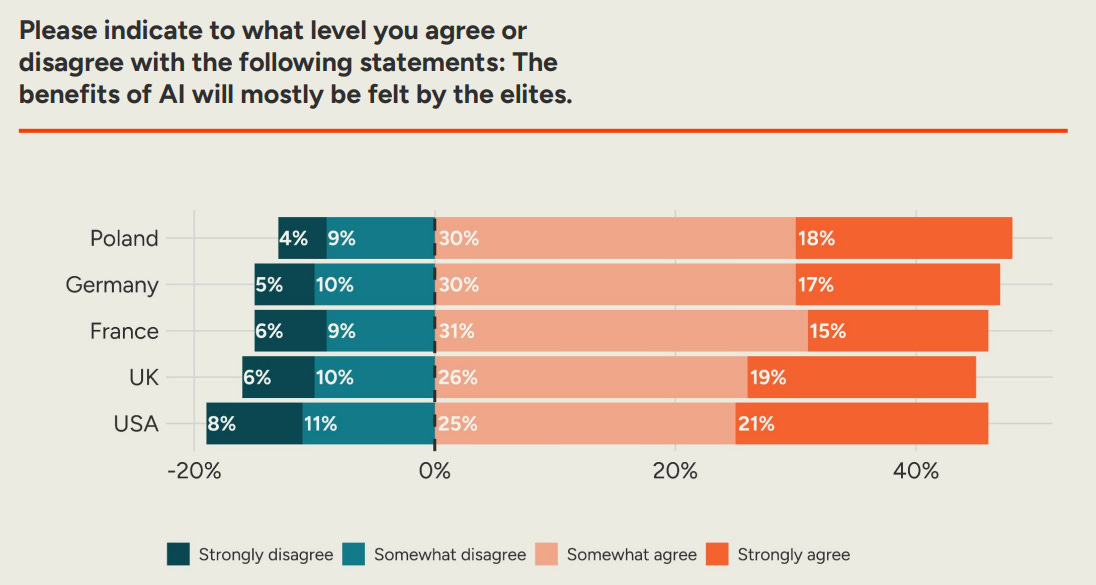

⚫ And nearly half believe its benefits will mostly go to the wealthy 💰

A European view of AI’s Impact on Human Well-Being and Humanity

What I like about the study is that it’s also very European: it polled 10,000 people across the U.S., U.K., France, Germany, and Poland to understand how AI fits into their broader hopes and fears for the future.

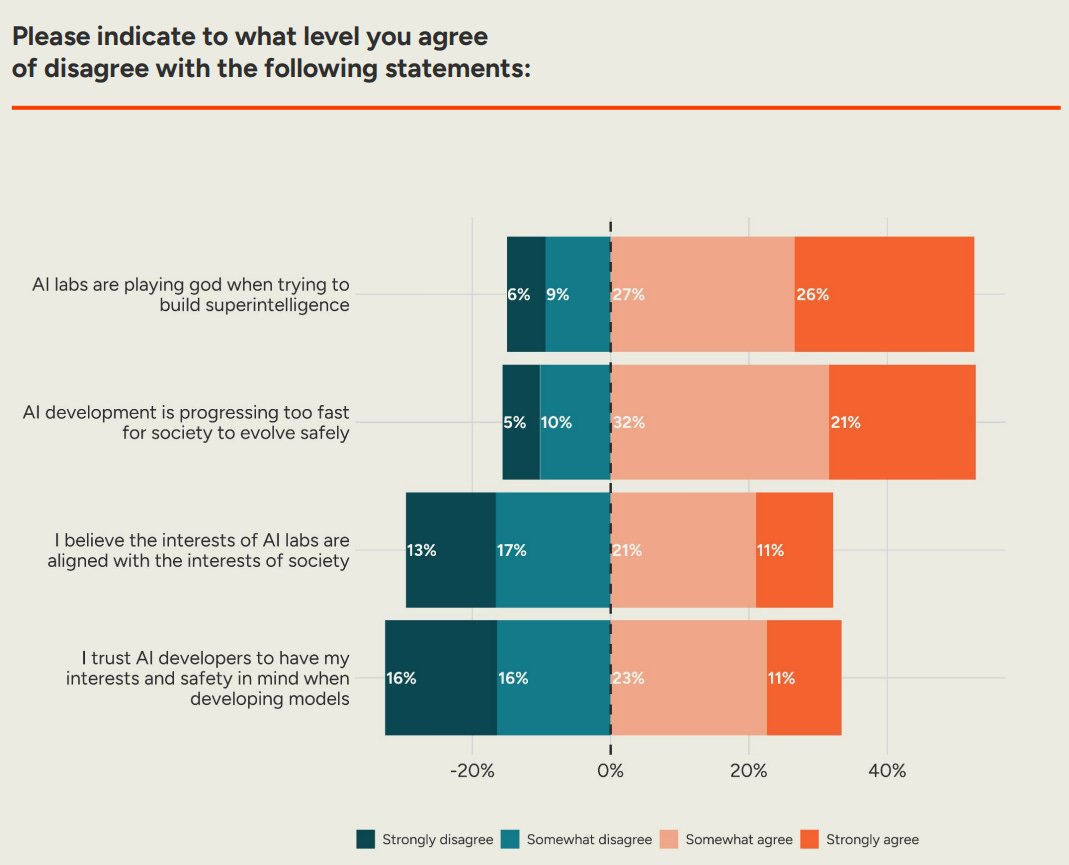

By a 3-to-1 margin, people want more regulation, not less. And they deeply distrust the big AI labs, which they see as 'playing God' with little regard for public opinion or the public good.

“From relationships and mental health to jobs, democracy, and inequality: AI is way too important to be left to Silicon Valley.”

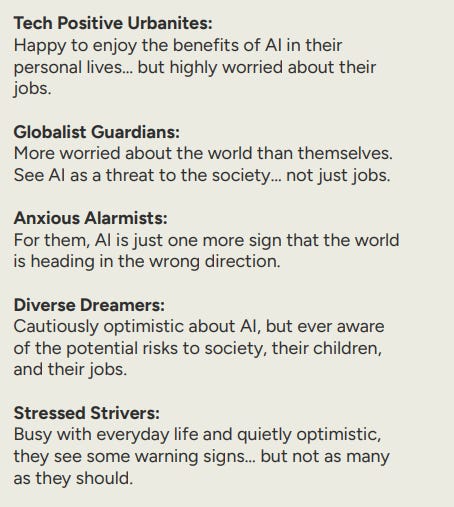

5 Social Groups

The researchers identified 5 social groups that differ in how they see and respond to AI.

Tech Positive Urbanites: Happy to enjoy the benefits of AI in their personal lives… but highly worried about their jobs.

Globalist Guardians: More worried about the world than themselves. See AI as a threat to society… not just jobs.

Anxious Alarmists: For them, AI is just one more sign that the world is heading in the wrong direction.

Diverse Dreamers: Cautiously optimistic about AI, but ever aware of the potential risks to society, their children, and their jobs.

Stressed Strivers: Busy with everyday life and quietly optimistic, they see some warning signs… but not as many as they should.

Which sounds most like you?

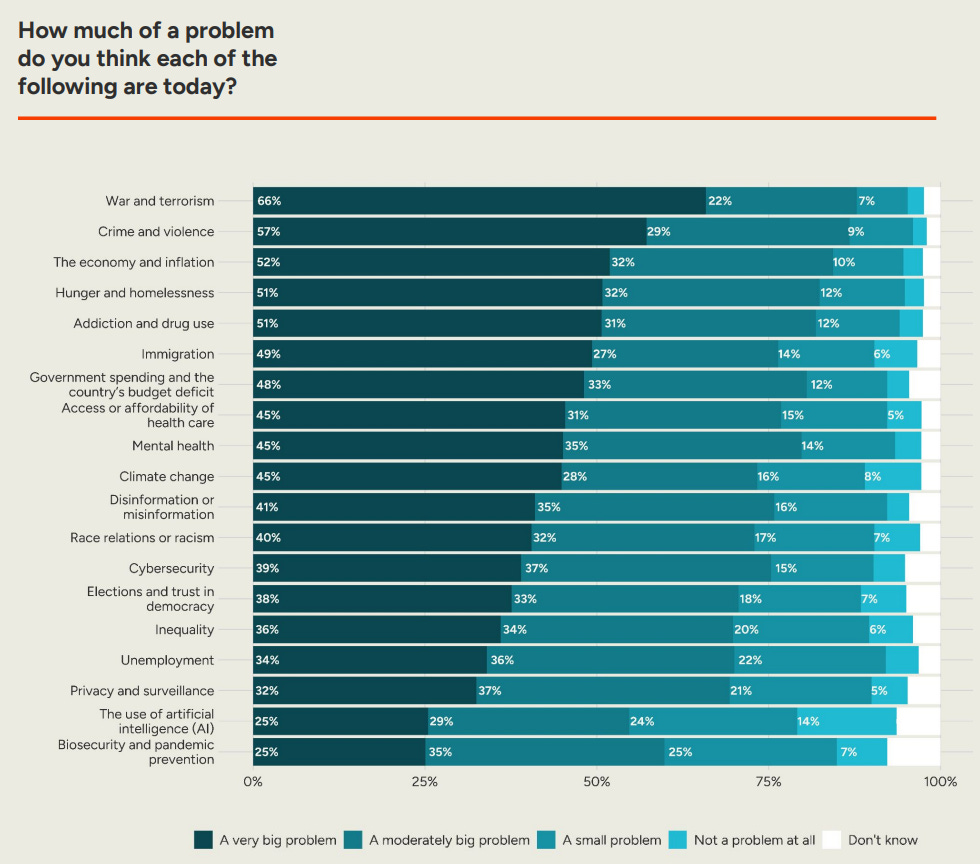

People continue to rank AI low on their list of overall concerns.

However, in some parts of the world, it feels like we’re nearing a social tipping point: how long until the backlash against Big AI begins?

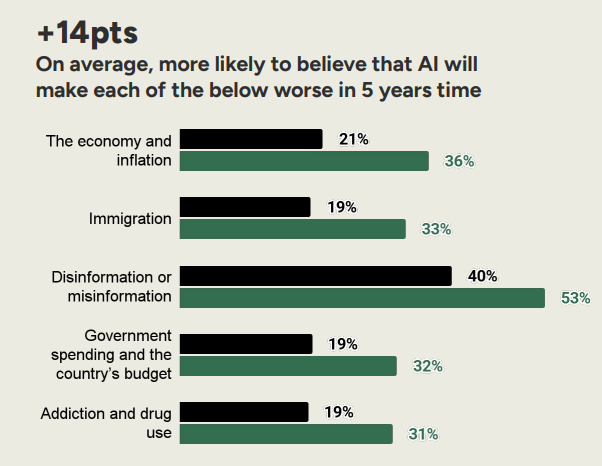

Overwhelmingly, over the near term, people think AI will worsen almost everything they care about.

We live in a world full of urgent concerns: war, climate change, unemployment. More people see AI’s negative impacts outweighing its positive ones.

A Turning Point in public sentiment towards AI is consolidating: a new consensus

Previous polls have shown general anxiety about AI, though most of us still rank it low among our social priorities. But that’s only part of the story. The report’s findings show that we already care about AI; we just don’t always realise it. A deeper public understanding is emerging. We’re starting to feel how AI might affect our lives.

From relationships and mental health to jobs, democracy, and inequality, people (especially in Europe) are becoming aware of how AI might impact their lives going forward.

Meanwhile AI Risks in Public Markets are Flashing Warning Signals

This report, by London-based Sean Greaves, was published on July 17th, 2025.

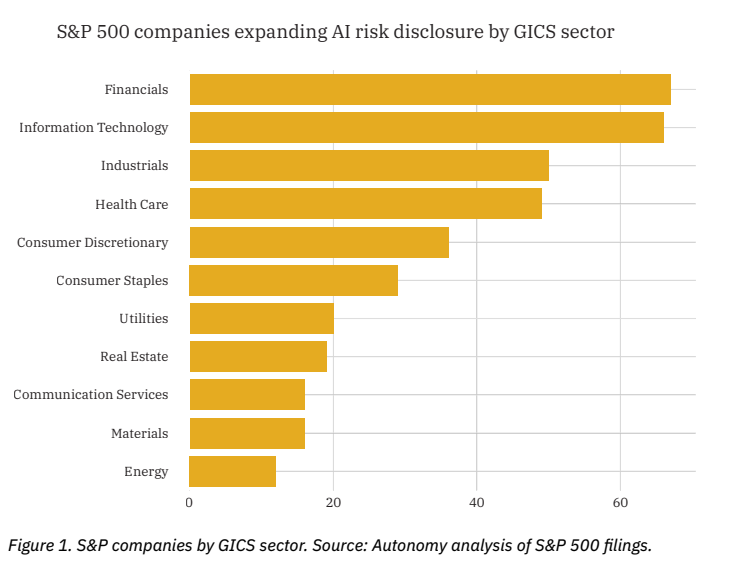

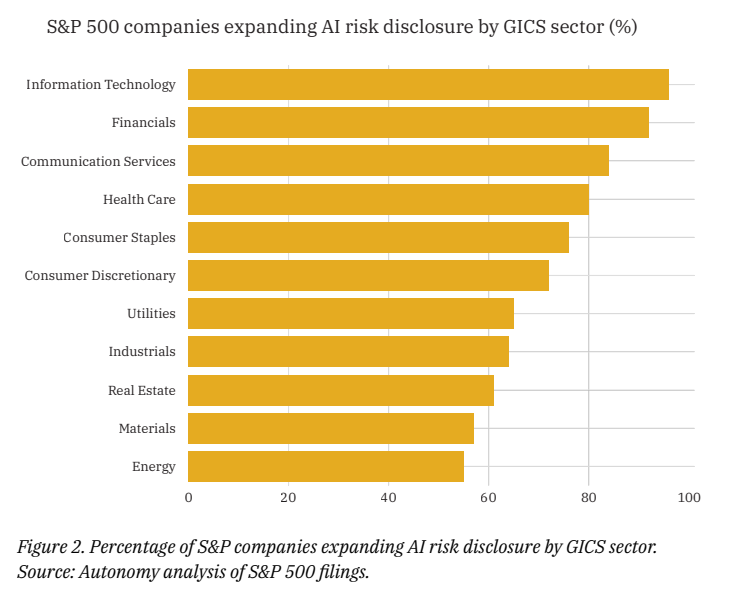

1 in 3 companies (168 total) have added or expanded upon competitive risk relating to AI.

3 in 4 companies (380 total) have added or expanded upon risk concerning AI, indicating widespread concern about AI-related risk.

1 in 3 utilities firms (10 total) have added references to AI’s increasing energy requirements (more on this in a separate article).

The number of companies citing AI bias risk has doubled, from 70 to 146, and the list goes on.
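The "1 in 3" and "3 in 4" framings are easy to sanity-check against the raw counts. The sketch below is a minimal Python check, assuming a denominator of 500 for the S&P 500 (the index has slightly more than 500 listings because some companies have multiple share classes, so treat this as an approximation) and the 31 utilities the report cites in its energy section. The variable names are illustrative and not taken from the report.

```python
# Denominators: 500 is an approximation for the S&P 500 (an assumption);
# 31 utilities is the figure the report itself gives in its energy section.
SP500_SIZE = 500
SP500_UTILITIES = 31


def share(count: int, total: int) -> float:
    """Return count as a percentage of total, rounded to one decimal place."""
    return round(100 * count / total, 1)


ai_competitive_risk = share(168, SP500_SIZE)  # reported as "1 in 3"
any_ai_risk = share(380, SP500_SIZE)          # reported as "3 in 4"
energy_mentions = share(10, SP500_UTILITIES)  # reported as "32%" / "1 in 3"
roi_warnings = share(57, SP500_SIZE)          # reported as "11%"
bias_growth = round(146 / 70, 2)              # 70 -> 146, reported as "doubled"

print(ai_competitive_risk, any_ai_risk, energy_mentions, roi_warnings, bias_growth)
```

Under these assumptions the shares come out at 33.6%, 76.0%, 32.3% and 11.4%, and 146 / 70 is about 2.09, so the report's rounded phrasings hold up.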

Both citizens and companies are worried about the disruption period that AI might usher in.

Women are twice as likely as men to worry about AI. And with cause: a UN report, for example, found that women are three times more likely than men to have their jobs disrupted by AI.

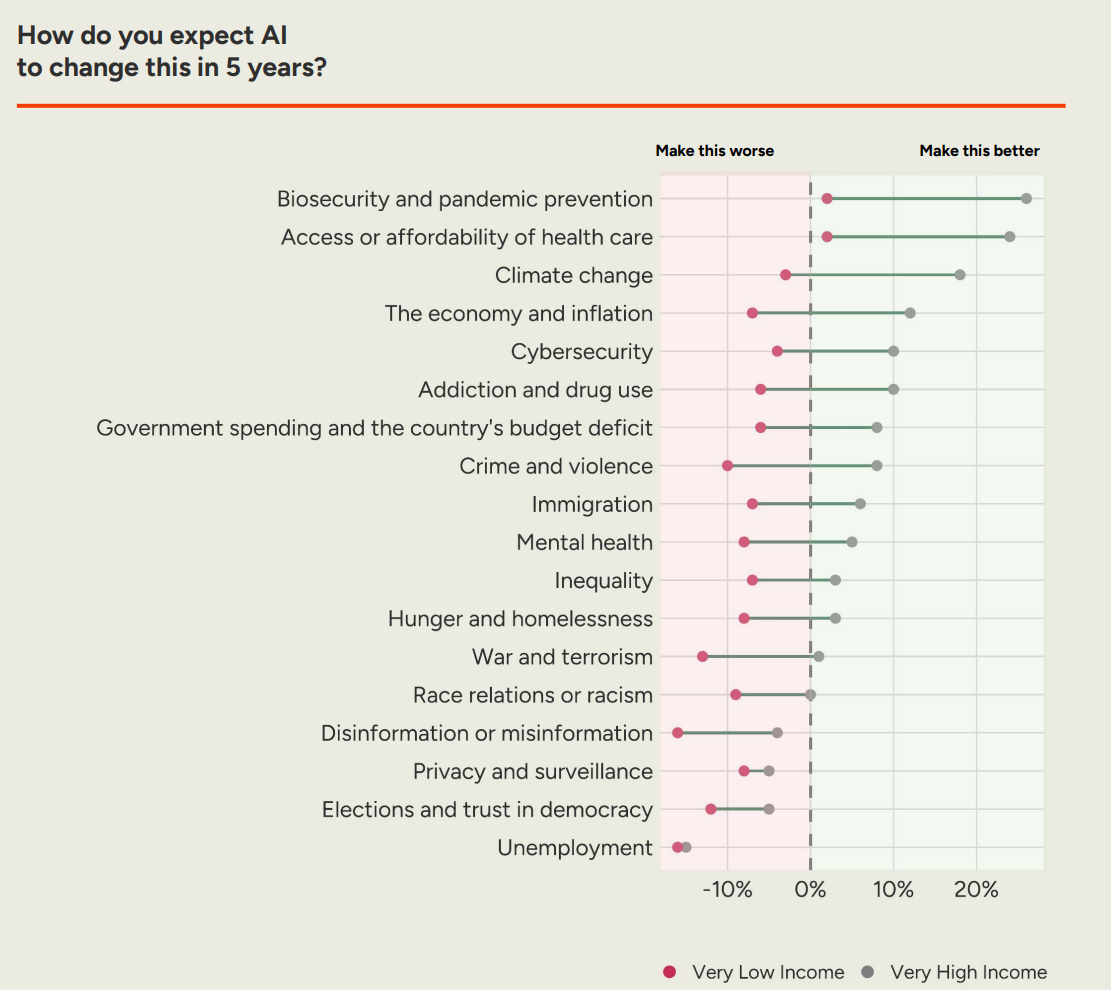

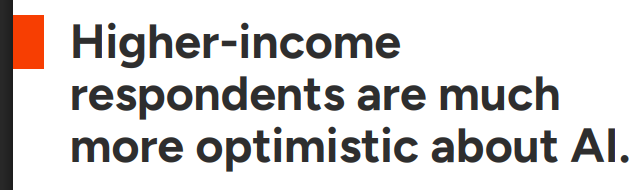

The same divide shows up across income levels, in a clear and predictable pattern:

The higher your income, the more optimistic you are about AI.

The link between AI, wealth inequality and social stability, and how fast it is accelerating globally, is a big problem.

This is an economic issue. In Europe, only 15% of people think there is enough regulation around AI, while 45% of us think there should be more.

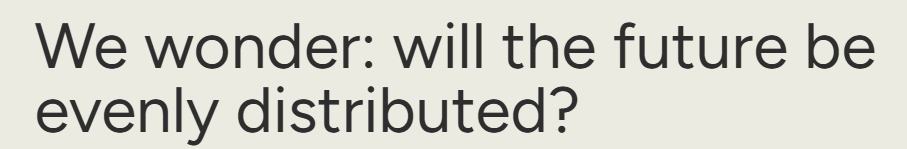

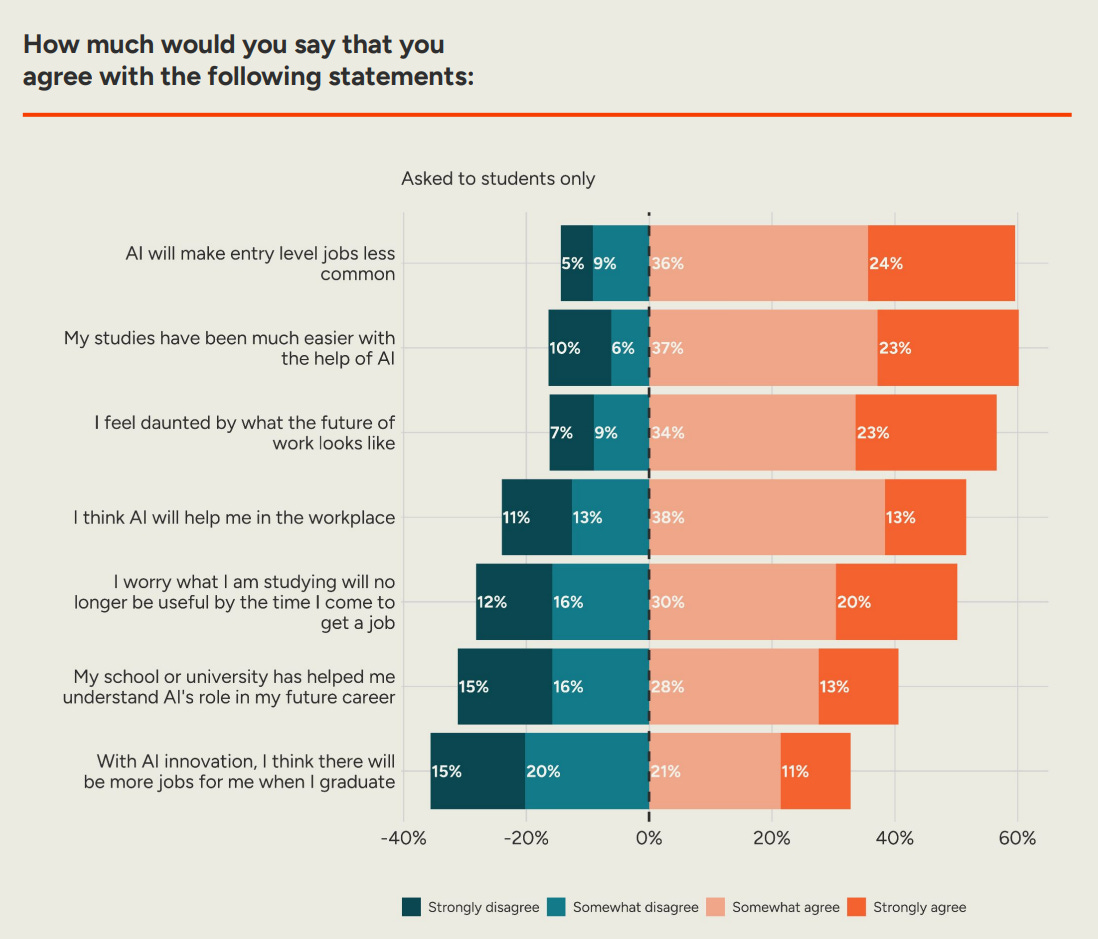

AI is Decreasing the Well-Being of Students

Students and recent graduates especially feel like they’re in a hard place.

In some places, graduating in the Gen AI era means higher youth unemployment and a harder time finding a job.

They're daunted by the future of work, and most feel their schools aren't helping them figure it out. A majority of students worry that what they're studying will no longer be useful by the time they look for a job.

Forget FOMO: with AI, FOTF (fear of the future) is a real concern.

But corporations are increasingly shocked by what an AI-native world means:

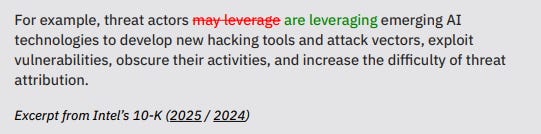

Consider the following sentence from Intel’s latest filing:

“Risks in the marketplace and to humanity intersect and reflect a negative potential outcome of AI’s proliferation in society and business.”

Considering that we are largely talking about how Europeans see American AI, there’s a clear mistrust of American Generative AI products:

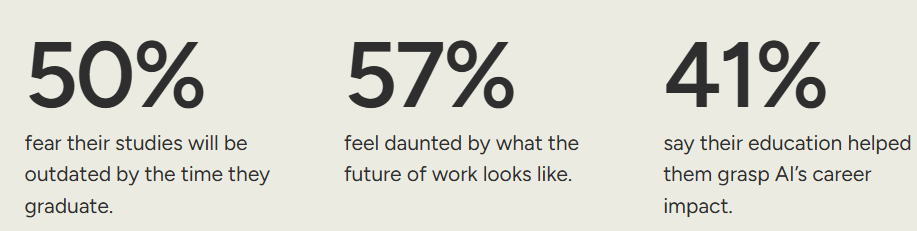

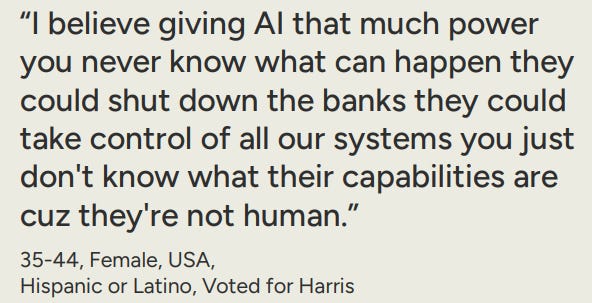

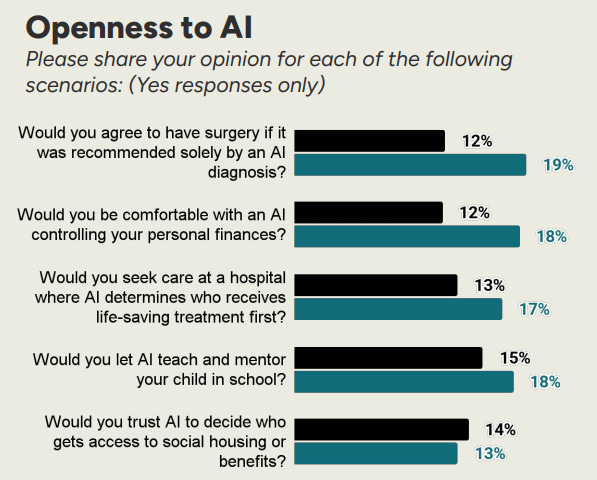

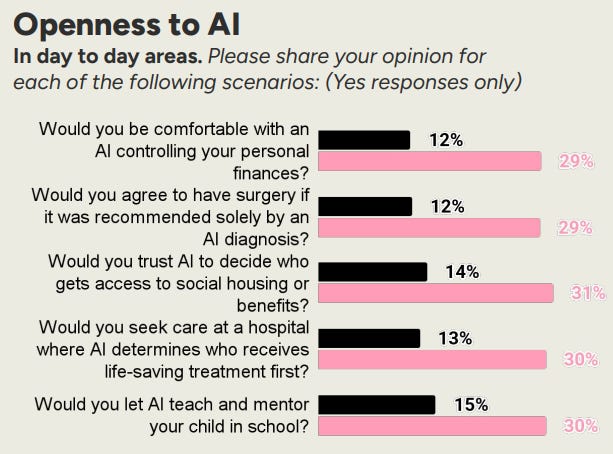

Overwhelmingly, people wouldn't trust an AI to decide who gets welfare support, wouldn't accept healthcare decisions made by AI, and wouldn't put an AI in charge of their finances. People are against AI teachers and AI money managers... but they're more likely to let an AI teach their kids than manage their money.

In general, the wealthier and more developed a country, the more skeptical its people are of AI’s recommendations.

Young people all over the world, already struggling with well-being issues such as housing affordability, inflation versus wage gains and a lack of hope for the future, now have another worry in AI:

Not so much FOMO as FOTF (Fear of the Future)

We’re more anxious about losing love than losing jobs. More people fear AI replacing relationships (60%) than triggering mass unemployment (57%).

People are worried about changes to employment, financial stability and relationship uncertainty caused by AI and the proliferation of Generative AI systems and tools.

2025 might be the first time people surveyed are linking AI to a decline in relationship satisfaction:

People are highly concerned about products like ChatGPT, Character AI and others being used for therapy, companionship and emotional support in place of other people, replacing human relationships.

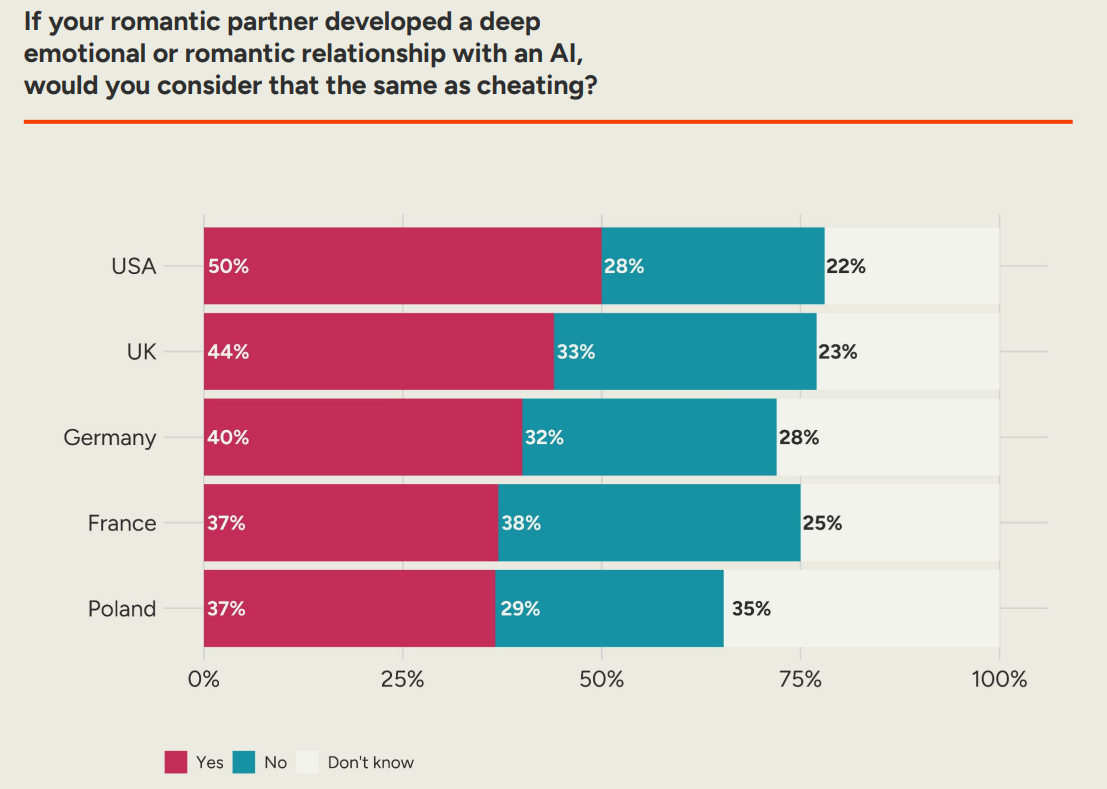

Overwhelmingly, most people are worried about the effects of AI on human relationships, with 60% moderately or extremely worried, and only 10% not worried at all. As in many parts of their report, the authors noticed here how culture shapes how we adopt AI. For example, Americans are twice as likely as the French to consider a romantic relationship with AI cheating.

60% worry AI could replace human relationships

67% of parents are uneasy about their children falling for an AI.

Only 10% aren’t concerned about AI affecting relationships.

Which Industries in the Corporate World are Expanding AI Risk Disclosures?

People and companies are concerned about AI’s impact on their relationships, business models, margins and even shareholder value. Society is starting to see AI as a risk to what it values most: core institutions like the family, marriage, the opportunity to have children, and the livelihoods of small businesses and even bigger companies.

If that is not existential risk for humanity I do not know what is. 😳

People are Worried about the Internet’s Trust Architecture

Generative AI is proliferating synthetic content that is often used in an untrustworthy way or to “game algorithms”. People are using Gen AI with incentives no longer grounded in trust, good intentions, truth or transparency.

The idea that AI risk doesn’t matter is false, and it is often promoted by media narratives pushed by the PR of the ruling class. Despite widespread worry, public opinion on AI appears neutral, split evenly between optimism and pessimism. But this balance is misleading: views differ sharply across groups, and tensions are rising as AI rapidly expands into every part of life.

AI risk is important and relevant to human well-being and the quality of our lives, our economic well-being and our opportunities. But also our mental health, relationships and how we perceive our place in the world.

46% believe AI’s benefits will mostly go to the elites.

31% say AI gives them hope for humanity’s future.

People are clearly worried AI could be used to strip us of some of our human rights, privacy, free will, independence and autonomy as citizens in modern society:

Download the full report to explore all the details.

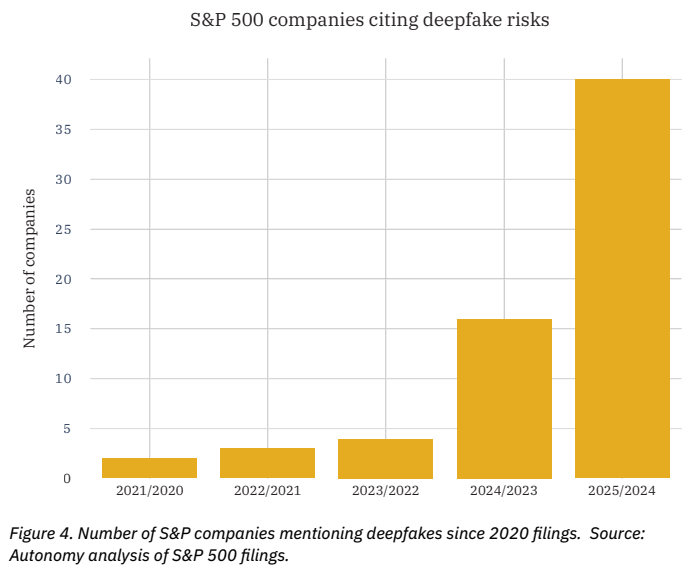

Corporations in the S&P 500 are Expanding their AI Risk Disclosures

Especially in technology, finance, communications and healthcare.

2025 is a Moment on the Razor’s Edge for AI Risk

People are less optimistic about AI than you might imagine:

Less than 1 in 3 people see AI as a hopeful development for humanity.

1 in 2 see AI as a growing problem.

3 out of 5 are worried about AI replacing human relationships. (The survey casts a shadow on the morality and alignment of American AI products.)

People fear the future, and it’s adaptive to do so, but how do you reckon with the implications of AI in a more complex world?

People are starting to understand how AI worsens some of the biggest problems in society today.

What if AI risk weren’t hypothetical, but economic, social and human: damaging to our mental health, intimacy and ability to form meaningful relationships?

What if AI amplified social upheaval, civil unrest and the conditions in which crime and poverty thrive, to the detriment of the working middle class? The public fears that some of the biggest problems, such as war and terrorism, and crime and violence, could get worse by 15% and 8%, respectively.

People predict a majority of social problems are made worse by AI.

Most people have more to lose than to gain from AI’s continued entrenchment in our lives.

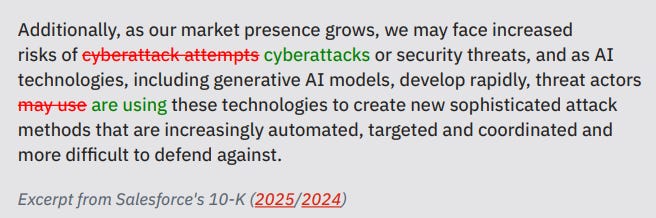

In the corporate world, deepfakes, cybersecurity threats and AI fraud are on the rise:

Deepfake risks are skyrocketing in 2025, and S&P 500 companies’ disclosures reflect that. Generative AI is making regulatory issues around security much worse.

Does AI make you hopeful for the future of humanity?

Public sentiment around AI appears to be declining in most Western countries. If you ask enough people, we as a civilization are on the fence about it:

If AI hurts the middle class, the future of jobs and human relationships, how do you suppose we will feel about it in the 2030s? Will we eventually even remember how things were before AI?

AI as a Social Problem is Rising

(Canadians are even more pessimistic about AI than Americans).

Very few members of society are undecided about whether AI is a problem.

Now imagine you are Gen Z or an older member of Gen Alpha, graduating into a world with fewer good jobs. How are you likely to feel about it?

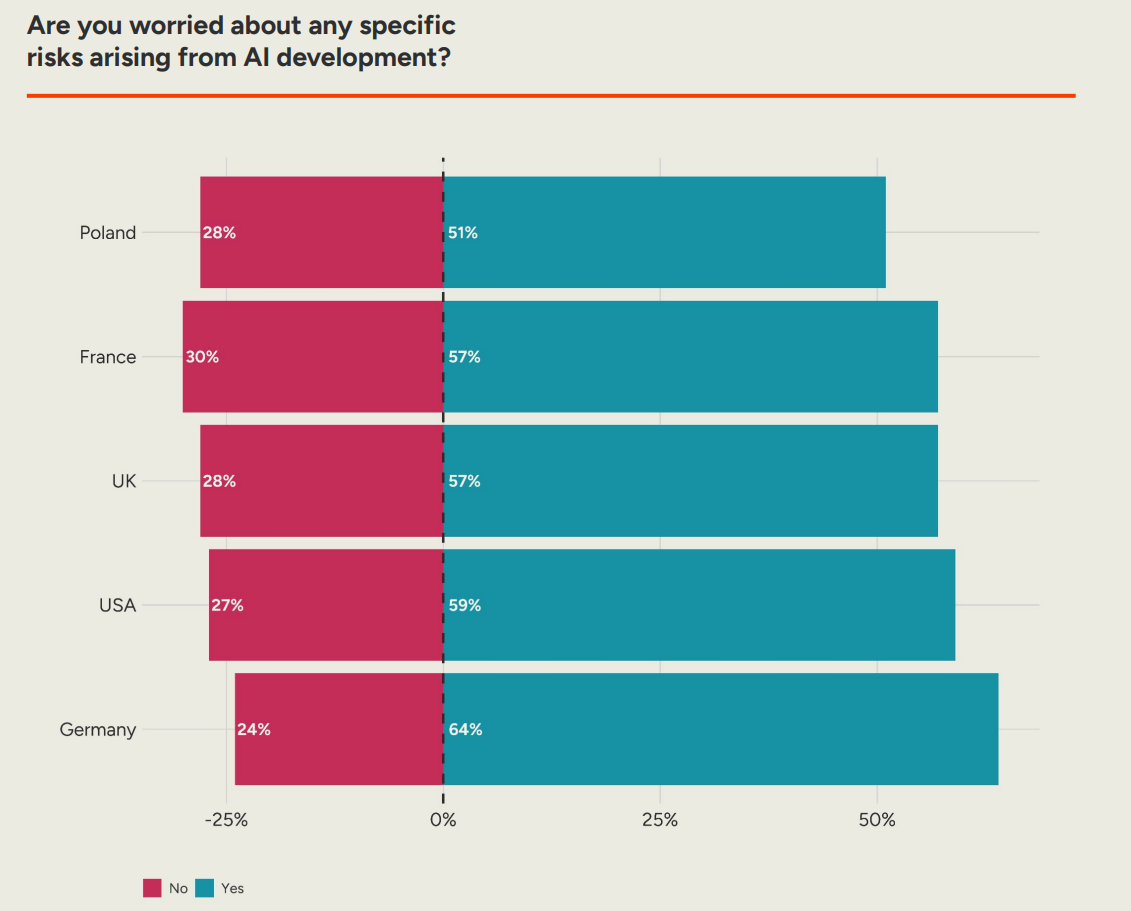

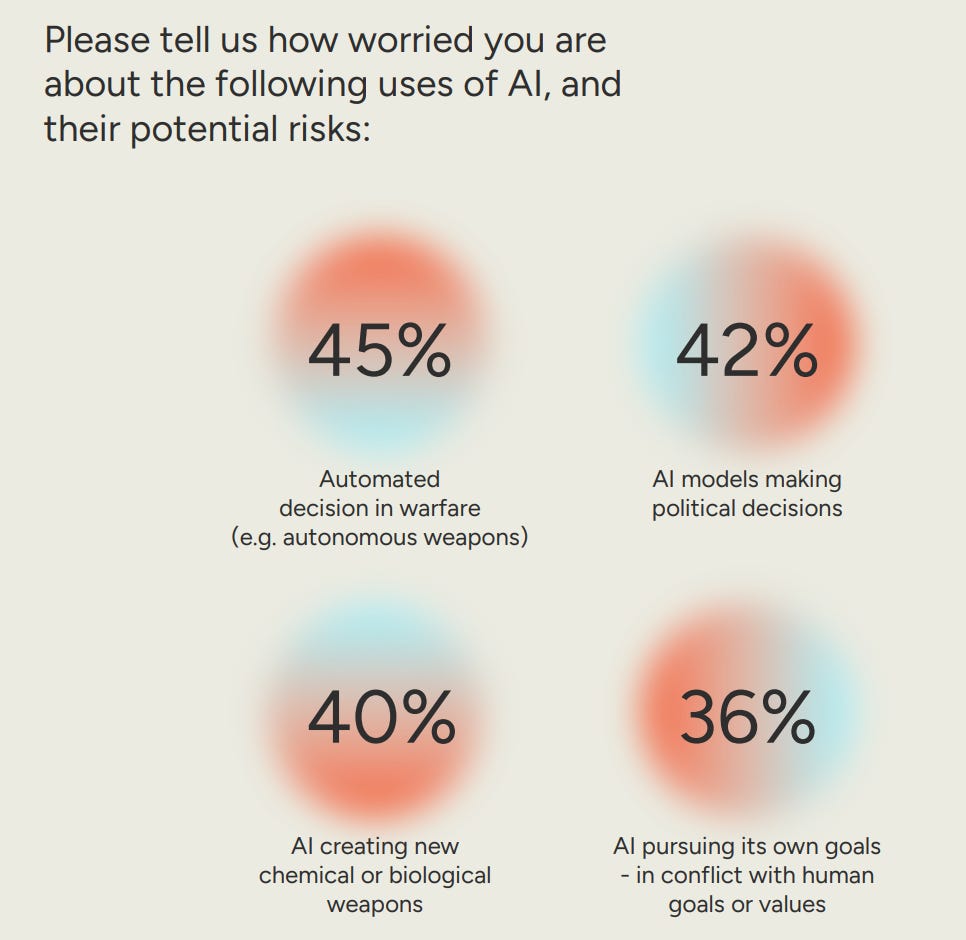

Most people told the researchers they are worried about specific applications of AI technology.

Fears about AI are not gender-neutral; women have a substantially more negative view of AI than men.

Women seem more concerned with AI risks not just to people and their circle but to the planet as a whole.

Women understand that the risks outweigh the benefits, especially for those who are most vulnerable.

AI probably makes the following worse:

Cybersecurity

Biosecurity

Climate Change

Economic equality, inflation, housing affordability

Government debt

Poverty, hunger, homelessness and basic needs

Mental health, family and interpersonal relationships

The ability to raise or have children

Immigration

Social order, civil unrest and crime and violence

Racial and wealth inequality

Privacy, surveillance and the protection of basic human rights

War, terrorism and machine-led deaths

Democracy, trust in institutions, elections

Fraud, phishing, deepfakes and malevolent actors using AI for profit

Propaganda, illegal lobbying, disinformation and misinformation from state and corporate actors

Unemployment, re-skilling, job hopping, economic survival

It’s difficult to see how women are wrong about any of these concerns. The people with the least financial incentive on a topic tend to give the most honest answers.

AI Could Decimate Fertility Rates and the Have-Nots

Across our global sample, lower-income respondents expressed significantly higher levels of worry than those who were better-off.

The middle class could also face downward pressure, as some jobs are disrupted or demand for some kinds of labor noticeably decreases (entry-level software engineering jobs are a case study).

As with the female respondents, this distrust may reflect an appreciation that systemic issues already in place are likely to be exacerbated by AI.

When we examine overall opinions on AI by income level, the pattern is very clear. The very lowest income groups expect AI to have a negative effect on their children, the world, minorities and mental health. Meanwhile, higher-income groups foresee improvement, especially for their children. The divergence there is especially wide.

AI will usher in more class division and more economic inequality, widening the gulf between the haves and the have-nots (just like Bitcoin did; these act like Ponzi schemes).

“This underscores fears that AI could intensify inequality and disproportionately impact those already at risk.”

We should never dismiss the fears of the majority, because doing so means balance has been lost in our social systems and civilization will reset. I’m afraid the AI hype cycle is putting short-term benefits ahead of long-term social issues. America’s venture capital system and Silicon Valley appear to be at odds, morally and ethically, with most citizens of the world. I expect this gap to widen considerably over the 2025 to 2045 period, followed by a very volatile 2050 to 2070 period.

This is a warning to the future.

Almost half of people in our study think that AI benefits will mostly favor those who are already advantaged.

There’s significant emerging popular resentment of the wealthy, with 67% of people saying that the wealthy don’t pay their fair share of taxes, and 65% saying that the selfishness of the wealthy causes many of our problems.

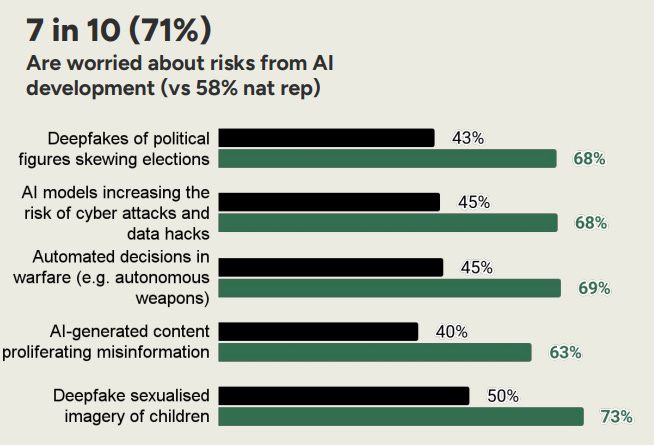

AI Specific Anxiety

Some of the most widely cited concerns about AI involve crime targeted at individuals, especially false information.

Ordinary people are even more worried about the impact on their children and their relationships (FOTF). Young people have more anxiety about a future with AI.

Deepfakes, non-consensual pornography, misinformation and bullying were all cited as being of high concern.

New kinds of crime, digital pollution and blackmail are appearing with the emergence and proliferation of Generative AI.

It’s ironic, given Trump’s drama over the Epstein files, that this relates to the impact of technologies he promotes, like AI: over 1 in 2 people in the sample worried about deepfake sexualised imagery of children.

AI lowers the bar for everything from cyberbullying to revenge porn to various kinds of fraud, blackmail and online harassment.

Scams, crime and surveillance are also a cause of concern directly related to AI.

Catastrophic and Existential Risks are also AI-Related

What we consider existential risks around AI is also changing as people become more educated. In fact, the proportion of people worried that humanity could lose control of AI appears to be decreasing: one study found 2 in 3 people were worried about loss of control as recently as 2023.

Military automation

AI in Government (making decisions and enacting laws)

Biosecurity and the threat of new pandemics

AI self-determinism hostile to human civilization

32% of respondents thought AI would impact them positively in the next year or two, and their hopefulness remained relatively consistent, with 30% thinking this would still be the case after 20 years.

Unfortunately the impact of AI is making more people uncertain. Uncertainty about the future tends to spiral into negativity and worry.

Maybe the biggest surprise of the survey for me is the following:

It’s nearly a tie, but not quite.

It’s a widely held view that relationships are more important than work, and that holds true when we talk about AI. In fact, more people are concerned about AI effects on relationships (60%) than are concerned about AI effects on employment (57%).

“Nearly two out of three people are either moderately or extremely worried that AI could replace human relationships…”

This also surprised me:

The U.S., the primary maker of Character AI-style chatbots, is also the place where emotional entanglement with AI is more readily viewed as a form of infidelity. 😂

Sad things are happening in the real world, though, often with the man of the household going off the deep end with an AI obsession. AI as a reason for divorce or separation is certainly on the rise.

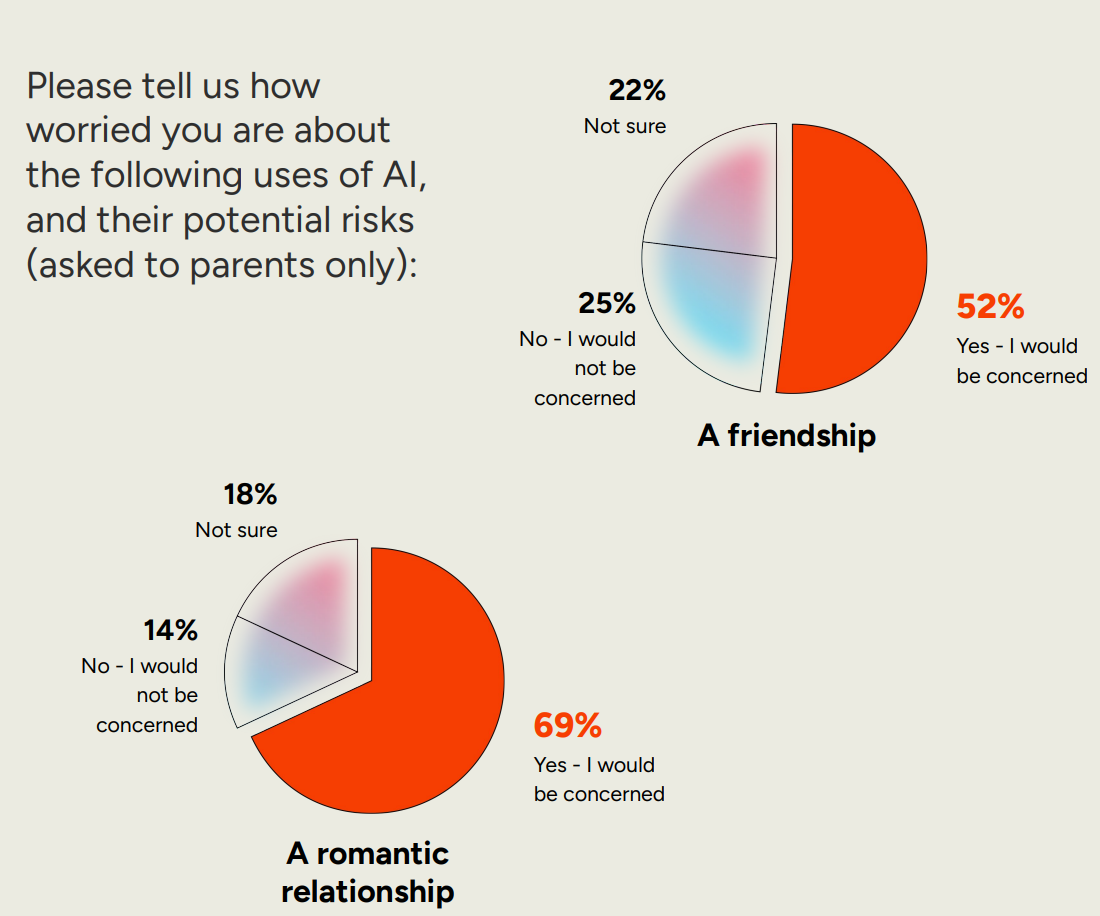

While teachers and peers push kids to become more AI-native for their future career prospects, a majority of parents told the researchers that they were concerned about their children developing any kind of serious relationship with an AI.

Talk about mixed signals.

So having romantic feelings for the thing you “spend the most time with” is taboo.

When it came to a romantic relationship with an AI, over 2 in 3 parents would be concerned.

In 2025, developing romantic feelings for an AI is nearly as risky as being the victim of cyberbullying for a teen. That’s a crazy world to grow up in.

AI is only going to become more persuasive too.

While 3 of 5 students say AI is making studying easier, many feel unprepared for what comes next. (Do we want studies to be easier?)

Half of them report that their schools or universities aren’t helping them navigate the future of work. (Do teachers and professors even understand the future of work?)

4 in 10 students—and over 50% in the UK, Germany, and Poland—worry that what they’re studying today may be irrelevant by the time they enter the job market.

AI will make entry level jobs less common.

AI might both hinder and help me in the workplace and in my career.

With AI innovation, there may be less jobs for me when I graduate. (unsure).

But in America, AI has more control over parts of the government and the Department of Defense than ever before.

Across all markets the public were very clear: on a macro level, 70% globally either agreed or strongly agreed that AI should never make decisions without oversight.

But does that include AI targeting of bombing sites in Gaza? The humans nominally in the loop are already losing accountability.

About that AGI System

4 in 10 people agree that we should not pursue artificial general intelligence and should stop all technical development in this area.

It’s less clear how people will actually remain in control.

This includes healthcare decisions. [Slide 33 of the Report]

Labs like Google, OpenAI, Anthropic, Microsoft and others pretend that they care about the impact of their products on people and society. But do they really?

A majority of people also think the technology is progressing too rapidly to be safe.

Meanwhile, tycoons in America are deciding much about our children’s future for us, including the rules of the world they will work and thrive in.

Too Little AI Regulation?

Only a third of participants agreed that regulations were adequate, with the remaining majority either unconvinced or undecided.

So in effect, your age, economic status and location say a lot about how you will view AI.

Tech-Positive Urbanites

This segment consists of people who are more likely to be highly educated, politically engaged young professionals and parents living in urban areas.

They worry about career disruptions caused by AI.

They are happy with AI in their daily lives.

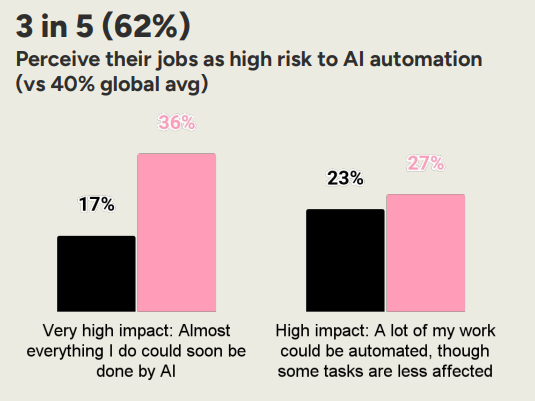

They view their job as high-risk to automation.

This segment, representing 20.2m people extrapolated across all our participating nations, is acutely worried about AI risks, especially through the lens of their own jobs.

Globalist Guardians

Globalist Guardians are generally affluent, skew slightly older, and hold socially progressive globalist values. They are highly engaged with news and current affairs and show higher civic participation.

Actively worry about the future impact of AI.

While they don’t see their jobs as vulnerable, they worry about impact on society as a whole and other countries.

They are the only segment of the population whose worries center on existential risks.

Over half are extremely worried about AI making decisions in warfare (57%) and politics (54%), and half are worried about AI replacing human relationships (49%). (This is my type by the way).

They see AI as likely to worsen existing societal problems and are particularly alarmed about climate change.

They tend to mistrust AI developers, and are the most likely segment (60%) to think there is not currently enough regulation around AI. They fear the loss of the rules-based global order due to AI’s impact.

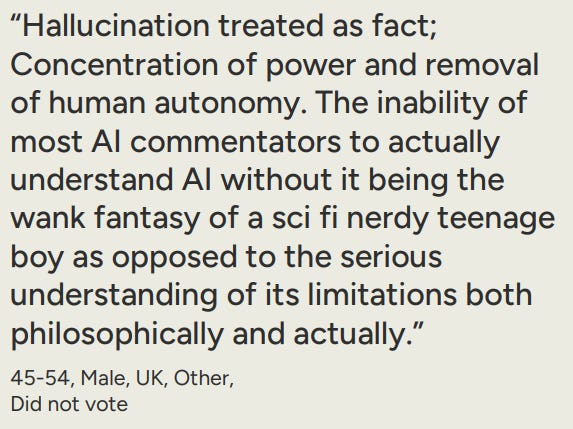

Some of the quotes from this group are especially fascinating and echo things I personally think about a lot:

Anxious Alarmists

These are people who are more worried about AI risks than the full sample, especially through the lens of high-profile concerns like the economy, inflation, immigration, unemployment, and government spending.

Anxious Alarmists also skew slightly older and are the most active news followers. Many AI Newsletter readers may be of this type.

Psychologically, the researchers say, this group is generally skeptical of new developments in the world and often nostalgic about the past.

They are twice as likely to believe AI will very negatively impact them in the long term (28% vs 14%).

This group seems more aware of how AI might affect social issues, governments and national sovereignty, including geopolitical effects on trade and the economy.

This group is afraid of society losing the rule of law and of people losing their autonomy. They may also be AI resistant.

They are more cautious and conservative, and many of their ideas about AI risk are fairly novel and, in an existential sense, accurate:

Do you see yourself in any of these AI Groups?

Income levels, education, country and culture may skew how we view AI and AI risks to the future of humanity.

From the AI risks to Market report:

Risk of AI Disillusionment is Higher than you Think

Despite rapid advances in general AI capabilities, many companies investing heavily in AI struggle to achieve a clear return on investment, with 57 (11% of the S&P 500) explicitly warning that they may never recoup their spending or realise the expected benefits.

Excitement around AI creates pressure to appear innovative, yet quantifying tangible gains remains difficult for many at this stage.

Even companies publicly bullish on AI often strike a more sober tone in their disclosures.

Palantir’s stock (PLTR) is up 102% YTD in 2025, after two decades of struggling even to become profitable.

Diverse Dreamers

Diverse Dreamers, as the name suggests, are more likely to be from ethnic minorities, and are more religious than the general population. They are a truly fascinating grouping (and may be more representative of young people in the Global South, e.g. India, Brazil and Indonesia, if we wanted to generalize this survey to other parts of the world).

They skew younger and more ethnically diverse.

They are almost twice as likely to strongly agree that they “believe the interests of AI labs are aligned with the interests of society” (21% vs 11%).

They are 47% more likely to say they strongly agree that “AI models pose a risk to children’s safety” (25% vs 17%). There are slightly more parents in this group.
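The segment comparisons here (and the Anxious Alarmists’ 28% vs 14%) are relative likelihoods: the segment’s share divided by the full sample’s share. A small Python sketch, assuming the second figure in each pair is the full-sample baseline as the segment descriptions imply, shows how "almost twice as likely" and "47% more likely" fall out of the quoted percentages. The function and labels are illustrative, not from the report.

```python
def relative_likelihood(segment_pct: float, sample_pct: float) -> float:
    """Return how many times more often a segment gives an answer than the full sample."""
    if sample_pct <= 0:
        raise ValueError("baseline percentage must be positive")
    return segment_pct / sample_pct


# (segment %, full-sample %) pairs quoted in the segment descriptions.
COMPARISONS = {
    "Diverse Dreamers: AI labs aligned with society": (21, 11),
    "Diverse Dreamers: AI risk to children's safety": (25, 17),
    "Anxious Alarmists: very negative long-term impact": (28, 14),
}

for label, (segment, sample) in COMPARISONS.items():
    ratio = relative_likelihood(segment, sample)
    extra = (ratio - 1) * 100  # e.g. a 1.47x ratio reads as "47% more likely"
    gap = segment - sample     # absolute difference in percentage points
    print(f"{label}: {ratio:.2f}x, {extra:.0f}% more likely, {gap} points apart")
```

This gives about 1.91x for 21% vs 11%, 1.47x for 25% vs 17% and exactly 2.00x for 28% vs 14%. Printing the point gap alongside the ratio is a deliberate choice: a relative ratio can sound dramatic even when the absolute difference is only 8 points.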

They appear more open to, for example, AI in healthcare settings:

(Chart legend: black is the full survey sample, blue is Diverse Dreamers.)

While optimistic, they are not unaware of the harms:

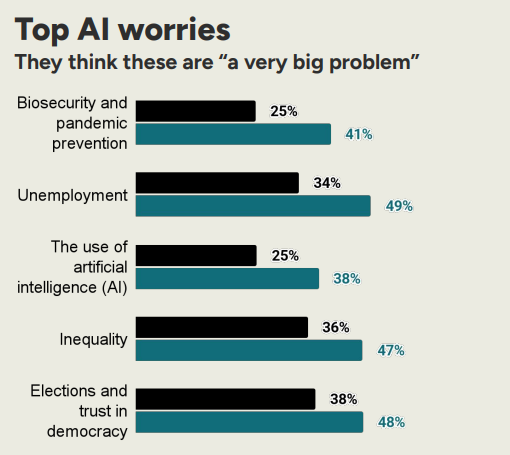

They seem most concerned about pandemics and unemployment, along with AI’s influence on democracy and inequality.

Stressed Strivers

This segment consists of people who are more likely to be younger, lower-income, with young children, and tend to have lower levels of education.

They are open to AI making their lives easier (in a more passive way), even to AI taking over some parts of their financial and healthcare management.

Currently, 1 in 4 remain unsure of the repercussions AI will have on societal issues indicating they’re a swayable audience who haven’t had enough information to decide how they feel about AI and the impacts on the future.

They are often less involved in societal issues, perhaps because they are very busy juggling working life with raising young children, an undertaking likely to occupy much of their time and energy.

While they are more likely to be comfortable with narrow uses of AI, they are also more likely to believe that AI will deepen economic divides and trigger destabilizing economic shifts, to which they would be most vulnerable given their lower-income backgrounds.

This group is acutely aware of both the benefits and the problems, and is a good proxy for the lower-middle class in general.

But they also show uncertainty, confusion and stress due to the potential upheaval of the impacts of AI now and in the future (especially seen in their quotes).

Disclaimer: The survey above was conducted mostly in European countries and could reflect European sensibilities that don’t generalize easily to other countries. Many of the views are therefore Eurocentric in the extreme, and certainly reflect Western populations in typical developed countries.

Addendum: Editor’s Notes on Other Reports and Random Insights

Energy ⚡

10 of the 31 utilities firms in the S&P 500 (32%) added new references to AI’s increasing energy requirements within their risk factors.

The author notes: The impacts of AI and energy interdependence are not just limited to energy utilities. Top US tech firms developing AI and operating their own data centers, such as Meta, Tesla, and Alphabet, added mention of energy access and reliability as critical factors in scaling their AI infrastructure. Tesla writes that “as we continue to develop our artificial intelligence services and products, we may face many additional challenges, including the availability and cost of energy, processing power limitations and the substantial power requirements for our data centers”.

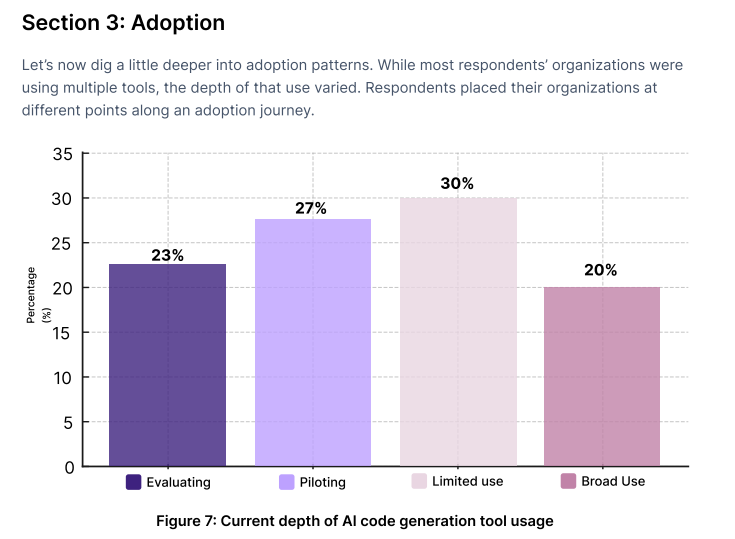

AI Code Generation

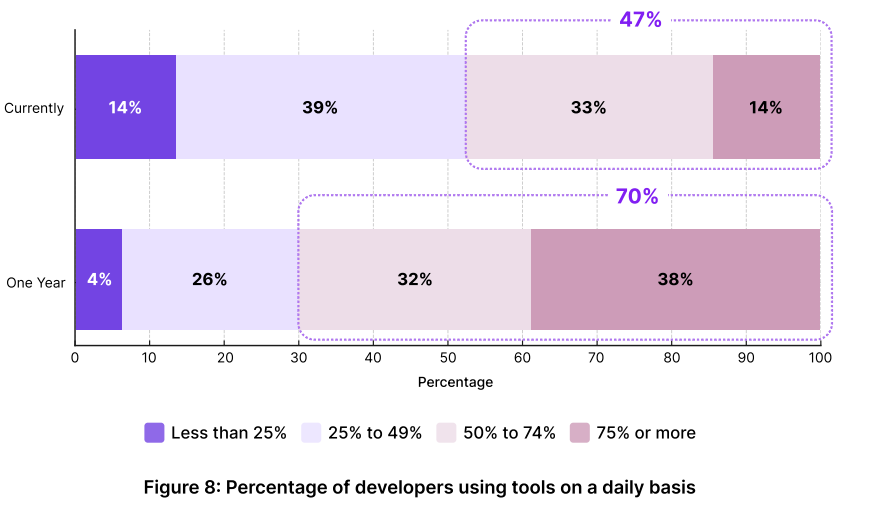

Stacklok asked hundreds of engineering leaders in its survey:

Many of the respondents noted they were in relatively early phases of AI coding adoption at their companies.

AI Coding Tool use is surging among Devs in 2025

Adoption rates are expected to hit nearly 100% in the 2026 to 2029 period (Cursor, Claude Code, GitHub Copilot, etc.).

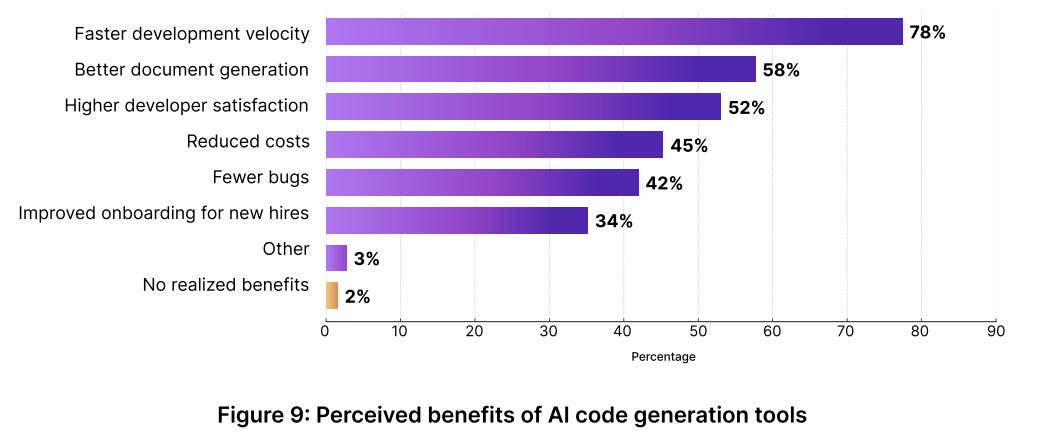

Benefits of AI Code Generating Tools

Speed, reduced friction, reduced costs, fewer bugs, faster onboarding

If AI is boosting productivity in the workplace, you have to imagine that, before 2027, a lot of the benefits land in engineering departments.

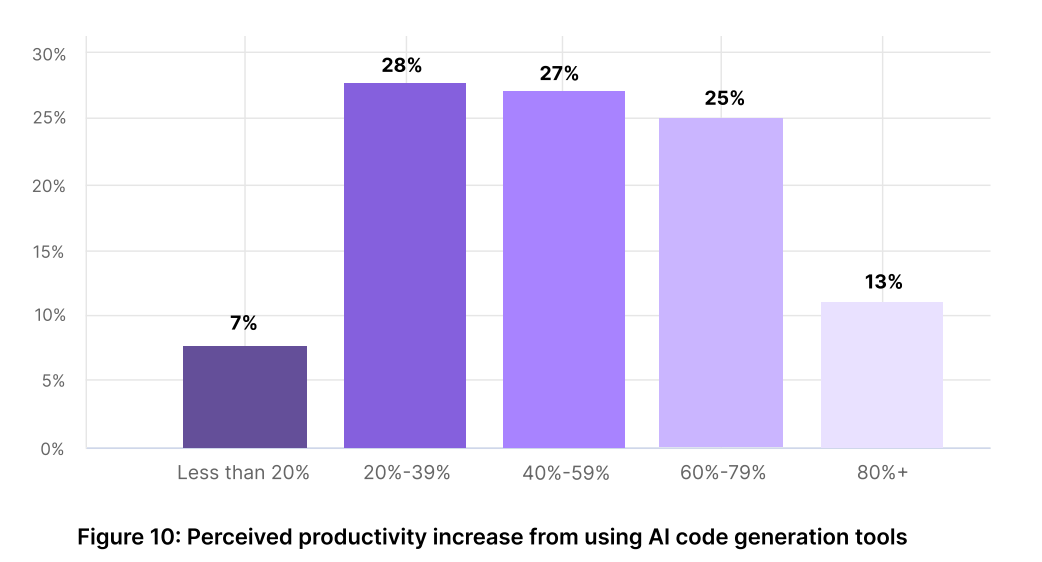

Perceived Productivity Gains by Using AI Coding Tools

The consensus thus puts the benefits in the 20% to 45% range (this might increase as the tools themselves get better).

AI coding is generally thought to be improving rapidly in the mid 2020s.

So are developers’ established best practices for combining AI coding tools.

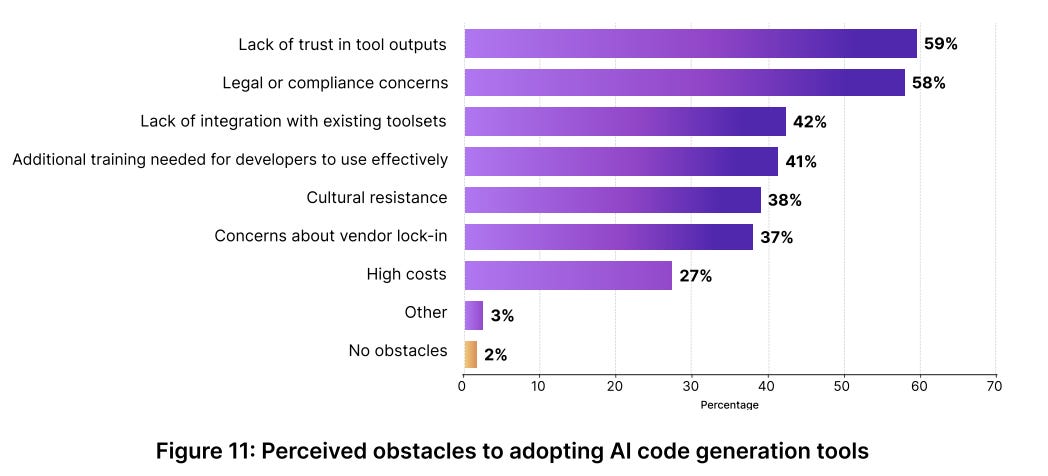

Obstacles to AI Coding Use in Engineering Teams

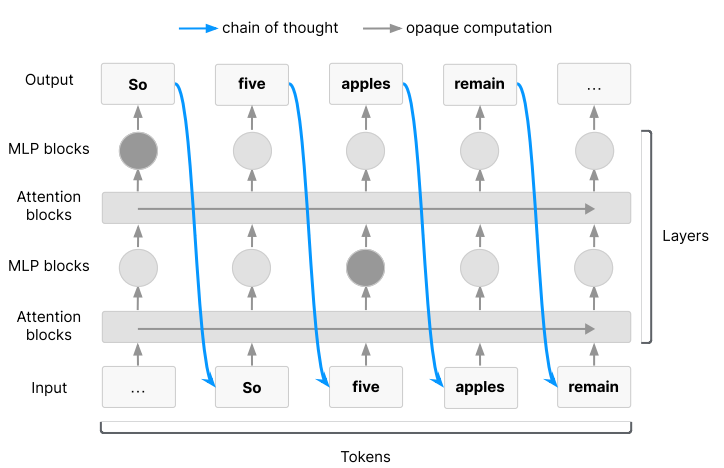

The Chain of Thought Window on LLMs is Closing

The AI researchers from several leading labs say: AI systems that "think" in human language offer a unique opportunity for AI safety: we can monitor their chains of thought (CoT) for the intent to misbehave. Like all other known AI oversight methods, CoT monitoring is imperfect and allows some misbehavior to go unnoticed. Nevertheless, it shows promise and we recommend further research into CoT monitorability and investment in CoT monitoring alongside existing safety methods. Because CoT monitorability may be fragile, we recommend that frontier model developers consider the impact of development decisions on CoT monitorability.

As AI explainability remains poor, AI alignment is almost impossible.

When you ask modern AI systems to solve complex problems, they often "think out loud" by writing out their reasoning step by step. However, other studies have found that this CoT is not always an accurate account of how the model reached its answer.

The key insight is that reasoning models are "explicitly trained to perform extended reasoning in CoT before taking actions or producing final outputs." However, there is a big caveat:

The "reasoning" the model displays might not be genuine or faithful to its actual internal processes.

Chain of Thought Monitorability as a Closing Window into AI Alignment

According to the paper's authors, chain-of-thought monitorability represents both humanity's best current insight into AI reasoning and a rapidly closing window of opportunity.

The author list includes many Anthropic and Google DeepMind employees, as well as a few researchers from more specialized institutes.

However, there are few commercial incentives to win this “race against time”.

As Bowen Baker from OpenAI emphasized: "We're at this critical time where we have this new chain-of-thought thing. It seems pretty useful, but it could go away in a few years if people don't really concentrate on it."

OpenAI is certainly not going to focus on it; it has too many products to work on, as do Meta, xAI, and Alibaba. Can we expect SSI, Thinking Machines, and Anthropic to do a good job on that? I doubt it.

The current moment represents a unique convergence of factors:

AI models powerful enough to require complex reasoning

Architectural constraints that force reasoning externalization

Training methods that haven't yet optimized away transparency

Industry awareness and willingness to preserve this capability

While it’s not strictly a petition, the academic paper seems to make a plea that is falling on deaf corporate ears.

The research is a collaborative effort from dozens of experts across the UK AI Security Institute, Apollo Research, Google DeepMind, OpenAI, Anthropic, Meta, and several universities. It suggests that analyzing an AI’s “chain of thought,” or its process of thinking out loud in text, offers a unique but potentially fragile way to detect harmful intentions.

The researchers argue that, unlike traditional "black-box" AI models, where internal decision-making is opaque, CoT provides a window into the model’s thought process, offering a rare opportunity for oversight.

The researchers warn that the monitorability of reasoning models' chains of thought could be contingent on the way frontier models are trained today. There are multiple ways in which CoT monitorability could be degraded. Other researchers believe it will already have been degraded by late 2025.

Competing Evidence and Ongoing Challenges

The optimism around CoT monitoring faces significant challenges from recent research, including studies by Anthropic showing that reasoning models often hide their true thought processes even when explicitly asked to show their work (already showing deception). In controlled experiments:

Claude 3.7 Sonnet mentioned helpful hints only 25% of the time

DeepSeek's R1 model acknowledged hints 39% of the time

Models frequently constructed false justifications rather than admitting they used questionable shortcuts

It may therefore already be too late to expect CoT reasoning and ITC (inference-time compute) to provide a “window” into AI explainability. It seems that even AI researchers, some potentially paid millions of dollars, still operate in a “black-box” environment.

This suggests that even current CoT monitoring may be less reliable than safety advocates hope, and that the window for effective monitoring may already be narrower than initially believed.

What do you think?

If AI keeps advancing the way it has over the last three years, humanity and civilization may have even less agency by 2027 or in the 2030s. If even AI researchers working on safety and alignment seem confused about this, it’s not a terribly good sign.
