What is OpenAI's Operator and Blueprint? History and Tips of Prompt Engineering from 2020 to 2025 ✨

Can OpenAI's Operator compete with Anthropic's Computer Use and Google Jarvis? Plus, an insider's history of prompt engineering with practical tips, and why OpenAI's Blueprint has me worried.

As we mark the two-year anniversary of ChatGPT’s launch, I wanted to reshare Mike Taylor’s deep dive on prompting and its recent history. Mike wrote the book on AI prompting, titled “Prompt Engineering for Generative AI: Future-Proof Inputs for Reliable AI Outputs”.

Mike’s work has appeared multiple times in Lenny’s Newsletter, on Ben’s Bites, and on Every.to. Later in this article, for premium readers, I’m also going to cover the new publicity around OpenAI’s Operator tool and analyze the AI infrastructure blueprint OpenAI presented in Washington. Skip further down in the article if that’s of greater interest to you.

In a nutshell, OpenAI is set to launch a new AI agent called Operator in January 2025. This tool is designed to autonomously perform various tasks on behalf of users, such as booking travel, writing code, and conducting research.

🌟📋🌱 (November, 2024)

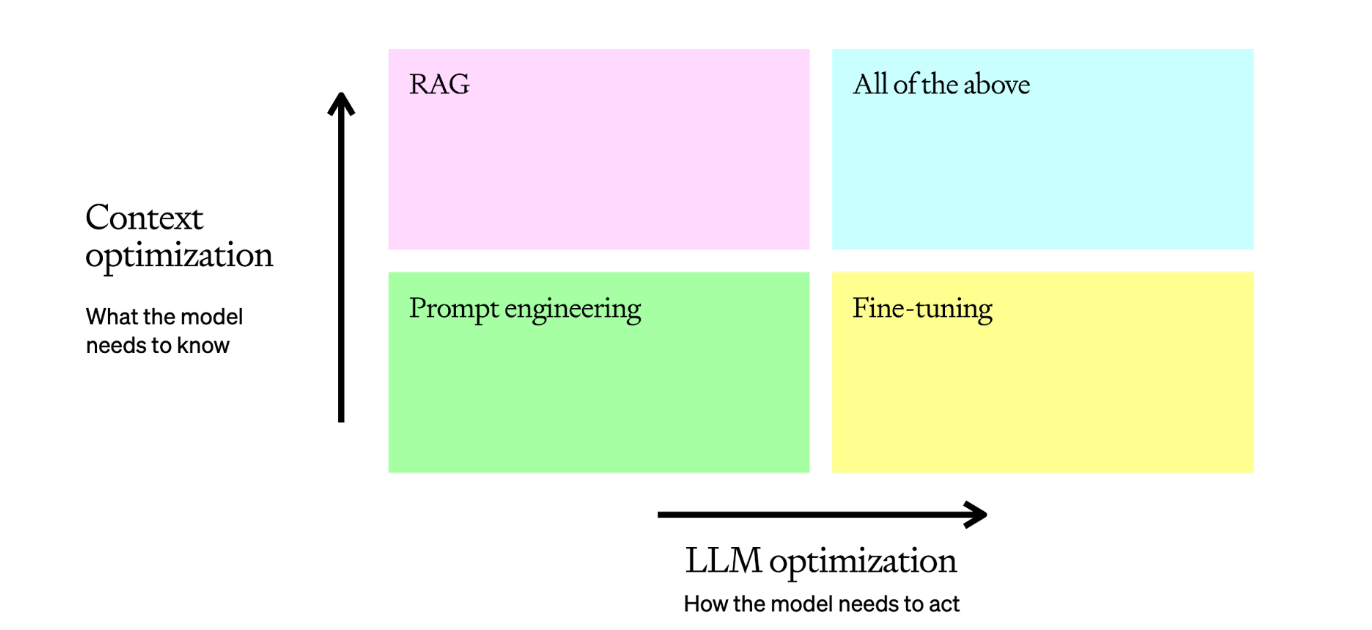

Recently OpenAI shared a guide on how to improve LLMs’ accuracy and consistency. Ideally, your knowledge of AI tools should intersect with prompt engineering in your own work tasks; that is how your AI literacy really improves. Before we delve into the history of Prompt Engineering and Mike’s own journey with it, let’s familiarize ourselves with the basics.

Two years after ChatGPT went live, Prompt Engineering is a new skill set that many people use to get ahead as students and professionals, and in their own research and interactions with chatbots and frontier models.

So much of AI literacy, in my mind, remains knowing which tool to use, when, and how. With frontier models, a big part of the “how” is still prompt engineering. Unless the human-AI interface evolves rapidly, it will remain all about prompt engineering in these nascent first years of Generative AI.

Prompt engineering is the art and science of designing and optimizing prompts to guide AI models, particularly large language models (LLMs), towards generating the desired responses.

Read Anthropic’s Prompting Overview here.

By Mike Taylor, Liverpool Area. First written in August 2024.

This is a 20-minute version of his original article in audio format.

To dive deeper into Mike Taylor’s work, these are the articles of his that stood out to me.

Read more by the Author:

Why AIs Need to Stop and Think Before They Answer

Prompt Engineering: From Words to Art and Copy

How close is AI to replacing product managers?

Putting a Prompt into Production

Foundational Prompting Tips

While the field of prompt engineering has evolved rapidly with advanced techniques like chain-of-thought prompting and emotional stimuli, it's important to remember that certain fundamental principles remain effective regardless of the AI model or application. Whether you're a beginner just starting out or an experienced user working with cutting-edge models, these basic techniques will always help you get better results from AI:

Be Specific: Be specific in your requests. Rather than asking broad questions, focus on detailing exactly what you need. For instance, instead of asking generally about video conferencing, you might say, "Explain step-by-step how to set up and join a Zoom meeting on a Windows laptop, including how to test audio and video." This level of detail guides the AI to provide more precise and useful information.

Break Down Complex Tasks: Break down complex tasks into smaller, manageable parts. When tackling a home renovation project, for example, don't ask about the entire process at once. Instead, inquire about specific aspects like the key steps in planning a kitchen remodel, the pros and cons of different countertop materials, or budget-friendly options for updating a kitchen without full renovation. This approach allows you to gather detailed information at each stage of your project.

Provide Context: Provide context to get more relevant responses. By offering background information, you enable the AI to tailor its answers to your specific situation. For instance, when planning a dinner party, explain, "I'm hosting a dinner party for 6 people, including one vegetarian and one person with a gluten allergy. Suggest a three-course menu that can accommodate these dietary restrictions while impressing my guests." This context allows the AI to offer more appropriate and helpful suggestions.

Use Follow-up Questions: Use follow-up questions to refine and expand upon the information you receive. If you're working on improving your public speaking skills, you might start with a general question about techniques, then ask more specific queries about managing nervousness, structuring a talk, or improving voice projection. This iterative approach helps you dig deeper into the topic and gather more comprehensive information.

Verify Important Information: Always verify important information, especially for critical matters. Instead of asking directly for investment advice, you might say, "Can you list key questions I should ask a financial advisor about retirement planning? Include topics like risk assessment, diversification strategies, and long-term care considerations." This approach encourages consulting with professionals for crucial decisions while using AI to prepare for those conversations.

These foundational tips work well with everything from ChatGPT to Claude and open-source models. They're useful for anyone exploring AI technologies, regardless of experience level. By mastering these basics, you'll be well-prepared to experiment with more advanced techniques as you become more comfortable with AI interactions, and test and learn what works for you.

Historical Context: Prompt Engineering from 2020 to 2025

Since the introduction of large language models (LLMs), prompt engineering has become an in-demand skill, as people and organizations look for ways to get better results from generative AI models. In this blog post, we'll take a journey through the key developments that have shaped the field, from the early days of GPT-3 to the cutting-edge techniques of today, with a look ahead to what’s next.

In order to understand the future of prompt engineering, it’s important to see what came before. This timeline isn’t exhaustive, but these have been the most personally impactful events on my own work as a prompt engineer. If you want to dive deeper, I covered these techniques and more in my book “Prompt Engineering for Generative AI” (O’Reilly, 2024).

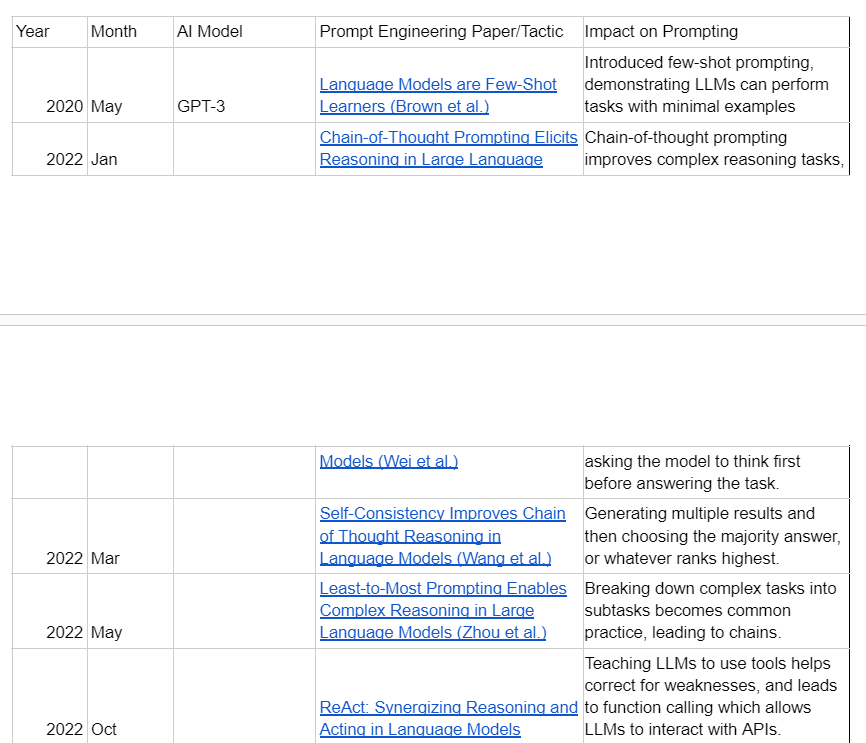

🐝 May 2020: GPT-3 and Few-Shot Prompting

The release of GPT-3 in May 2020 marked a watershed moment in natural language processing. The paper "Language Models are Few-Shot Learners" by Brown et al. popularized the concept of few-shot prompting, demonstrating that LLMs could perform tasks with minimal examples. This breakthrough challenged the traditional paradigm of machine learning, which typically required large amounts of labeled data for training.

Few-shot prompting opened up new possibilities for AI applications, allowing models to adapt to new tasks on the fly. It significantly reduced the time and resources needed for task-specific fine-tuning, making LLMs more versatile and accessible for a wide range of applications. This development laid the foundation for many of the prompt engineering techniques that would follow.
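
To make few-shot prompting concrete, here is a minimal Python sketch that assembles a prompt from an instruction, a handful of labeled examples, and a new input. The `Input:`/`Output:` layout and the function name `build_few_shot_prompt` are illustrative choices, not a format taken from the GPT-3 paper; the resulting string would be sent to whichever model you use.

```python
from typing import List, Tuple


def build_few_shot_prompt(instruction: str,
                          examples: List[Tuple[str, str]],
                          query: str) -> str:
    """Build a few-shot prompt: instruction, labeled examples, then the new input.

    The prompt ends with an empty "Output:" so the model continues the pattern
    and supplies the missing label itself.
    """
    lines = [instruction, ""]
    for text, label in examples:
        lines += [f"Input: {text}", f"Output: {label}", ""]
    lines += [f"Input: {query}", "Output:"]
    return "\n".join(lines)


# Usage: two labeled reviews demonstrate the task; the third is left for the model.
prompt = build_few_shot_prompt(
    "Classify the sentiment of each review as positive or negative.",
    [("The battery lasts all day.", "positive"),
     ("It stopped working after a week.", "negative")],
    "Setup took two minutes and it just works.",
)
print(prompt)
```

No weights change here: the examples live entirely in the prompt, which is why swapping in a new task only means swapping the examples.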

❄️ January 2022: Chain-of-Thought Prompting

Wei et al. introduced chain-of-thought prompting in their paper "Chain-of-Thought Prompting Elicits Reasoning in Large Language Models." This technique improved performance on complex reasoning tasks by asking the model to think through its reasoning process before providing an answer. It marked a significant step towards more transparent and interpretable AI decision-making.

Chain-of-thought prompting not only enhanced the accuracy of LLMs on complex tasks but also provided insights into the model's reasoning process. This transparency made it easier for humans to verify and trust the model's outputs. Moreover, it opened up new avenues for debugging and improving model performance by identifying where in the chain of reasoning errors or biases might occur.
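
A minimal sketch of a few-shot chain-of-thought prompt, assuming a plain-text, completion-style model. The worked exemplar follows the pattern used by Wei et al. (reasoning first, then a fixed “The answer is” phrase), and `extract_answer` is a hypothetical helper that pulls the final number out of the model’s reasoning so the answer can be checked or compared.

```python
import re
from typing import Optional

# One worked exemplar in the style used by Wei et al. (2022): the reasoning
# comes first, and the answer closes with a fixed "The answer is N." phrase.
COT_EXEMPLAR = (
    "Q: Roger has 5 tennis balls. He buys 2 more cans of tennis balls. "
    "Each can has 3 tennis balls. How many tennis balls does he have now?\n"
    "A: Roger started with 5 balls. 2 cans of 3 tennis balls each is 6 tennis "
    "balls. 5 + 6 = 11. The answer is 11.\n\n"
)


def build_cot_prompt(question: str) -> str:
    """Prepend the worked exemplar so the model imitates reasoning before answering."""
    return f"{COT_EXEMPLAR}Q: {question}\nA:"


def extract_answer(completion: str) -> Optional[str]:
    """Return N from the last 'The answer is N' in the completion, or None if absent."""
    matches = re.findall(r"The answer is\s+(-?\d+(?:\.\d+)?)", completion)
    return matches[-1] if matches else None
```

Keeping the final phrase fixed is a design choice that makes the visible reasoning easy to audit while the answer stays machine-readable.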

🍀 March 2022: Self-Consistency in Chain of Thought

Building on chain-of-thought prompting, Wang et al. proposed the concept of self-consistency in their paper "Self-Consistency Improves Chain of Thought Reasoning in Language Models." This approach involved generating multiple results and selecting the majority answer or the highest-ranking option, further improving the reliability of LLM outputs.

Self-consistency addressed one of the key challenges in LLM applications: the variability of outputs. By generating multiple chains of thought and comparing their conclusions, the technique could identify more robust and consistent answers. This method not only improved accuracy but also provided a measure of confidence in the model's outputs, making it particularly valuable for critical applications where reliability is paramount.
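
Once several chains of thought have been sampled (typically at a non-zero temperature) and their final answers extracted, self-consistency reduces to a majority vote. This sketch assumes the answers have already been parsed into strings; the agreement fraction it returns is one simple way to get the confidence signal described above, not a metric defined in the paper.

```python
from collections import Counter
from typing import Iterable, Optional, Tuple


def self_consistency(final_answers: Iterable[Optional[str]]) -> Tuple[Optional[str], float]:
    """Majority vote over the final answers of several sampled reasoning chains.

    Returns the most common answer and the fraction of valid samples that agree
    with it. Unparseable samples (None) are ignored; ties go to the answer seen first.
    """
    valid = [a for a in final_answers if a is not None]
    if not valid:
        return None, 0.0
    answer, votes = Counter(valid).most_common(1)[0]
    return answer, votes / len(valid)


# Illustrative, made-up answers parsed from five sampled chains of thought.
samples = ["18", "18", "26", None, "18"]
print(self_consistency(samples))  # -> ('18', 0.75)
```

A low agreement fraction is a cue to sample more chains or route the question to a human.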

🌼 May 2022: Least-to-Most Prompting

Zhou et al. introduced the least-to-most prompting technique in their paper "Least-to-Most Prompting Enables Complex Reasoning in Large Language Models." This method involves breaking down complex tasks into subtasks, which became a common practice in prompt engineering and led to the development of prompt chaining.

Least-to-most prompting addressed the challenge of tackling complex problems that were beyond the scope of a single prompt. By decomposing tasks into smaller, manageable steps, it allowed LLMs to approach problems more systematically. This technique not only improved performance on complex tasks but also made the problem-solving process more transparent and easier to debug.
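
Least-to-most prompting runs in two stages: decompose the problem, then solve the subquestions in order, feeding each answer into the next prompt. The sketch below assumes a generic `call_model` function (prompt in, text out) as a hypothetical placeholder for any LLM API, and the prompt wording is illustrative rather than the paper’s.

```python
from typing import Callable, List, Tuple

# Hypothetical stand-in for any LLM call: prompt in, completion text out.
ModelFn = Callable[[str], str]


def least_to_most(question: str, call_model: ModelFn) -> Tuple[List[str], str]:
    """Decompose a problem into subquestions, then answer them in order."""
    # Stage 1: decomposition, one subquestion per line, easiest first.
    decomposition = call_model(
        "Break this problem into simpler subquestions, one per line, "
        "easiest first, ending with the original question. "
        f"Do not answer them.\n\nProblem: {question}"
    )
    subquestions = [line.strip() for line in decomposition.splitlines() if line.strip()]

    # Stage 2: sequential solving. Each prompt contains the problem plus every
    # earlier subquestion and its answer, so later steps can build on them.
    context = f"Problem: {question}\n"
    answers: List[str] = []
    for sub in subquestions:
        answer = call_model(f"{context}\nQ: {sub}\nA:").strip()
        answers.append(answer)
        context += f"\nQ: {sub}\nA: {answer}"

    # The last subquestion is meant to be the original problem, so its answer is the final one.
    final = answers[-1] if answers else ""
    return answers, final
```

To try it without an API key, pass a stub function that returns canned text; in practice `call_model` would wrap your provider’s SDK. Each intermediate answer is returned too, which is what makes this approach easy to debug.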

🎃 October 2022: ReAct - Reasoning and Acting

The ReAct framework, introduced in "ReAct: Synergizing Reasoning and Acting in Language Models," taught LLMs to use tools to compensate for their weaknesses. This development paved the way for function calling, allowing LLMs to interact with APIs and expand their capabilities beyond text generation.

ReAct represented a significant step towards more capable and versatile AI assistants. By enabling LLMs to reason about when and how to use external tools, it addressed limitations in areas such as up-to-date information retrieval, complex calculations, and interaction with external systems. This framework laid the groundwork for more advanced AI systems that could seamlessly integrate with a wide range of applications and services.
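
A stripped-down ReAct loop can be sketched in a few lines: the model writes a Thought and an Action, the program runs the named tool, and the result is appended as an Observation for the next step. The action syntax loosely follows the paper’s `Search[...]`/`Finish[...]` style, but `call_model`, the tool registry, and the step limit are assumptions made for this illustration.

```python
import re
from typing import Callable, Dict

# Hypothetical stand-in for any LLM call: prompt in, completion text out.
ModelFn = Callable[[str], str]

# Matches actions written like "Action: Search[Acme Corp]" or "Action: Finish[Springfield]".
ACTION_RE = re.compile(r"Action:\s*(\w+)\[(.*)\]")


def react(question: str, call_model: ModelFn,
          tools: Dict[str, Callable[[str], str]], max_steps: int = 5) -> str:
    """Alternate model reasoning with tool calls until the model emits Finish[...]."""
    transcript = f"Question: {question}\n"
    for _ in range(max_steps):
        # The model sees the whole transcript and writes its next Thought and Action.
        step = call_model(transcript)
        transcript += step.rstrip() + "\n"
        match = ACTION_RE.search(step)
        if match is None:
            transcript += "Observation: Please reply with an Action.\n"
            continue
        name, arg = match.group(1), match.group(2)
        if name == "Finish":
            return arg
        tool = tools.get(name)
        observation = tool(arg) if tool else f"Unknown tool: {name}"
        # Feed the tool result back so the next Thought can use it.
        transcript += f"Observation: {observation}\n"
    return "No answer within the step limit."
```

In practice you would typically stop the model’s generation before it writes its own “Observation:” line, so that only tools ever supply observations; this sketch simply assumes each call returns one Thought/Action pair.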

🍂 November 2022: The ChatGPT Revolution

The release of ChatGPT brought conversational prompting into the mainstream. It demonstrated the potential of LLMs to engage in human-like dialogue, understand context over multiple turns of conversation, and adapt to various user needs and communication styles. This was when prompt engineering became a viable career path, and I started earning enough from training and freelancing to go full time as a prompt engineer.

With ChatGPT, system prompts gained importance as a way to set the context and behavior of the AI assistant. This development highlighted the significance of carefully crafted instructions in guiding the model's responses. It also sparked widespread public interest in AI capabilities, leading to increased focus on ethical considerations and potential applications of conversational AI across various industries.

🌷 March 2023: GPT-4 and Improved Reasoning

With the release of GPT-4, LLMs became significantly better at reasoning and following instructions. This advancement made prompt engineering easier and led to more reliable response formats, expanding the potential applications of AI in complex problem-solving scenarios. A lot of the hacky tricks we had to use to get GPT-3 to behave were no longer necessary, and we became less reliant on finding that one magic word to put in a prompt.

GPT-4's improved capabilities allowed for more nuanced and context-aware interactions. It demonstrated enhanced ability to understand and execute multi-step instructions, leading to more sophisticated applications in areas such as content creation, analysis, and decision support. This development also highlighted the importance of clear and well-structured prompts to fully leverage the model's capabilities.

🦋 May 2023: Tree of Thoughts

The "Tree of Thoughts" approach introduced a method for simulating multiple experts thinking through steps in reasoning and correcting each other until an answer is found. This technique represented a more advanced form of collaborative reasoning within a single LLM. This isn’t in common use yet, but the idea of multiple agents working together to solve a task is likely to see a lot of play over the next few years.

By exploring multiple reasoning paths simultaneously and allowing for self-correction, the Tree of Thoughts method improves performance on particularly challenging and open-ended tasks. It demonstrated the potential for LLMs to engage in more human-like problem-solving processes, considering alternative viewpoints and revising initial assumptions when necessary.

🌞 June 2023: LLM-as-a-Judge

Zheng et al. demonstrated in their work "Judging LLM-as-a-Judge with MT-Bench and Chatbot Arena" that using an LLM to judge the performance of another LLM can yield results that reasonably agree with human ratings. This finding opened up new possibilities for automated evaluation and improvement of AI models. It has had one of the biggest impacts on how quickly I can get results, because I no longer have to wait for a human expert to judge every experiment I run.

The LLM-as-a-Judge approach provided a scalable method for assessing the quality of AI-generated content and responses. While not a perfect substitute for human evaluation, it offered a valuable tool for rapid iteration and improvement in AI development. This technique also raised interesting questions about AI self-assessment and the potential for creating more self-aware and self-improving AI systems.
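
In practice, LLM-as-a-Judge comes down to a grading prompt plus a parser. The sketch below scores a batch of (question, answer) pairs, for example the outputs of one prompt variant, and averages the scores so variants can be compared. The rubric wording and the “Rating: [[N]]” output format are loosely modeled on the MT-Bench single-answer grading prompt but are assumptions here, as is the `judge` callable.

```python
import re
from statistics import mean
from typing import Callable, List, Optional, Tuple

# Hypothetical stand-in for any LLM call: prompt in, completion text out.
ModelFn = Callable[[str], str]

JUDGE_TEMPLATE = (
    "You are an impartial judge. Rate the answer to the question below for "
    "helpfulness, accuracy, and relevance on a scale of 1 to 10. Explain briefly, "
    'then give the score strictly in the format "Rating: [[N]]".\n\n'
    "[Question]\n{question}\n\n[Answer]\n{answer}\n"
)


def parse_rating(judgement: str) -> Optional[int]:
    """Return the 1-10 score from 'Rating: [[N]]', or None if missing or out of range."""
    match = re.search(r"Rating:\s*\[\[(\d+)\]\]", judgement)
    if match is None:
        return None
    score = int(match.group(1))
    return score if 1 <= score <= 10 else None


def evaluate(pairs: List[Tuple[str, str]], judge: ModelFn) -> Optional[float]:
    """Average judge score over (question, answer) pairs; unparseable verdicts are skipped."""
    scores = []
    for question, answer in pairs:
        verdict = judge(JUDGE_TEMPLATE.format(question=question, answer=answer))
        score = parse_rating(verdict)
        if score is not None:
            scores.append(score)
    return mean(scores) if scores else None
```

Asking for a fixed, bracketed score format is what keeps the verdicts machine-readable at scale; the short explanation is still worth reading when a score looks surprising.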

🍉 July 2023: Emotional Stimuli in Prompting

Research showed that incorporating emotional stimuli or even making threats in prompts could improve LLM performance, particularly in avoiding "lazy" responses. This finding highlighted the complex relationship between language, emotion, and AI behavior.

This was a real problem in the winter of 2023, and emotion prompting was the most effective method for dealing with it. With subsequent releases OpenAI has largely fixed the problem, and this technique is less needed now with GPT-4o and other state-of-the-art models.

🌻 August 2023: Role-Play Prompting

Asking an LLM to adopt a specific persona was a common technique as far back as I can remember, but nobody was sure whether it actually helped or was just superstition. The effectiveness of role-play prompting was put on a scientific footing by the paper “Better Zero-Shot Reasoning with Role-Play Prompting”, which showed that asking LLMs to assume specific roles can indeed improve performance in certain areas.

Role-play prompting demonstrated particular effectiveness in tasks requiring specialized knowledge or unique perspectives. By instructing the model to "act as" a specific type of expert or character, users could elicit more focused and relevant responses. This technique also showed potential in creative writing and storytelling applications, allowing for more diverse and character-driven narratives.
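
A minimal sketch of role-play prompting with a chat-style API: the persona goes into the system message and the task into the user message. The role/content dictionary shape mirrors what common chat APIs accept, but the persona wording and the helper name `role_play_messages` are illustrative assumptions; the paper’s own role-setting prompts differ.

```python
from typing import Dict, List

# Chat-style messages as role/content dicts, the shape most chat APIs accept.
Message = Dict[str, str]


def role_play_messages(persona: str, task: str) -> List[Message]:
    """Put the persona in the system message and the actual request in the user turn."""
    return [
        {"role": "system",
         "content": f"You are {persona}. Answer as that expert would, using the "
                    "field's terminology and flagging anything outside your expertise."},
        {"role": "user", "content": task},
    ]


# Usage: the same task framed for a specific kind of expert.
messages = role_play_messages(
    "a senior Python code reviewer",
    "Review this function for unhandled edge cases.",
)
```

Keeping the persona in the system message means it persists across follow-up turns instead of being restated in every question.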

🍁 September 2023: Automated Prompt Engineering

Khattab et al. introduced DSPy, a framework for automating prompt engineering by using LLMs to rewrite prompt instructions and add relevant examples. This development marked a significant step towards reducing the manual effort required in crafting effective prompts. It built on the work of previous papers like “Large Language Models Are Human-Level Prompt Engineers” (Zhou et al., 2022), with the idea that we can use AI to automate our prompt engineering work.

Automated prompt engineering promised to make AI more accessible to non-experts by handling the complexities of prompt crafting behind the scenes. It also opened up possibilities for dynamic prompt optimization, where prompts could be automatically refined based on task performance and evaluation scores. This approach has the potential to significantly accelerate the development and deployment of AI applications across various domains, and is my number one focus area at present.
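
DSPy’s own optimizers are more involved, so rather than reproduce its API, here is a library-free sketch of the core loop behind automated prompt engineering: have a model propose rewrites of a seed instruction, score every candidate on a small labeled dev set, and keep the best. `call_model`, the meta-prompt wording, and exact-match accuracy as the metric are all assumptions for illustration.

```python
from typing import Callable, List, Sequence, Tuple

# Hypothetical stand-in for any LLM call: prompt in, completion text out.
ModelFn = Callable[[str], str]


def propose_candidates(seed: str, call_model: ModelFn, n: int = 4) -> List[str]:
    """Keep the seed instruction and add n model-written rewrites of it."""
    meta_prompt = (
        "Rewrite the following instruction so a language model follows it more "
        f"reliably. Return only the new instruction.\n\n{seed}"
    )
    return [seed] + [call_model(meta_prompt).strip() for _ in range(n)]


def accuracy(instruction: str, dev_set: Sequence[Tuple[str, str]],
             call_model: ModelFn) -> float:
    """Exact-match accuracy of the instruction on (input, expected_output) pairs."""
    hits = sum(
        call_model(f"{instruction}\n\nInput: {x}\nOutput:").strip().lower() == y.lower()
        for x, y in dev_set
    )
    return hits / len(dev_set)


def select_best_instruction(candidates: List[str],
                            dev_set: Sequence[Tuple[str, str]],
                            call_model: ModelFn) -> Tuple[str, float]:
    """Score every candidate and return the best one with its score (first wins ties)."""
    scored = [(c, accuracy(c, dev_set, call_model)) for c in candidates]
    return max(scored, key=lambda pair: pair[1])
```

With a real model, sampling the rewrites at a non-zero temperature gives varied candidates, and the loop can be repeated with the current best instruction as the new seed. The metric could just as well be an LLM-as-a-Judge score when outputs are free text.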

🌰 November 2023: Mega Prompts and Multimodal Prompting

The release of GPT-4V with a 128K token context window (roughly 96,000 words, at the common rule of thumb of about 0.75 words per token) led to the rise of "mega prompts": detailed instructions and relevant context spanning two or more pages. This expanded context window allowed for more comprehensive and nuanced interactions with the AI model. Andrew Ng, former head of Google Brain, has said that many of the AI applications he sees use mega prompts with pages full of instructions, and this is something I’ve observed myself.

Additionally, the ability to process images opened up new possibilities in multimodal prompting. This development enabled AI to understand and respond to prompts that combined text and visual information, significantly expanding the range of tasks that could be addressed. From image analysis to visual question answering, multimodal prompting represented a major leap forward in AI capabilities.

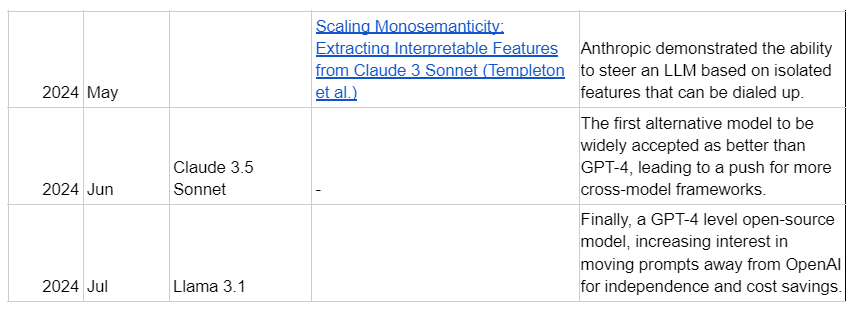

💐 May 2024: Feature Isolation and Steering

Anthropic's research on "Scaling Monosemanticity: Extracting Interpretable Features from Claude 3 Sonnet" demonstrated the ability to steer an LLM based on isolated features that can be adjusted, offering new levels of control. The “Golden Gate Claude” model they released (a model that couldn’t help but talk about the Golden Gate Bridge) was funny, but hinted at stronger capabilities.

Instead of dialing up the “Golden Gate Bridge” feature, you could find the features responsible for being good at coding or strong at reasoning and dial them up instead, or dial down the features responsible for undesirable responses, like racist remarks or bugs in code. This capability is not yet publicly available, but it promises to enhance the reliability and specificity of AI systems across various applications.

🍹 June 2024: The Rise of Claude 3.5 Sonnet

The release of Claude 3.5 Sonnet marked a significant milestone as the first alternative model widely accepted as superior to GPT-4. This development has led to increased interest in cross-model frameworks for prompt engineering. I use this model for all of my coding and creative writing tasks, and have started shifting client workloads as well.

Claude 3.5 Sonnet's success highlighted the importance of diversity in the AI ecosystem. It spurred efforts to develop prompt engineering techniques that could work effectively across different models, promoting interoperability and reducing dependency on any single AI provider. This trend towards model-agnostic prompt engineering promises to make AI applications more robust and adaptable, and competition among vendors can only benefit consumers of AI models through lower costs and faster feature development.

😎 July 2024: Open-Source Breakthrough with Llama 3.1

The introduction of Llama 3.1, an open-source model performing at GPT-4 level, has sparked renewed interest in moving prompts away from proprietary models. This shift is driven by a desire for independence and cost savings in AI applications: if you can avoid paying fees to OpenAI and sending them your data, you will. Even if OpenAI or Anthropic pulls further ahead with GPT-5 or Claude 4, the current Llama model is good enough to do 80% of the tasks I have used AI for, and it will only get better as the open source community fine-tunes it and adds additional capabilities.

Llama 3.1's release democratized access to high-performance AI models, allowing smaller organizations and individual developers to build sophisticated AI applications without relying on costly API services. This development accelerated innovation in prompt engineering, as a wider community of researchers and developers began experimenting with and refining prompting techniques on powerful, freely available models. While the largest LLMs need less instruction to do a good job, a shift towards smaller, on-device, open-source models will necessitate stronger prompt engineering skills.

🔮 What will the trends be in 2025?

As we look back on the evolution of prompt engineering, it's clear that the field has undergone rapid and significant changes. From the early days of few-shot learning to the current era of multimodal, emotionally-aware, and highly controllable LLMs, prompt engineers have continuously adapted their techniques to harness the growing capabilities of AI models.

The latest developments all point towards a future where prompt engineering becomes more automated, model-agnostic, and finely tuned over the next year. With the rise of open-source alternatives and the increasing sophistication of LLMs, we can expect prompt engineering to play an even more crucial role in unlocking the full potential of AI across various domains. Rather than prompting back and forth with ChatGPT, expect to be writing instructions and personas for multiple AI agents, who collaborate on your task.

As we move forward, the challenge for prompt engineers will be to stay ahead of these rapid developments, continually refining their skills and exploring new frontiers in AI interaction. Technical abilities will continue to be essential, as these AI systems get more complex with the introduction of agents. With LLMs starting to grade their own homework, the focus of prompt engineering will shift towards monitoring performance, and finding and fixing edge cases that the AI can’t handle yet. End of Mike Taylor’s guest post.

In the news cycle related to OpenAI, we have two major developments.

What is OpenAI Operator?

The rest of the article is for premium readers. It analyzes what this tool could mean, and whether the diminishing returns we are seeing in frontier models are even compatible with the powerful, useful AI agents promised for 2025. We also explore OpenAI’s blueprint for U.S. AI infrastructure at some length.