How to use NotebookLM for personalized knowledge synthesis

Two powerful workflows that unlock everything else. Intro: Golden Age of AI Tools and AI agent frameworks begins in 2025.

Are we entering a golden age of AI tools in 2025? Scroll down if you want to get to the NotebookLM guide in a hurry. Let me first build a quick case and talk about some recent trends in Enterprise AI, agent frameworks and open-source innovation, how they intersect with capex on AI products and tool development, and ultimately how this all begins to converge in 2025. Recently OpenAI released a free online course designed to help K-12 teachers learn how to bring ChatGPT, the company’s AI chatbot platform, into their classrooms.

Meta also announced that it’s forming a product group to build AI tools for businesses. They even poached Clara Shih from Salesforce AI to lead it. Shih will be responsible for developing and monetizing tools within Meta's AI portfolio that cater specifically to businesses (i.e., B2B).

At Microsoft Ignite, Microsoft revealed its plans for AI agents in more detail. What’s clear is that 2025 will be the year AI tool products begin to mature. Many of the projects and AI tools Google DeepMind has been working on will also see the light of day. We’re just at the two-year mark since ChatGPT went live, so Generative AI products have a lot of growing up to do.

The way TikTok maker ByteDance is integrating Generative AI into Ed-Tech products is also notable and rarely covered or even mentioned. Gauth in particular, a homework app, seems to be trending. For a better understanding of what ByteDance is doing so well, check out Gauth, Coze, Cici, BagelBell, Hypic and Doubao. In terms of consumer app development integrating Generative AI, ByteDance is way ahead of even many Western giants.

Since the advent of TikTok, ByteDance has learned a lot about how to build, scale and market mobile apps, and how to integrate new technology, to reach actual consumer product-market fit. In 2025 I expect their products to make rapid progress in B2C consumer app adoption. Recently DeepSeek, an AI research company funded by quantitative traders in China, released a preview of DeepSeek-R1, which the firm claims is a reasoning model competitive with OpenAI’s o1. Chinese labs building models like Qwen and DeepSeek-R1-Lite are only going to continue in 2025.

Meanwhile you have Google, Meta, Microsoft, Anthropic and OpenAI scrambling to build more Generative AI tools, utility, features and agents. One of the major trends for 2025 is how AI tools will evolve as products and increase their adoption levels among mainstream users. Google’s multiplicity of AI products is really a playground of prototypes. Google gives us access to a variety of experimental tools that aren’t full-fledged products quite yet. The “cousins” of NotebookLM’s Audio Overviews, like Illuminate, Learn About and others on the way, are particularly exciting.

What is Google Learn About?

Google's new AI tool, Learn About, is designed as a conversational learning companion that adapts to individual learning needs and curiosity. It allows users to explore various topics by entering questions, uploading images or documents, or selecting from curated topics. The tool aims to provide personalized responses tailored to the user's knowledge level, making it user-friendly and engaging for learners of all ages.

Is Generative AI leading to a new take on Educational technology? It certainly appears promising heading into 2025.

The Learn About tool utilizes the LearnLM AI model, which is grounded in educational research and focuses on how people learn. Google insists that unlike traditional chatbots, it emphasizes interactive and visual elements in its responses, enhancing the educational experience. For instance, when asked about complex topics like the size of the universe, Learn About not only provides factual information but also includes related content, vocabulary building tools, and contextual explanations to deepen understanding.

🌟📋🌱 (November, 2024)

Perplexity - the Advent of AI Commerce

Perplexity has been partnering with U.S. campuses, striking deals to offer students Perplexity Pro. They are also piloting whether Perplexity can act as a shopping assistant with a “Shop like a Pro” feature. To become more commercially viable, it’s not surprising to see Perplexity position itself as a one-stop solution where you can research and purchase products, in what they term a new era of AI commerce and one-click purchasing. With Perplexity’s “Buy with Pro”, consumers can check out with one click after saving their shipping and billing information just once. Perplexity manages this by partnering with Shopify, among others.

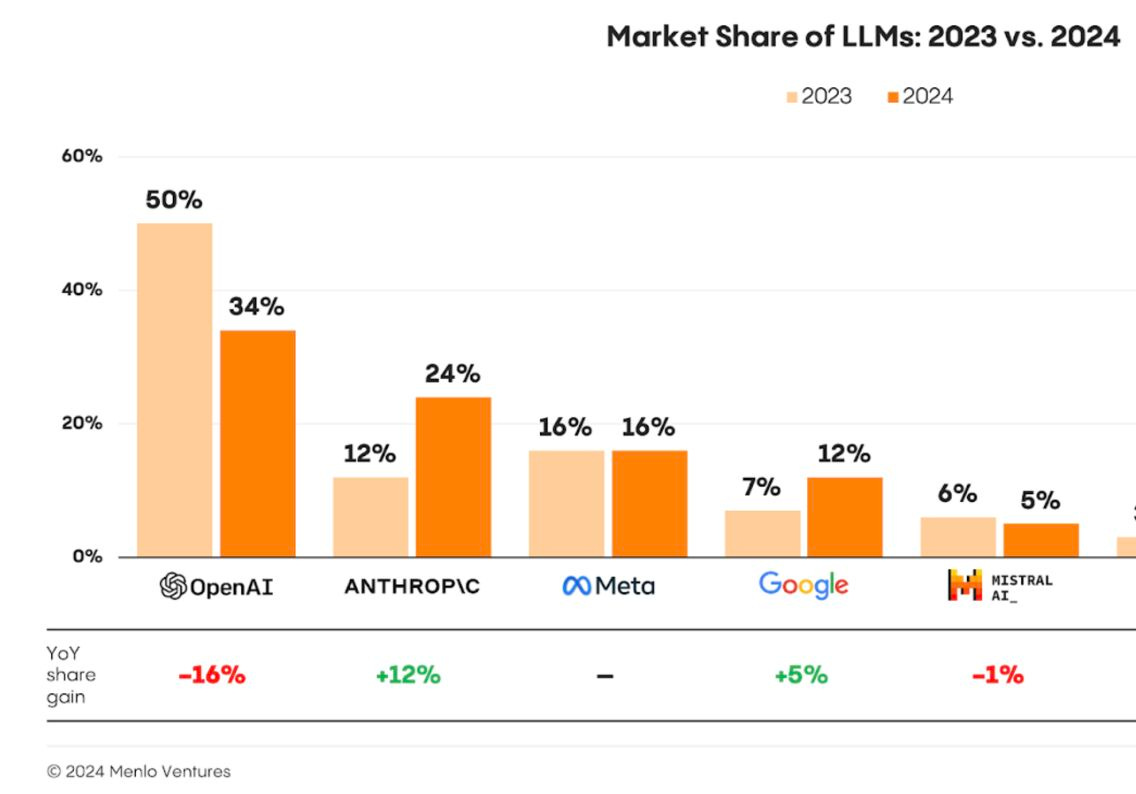

Another trend we are going to see in 2025 is a variety of AI agent frameworks vying for more mainstream adoption among developers. This is a super important race if you believe AI agents have a lot of potential to automate tasks. Meanwhile, on the Enterprise AI level, AI spend has gone from $2.3B to $13.8B in 2024, a 6x increase.

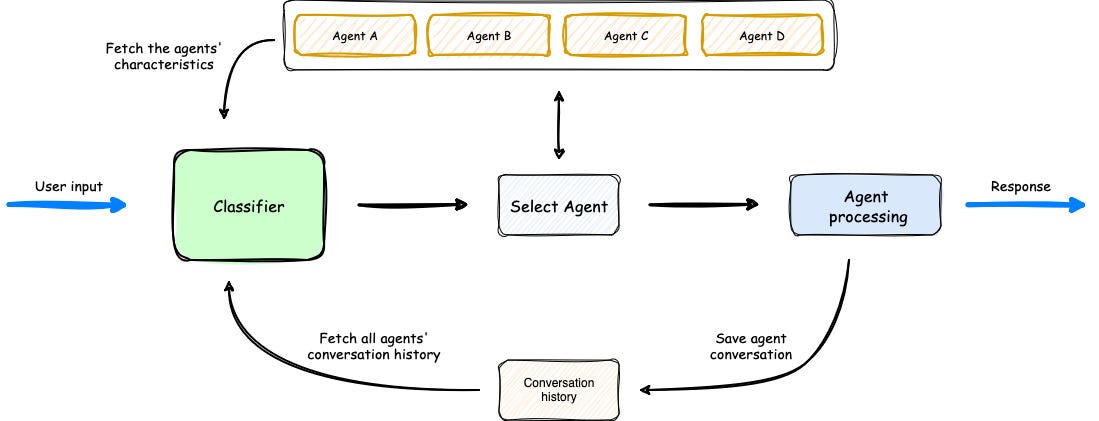

In November 2024, Amazon announced via AWS its AI agent framework, the ‘Multi-Agent Orchestrator’.

The framework routes queries to the most suitable agent, maintains conversational context, and integrates with various environments, including AWS Lambda, local setups, and other cloud platforms. In my view, 2025 will be the year major AI agent frameworks compete for developers globally. As for the general public’s appreciation of what Generative AI can do, the wow-factor of Audio Overviews went viral in September 2024, and that momentum has continued.

NotebookLM emerges in 2024

How NotebookLM (NLM) went mainstream for Google in 2024 is a success story all on its own. NotebookLM, an AI-powered note-taking tool, was developed by a team at Google Labs and was initially introduced as "Project Tailwind" during the Google I/O conference in May 2023. The project was led by Steven Johnson (of the newsletter Adjacent Possible), who has been instrumental in shaping its vision and functionality. Johnson's collaboration with Google aimed to create a tool that enhances the research and ideation processes for users by leveraging AI capabilities to interact with their documents effectively.

When Audio Overviews was released, the synthetic podcasts went viral, creating Google’s own “mini-ChatGPT” moment in the autumn of 2024. I’ve been getting a lot of requests to dive more deeply into this tool, so I asked Alex McFarland of AI Disruptor, who already wrote a hit guide to Perplexity for us, for his insights.

An image of Alex McFarland in Rio de Janeiro, Brazil and the logos of the various AI tools he covers and writes useful guides for.

Let’s now get to the deep dive by our guide Alex McFarland. NotebookLM is certain to keep improving, but it already has some level of customization.

I recommend that you watch the videos; his explanations are clear and full of extra tips.

How to use NotebookLM for personalized knowledge synthesis

When Google released NotebookLM's podcast feature, my inbox exploded with people asking about it. Everyone started creating basic podcast summaries - but they were missing the real power of this tool.

I've spent the last few weeks diving into NotebookLM, discovering workflows that go far beyond simple summarization.

Here's the thing: most people are using NotebookLM like a basic text-to-speech tool. Upload a document, get a summary, done. But that's like using ChatGPT just to fix your grammar - you're barely scratching the surface.

Before we dive into the specific workflows, let's quickly cover what makes NotebookLM fundamentally different from other AI tools:

It stays focused on your sources - unlike ChatGPT, it shouldn’t hallucinate or bring in outside information

It can process multiple documents at once, finding connections between them

It generates natural-sounding podcast discussions about your content

It provides source citations for everything, linking directly to the original text

It's completely free (for now)

The power of NotebookLM isn't in any single feature - it's in how you combine them with the new customization options rolled out in October. This is where things get interesting.

In this guide, I'll show you:

How to use the new advanced audio customization features

Two specific workflows for synthesizing information (research papers and YouTube videos)

Pro tips for maximizing results with any type of content

Common pitfalls to avoid (learned these the hard way)

Let's start by looking at the recent customization updates that make a lot of this possible…

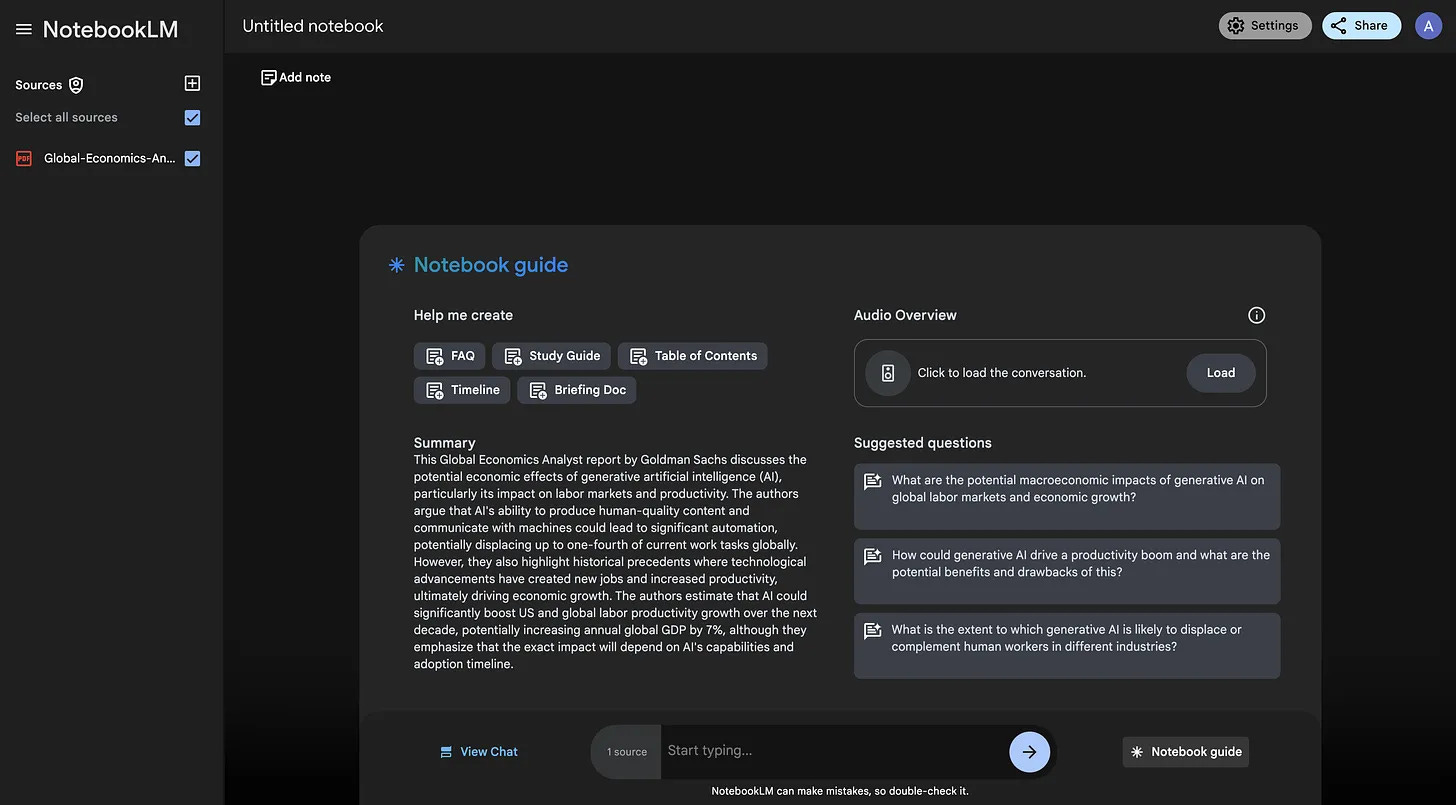

Understanding NotebookLM and its new superpowers

What exactly is NotebookLM? Think of it as your personal AI research assistant that helps you understand and process information. You feed it documents - PDFs, Google Docs, YouTube transcripts, web pages - and it helps you extract insights in multiple ways.

The basic workflow is simple:

Create a notebook (like a digital workspace)

Upload your documents (up to 50 files)

Start interacting with your content through summaries, Q&A, or audio discussions

But here's where it gets interesting: Unlike ChatGPT or other AI tools, NotebookLM stays laser-focused on your sources. It is designed not to make things up or bring in outside information, and everything it tells you is linked directly back to your documents, so you can check any claim against the original text.

What really sets it apart is the Audio Overview feature. Instead of just reading summaries, you get an AI-generated podcast-style discussion about your content. It’s two knowledgeable hosts breaking down complex topics while you work on other tasks.

And this is where the October 2024 update comes in.

The October update transformed NotebookLM from a useful tool into a real AI powerhouse.

Here's what's actually possible now:

Guided conversations that matter

Instead of getting generic overviews, you can now direct the AI hosts to focus on exactly what you care about:

Extract methodology sections from research papers for quick analysis

Focus on implementation details in technical documentation

Pull out specific strategic insights from market reports

Compare different viewpoints across multiple documents

Focus on specific audiences

Pro tip: I always include an expertise level in my instructions. The difference between "explain this to a beginner" and "discuss this at an advanced technical level" is noticeable.

Common mistakes to avoid:

Don't overload with too many documents at once

Avoid overly broad instructions like "tell me everything important"

Don't skip the customization step

Don't forget to specify your audience level (this drastically improves output quality)
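To make the advice above concrete, here is a minimal sketch of a helper that assembles a customization instruction from a few focus points and an audience level. It is only an illustration: the function name `build_instruction` and the sentence template are my own assumptions, and it does not talk to NotebookLM in any way. It simply produces text you can paste into the Audio Overview customization box.

```python
def build_instruction(focus_points, audience):
    """Build text to paste into the customization box.

    Hypothetical helper: the name and wording are illustrative,
    not part of NotebookLM.
    """
    # Drop empty entries so a stray blank line doesn't end up in the prompt.
    points = [p.strip() for p in focus_points if p.strip()]
    if not points:
        # Guards against the "tell me everything important" trap.
        raise ValueError("Give the hosts at least one specific thing to focus on.")
    if not audience.strip():
        # The audience level is the step people most often skip.
        raise ValueError("Specify an audience level.")

    # Join the focus points into a readable phrase: "a, b and c".
    if len(points) == 1:
        focus = points[0]
    else:
        focus = ", ".join(points[:-1]) + " and " + points[-1]

    return f"Focus the discussion on {focus}. Present it for {audience.strip()}."


print(build_instruction(
    ["implementation details", "known limitations"],
    "an advanced technical audience",
))
```

Forcing yourself to fill in both arguments is the whole point: a specific focus and an explicit audience are the two things that most change the output.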

Think of these customization options as your control panel. They're what allow us to transform NotebookLM from a simple document reader into a sophisticated research and learning tool. Now that you understand both the basics and these advanced features, let's dive into our first major use case: transforming how you process research papers…

Quick setup for NotebookLM

This is an explainer video by Alex, one minute and forty-one seconds long.

Transform how you process academic content

If you've ever stared at a stack of research papers wondering how to efficiently process them all, this workflow changed everything for me. I've developed a system that cuts my research time in half while actually improving comprehension.

Let me show you exactly how I process research papers now. I discovered this approach after noticing that traditional academic reading (going front-to-back, taking notes, trying to connect ideas) was painfully inefficient. Even if you feed a paper to an LLM like ChatGPT, the experience is still not as interactive as NotebookLM.

With NotebookLM, we can be much smarter about it.

I start with what I call the "overview phase." I upload a single paper to a fresh notebook and generate a customized Audio Overview. But here's the key: I don't use generic instructions. Instead, I tell NotebookLM exactly what I care about: "Create a discussion that focuses on the key methodology choices, main findings, limitations and gaps, and connections to existing research. Present it for a non-technical audience." This really helps me because, as a writer for several publications, I often cover research papers from the AI and robotics fields.

This gives me a perfect high-level understanding.

Now let’s say you’re not just a writer, but instead an actual researcher or academic.

Once I have this foundation, I move to what I call "deep understanding." This is where NotebookLM really shines. Instead of trying to extract every detail, I focus on what matters. I ask about key assumptions in their methodology, explore alternative approaches they might have considered, and examine how their findings compare to related work. For this, I interact with the text. Forget the Audio Overview here.

But here's where it gets really powerful – the synthesis phase. After processing a few papers in the field, I create what I call a "research synthesis notebook." I upload 2-3 closely related papers and have NotebookLM analyze them together. The instruction here is crucial: "Compare and contrast these papers' approaches and findings. Identify patterns, contradictions, and gaps that could inform future research."

The insights this generates are incredible. NotebookLM finds connections between papers that I might have missed, highlights contradicting results that need investigation, and often reveals promising research directions that aren't obvious when reading papers in isolation.
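The three phases above can be written down as a simple checklist. The sketch below is one way to do that in Python, under a few assumptions: the overview and synthesis prompts are quoted from this guide, the three "deep understanding" questions are my own illustrative wording of the topics mentioned, and the function names and file names are hypothetical. Nothing here calls NotebookLM; it only organizes what you would type in.

```python
# Prompts quoted from the workflow described in this guide.
OVERVIEW_PROMPT = (
    "Create a discussion that focuses on the key methodology choices, "
    "main findings, limitations and gaps, and connections to existing "
    "research. Present it for a non-technical audience."
)
SYNTHESIS_PROMPT = (
    "Compare and contrast these papers' approaches and findings. "
    "Identify patterns, contradictions, and gaps that could inform "
    "future research."
)
# Illustrative wording (an assumption) for the text-based questions.
DEEP_QUESTIONS = [
    "What key assumptions does the methodology rely on?",
    "What alternative approaches could the authors have considered?",
    "How do the findings compare to related work?",
]


def single_paper_steps(paper):
    """Overview (Audio Overview) and deep understanding (text chat) for one paper."""
    return [
        {"phase": "overview", "sources": [paper], "prompts": [OVERVIEW_PROMPT]},
        {"phase": "deep understanding", "sources": [paper], "prompts": list(DEEP_QUESTIONS)},
    ]


def synthesis_step(papers):
    """One synthesis notebook; the guide uses 2-3 closely related papers."""
    if not 2 <= len(papers) <= 3:
        raise ValueError("Use 2-3 closely related papers for a synthesis notebook.")
    return {"phase": "synthesis", "sources": list(papers), "prompts": [SYNTHESIS_PROMPT]}


def research_plan(papers):
    """Process each paper on its own first, then synthesize across them."""
    steps = [step for paper in papers for step in single_paper_steps(paper)]
    steps.append(synthesis_step(papers))
    return steps


for step in research_plan(["paper_a.pdf", "paper_b.pdf"]):
    print(step["phase"], "->", ", ".join(step["sources"]))
```

Keeping the per-paper steps separate from the synthesis step mirrors the order that matters here: build a foundation for each paper before asking for cross-paper comparisons.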

Video guide:

This is an explainer video on paper synthesis by Alex, five minutes and twenty-seven seconds long.

Audio Overview:

Deep dive into any topic through YouTube synthesis

Ever find yourself coming across a lot of interesting YouTube videos on a given topic but not having enough time to watch them all? Who doesn't?

This process is useful for any topic you're trying to master - whether it's understanding AI agents, learning about quantum computing, exploring new marketing strategies, anything. The key is how we combine multiple creator perspectives into a coherent narrative.

Instead of watching videos sequentially, we can upload links from different creators and generate a synthesized discussion that connects all the dots. The magic is in the customization.

Rather than generating a simple overview, you can craft instructions that provide a unique and in-depth perspective: "Create a comprehensive discussion about AI agents, focusing on unique perspectives from each source."

The resulting discussion doesn't just summarize – it finds patterns and connections across creators. Where one person discusses theory, another might show practical applications. When someone raises a challenge, another might offer solutions. These connections are often missed when watching videos in isolation.
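One practical wrinkle: if you collect links over several days, the same video can end up in your list twice under different URL forms. The following is a small sketch, using only Python's standard library, that normalizes the two most common YouTube link shapes and drops duplicates before you add them as sources. The function names and the video IDs in the example are made up for illustration, and other link forms (Shorts, embeds, playlists) are deliberately ignored.

```python
from urllib.parse import urlparse, parse_qs


def video_id(url):
    """Return the video ID for watch?v=... and youtu.be/... links, else None."""
    parsed = urlparse(url.strip())
    host = parsed.netloc.lower()
    if host.startswith("www."):
        host = host[len("www."):]
    if host == "youtu.be":
        # Short links carry the ID in the path: https://youtu.be/<id>
        return parsed.path.lstrip("/") or None
    if host in ("youtube.com", "m.youtube.com") and parsed.path == "/watch":
        # Standard links carry the ID in the "v" query parameter.
        return parse_qs(parsed.query).get("v", [None])[0]
    return None


def unique_videos(urls):
    """Keep the first occurrence of each video, as a canonical watch URL."""
    seen = {}
    for url in urls:
        vid = video_id(url)
        if vid and vid not in seen:
            seen[vid] = f"https://www.youtube.com/watch?v={vid}"
    return list(seen.values())


links = [
    "https://www.youtube.com/watch?v=abc123&t=30s",  # made-up IDs
    "https://youtu.be/abc123",                        # same video, short form
    "https://youtu.be/xyz789?si=foo",
    "https://example.com/watch?v=nope",               # not YouTube, skipped
]
for link in unique_videos(links):
    print(link)
```

With a clean list of distinct videos, an instruction like the one above ("focusing on unique perspectives from each source") has a better chance of surfacing genuinely different viewpoints.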

In my experience, watching a couple of YouTube videos can end up being spread out over multiple days. And by the time I get to them, many more have taken their place.

A process that would typically take days of watching and note-taking becomes a focused period of high-quality learning. Whether you're trying to understand AI agents for your next project or diving into any other complex topic, this workflow turns scattered video content into clear, actionable knowledge.

Video guide:

This is an explainer video on the synthesis of YouTube videos by Alex, five minutes and forty-nine seconds long.

Audio Overview:

Why I focused on these two workflows (and why you should go deeper)

I've found these two workflows - research paper processing and YouTube synthesis - to be two of the most useful for most people at this point. They solve real problems we all face: how to efficiently process complex information and how to learn from scattered content.

What makes these workflows special is their flexibility. The same principles we used for research papers can be applied to industry reports, technical documentation, or any complex text. The YouTube synthesis approach works just as well for conference talks, interviews, or training videos.

But here's the thing - what I've shown you is just the beginning. My goal is to inspire you to dive deeper and experiment on your own. The workflows I demonstrated use 2-3 documents or videos, but you could easily scale this to 10-20 sources. I barely touched on the text-based interactions, which could transform how researchers interact with their sources, essentially creating a personalized AI research assistant.

The key to staying ahead with AI tools isn't just following tutorials - it's diving in and experimenting. Maybe you'll discover that combining YouTube transcripts with related research papers creates incredibly rich learning experiences. Or perhaps you'll find ways to use the customization features I haven't even thought of.

That's what excites me about tools like NotebookLM. Everyone who uses it will discover their own unique ways to make it valuable. The workflows I've shared aren't meant to be rigid templates - they're starting points for your own exploration.

If you're ready to get started, the video guides will walk you through the foundations. But don't stop there. Push the boundaries, try new combinations, experiment with different instructions. That's how you'll uncover workflows that perfectly match your needs and keep you ahead of the curve.

And tell me after, because I want to learn.

Editor’s Note:

Prefer to read the guide in dark mode? That’s possible too.

For more guides like this, check out the newsletter of our guest author and guide: https://aidisruptor.ai/

If you want to read Nick's guide from the end of September, written before the customization features came out, you can find it here: https://www.ai-supremacy.com/p/googles-notebooklm-a-game-changer