How Thinking Machines Lab Just Made History

As capex climbs, so does the price of entry. The bar for AI talent is high, and Mira Murati is the honeypot. 🍯

The huge talent exodus from OpenAI in recent years spawned Anthropic and many other AI startups. But we have to mention the main offshoot, led by OpenAI’s former CTO, Mira Murati. There is more here than meets the eye; let me try to explain.

Thinking Machines Lab officially closed a $2B seed round at a $12B valuation – the largest seed round in VC history and 4x bigger than any previous seed funding. The era of Gen AI calls for moonshots, and Thinky is unusual in many regards.

In a world where women in tech struggle to become CEOs, or even to get funding from mostly male VCs, Mira Murati is the exception. She breaks all the rules, and from Albania to Silicon Valley, we are witnessing a special moment in history. 🏹

The project’s scope also extends to open-source LLMs, a vast wasteland in the West where the likes of Mistral, Together AI, Ai2 and a few others huddle with Hugging Face around the campfires of open-weight innovation, hoping for better days.

Well, it’s official: Thinking Machines Lab has raised $2 billion in fresh capital, the largest seed round in venture capital history, for a company that has been around for just six months, has yet to ship a single product and has not even revealed what it’s working on. But don’t let that fool you!

I’m passionate about AI startups and their potential, but even this story is hard to fathom at the peak of the U.S. AI bubble, where even the Pentagon wants in on the action. Dig a little deeper, though, and it starts to make more sense.

Investors include a16z, Nvidia, Accel, Cisco, AMD and Jane Street.

Murati has said that the company’s first multimodal, open-source-friendly product will land “within months.”

Here is a startup backed by both U.S. leaders in AI chips, Nvidia and AMD, with a16z making a classic moonshot bet.

The quality of the AI talent at Thinking Machines Lab is likely to exceed that of Google DeepMind or OpenAI, approached only by Anthropic. That is because we are living in the OpenAI mafia era, where splinter projects become more attractive both for doing pioneering work and for the financial incentives.

Thinking Machines Lab represents yet another “pivot” in the failed mission of OpenAI. While Google, xAI, Meta Superintelligence Lab and even Anthropic mirror the tactics and behavior of OpenAI, here we have another fresh start: OpenAI vets thinking and planning for a do-over. If OpenAI was founded out of fear and envy of Google DeepMind, a lot has changed in just a few years. The Thinking Machines team knows it can do better, and that LLMs were never supposed to be just people-pleasing, token-gulping, sycophancy-heavy chatbots for the machine economy.

The Product is a Mystery

Murati pulled back the curtain a little on the company’s first product in a post on X on July 15, 2025, saying the startup plans to unveil its work in the “next couple months” and that it will include a “significant open source offering.”

China has overtaken the U.S. in open-weight models, and this is a bizarre period in the evolution of LLMs, with new frontier models landing nearly every month or, at most, every six weeks.

Amid OpenAI’s controversial past, Mira Murati is a recognisable face. She rocketed into the spotlight in 2023 when she was named interim CEO of OpenAI after Sam Altman was briefly ousted by the company’s board. It was a complicated period: ChatGPT went on to become synonymous with AI, and only Anthropic, itself a splinter startup of OpenAI’s original team, seemed able to keep pace. So now we have a third player in the game.

This is Talent

So far, the team comprises about 30 researchers and engineers, roughly two-thirds of them former OpenAI employees, alongside talent from Meta AI, Mistral AI, Character.AI, and Google DeepMind. When a mission goes south as it did at OpenAI, what phoenix rises from the ashes of manipulation and mismanagement (not to mention stringent NDAs and the contested death of a former researcher, officially ruled a suicide)? Some of these names might sound familiar to you:

Mira Murati, CEO and founder, formerly OpenAI's Chief Technology Officer, instrumental in developing ChatGPT, DALL-E, and voice models.

John Schulman, Chief Scientist, an OpenAI co-founder who helped build ChatGPT and, more recently, worked on AI alignment at Anthropic.

Barret Zoph, Chief Technology Officer, former OpenAI Vice President of Research, who left OpenAI alongside Murati.

Jonathan Lachman, former head of special projects at OpenAI, now a key member of the founding team.

Lilian Weng, a co-founder with a background in AI safety and robotics at OpenAI.

Alexander Kirillov, former multimodal research head at OpenAI, now part of the leadership team.

Andrew Tulloch, with expertise in pretraining and reasoning from his time at OpenAI.

Luke Metz, a research engineer with experience in post-training at OpenAI.

Devendra Chaplot, a founding team member, previously involved in AI research.

Myle Ott, another founding team member with a strong research background.

In some ways, Thinking Machines Lab is more OpenAI than OpenAI itself is today, and it may need to take up that original mission and fulfil it with greater promise. These are some of the people who watched OpenAI become almost unrecognizable after just a few short years of product and commercial pressure.

If I had to distill the key values or framing of this startup from its website, I’d list:

Science

Open-Source

Democratization of AI

Explainability of frontier AI systems

Customizable models at scale

You will notice the mission statement is less ideology and more pragmatic action: less Sam Altman-Ilya Sutskever cult-speak and more holistic alignment.

A $12 billion valuation for a startup that has yet to formally exist as a product company is unheard of, and it’s the biggest moonshot we’ve seen in the AI space. I’d argue it’s also more important than Meta Superintelligence Lab trying to poach talent from OpenAI, Apple and xAI in some reckless act of tycoon envy, otherwise known as a day in the life of Mark Zuckerberg.

Thinking Machines isn’t a long shot; it’s the highest average concentration of AI talent we’ve ever seen in a team this small, higher than DeepMind in its early days. It is led by a woman, and that’s fundamentally important when building and aligning an early team. These are OpenAI vets who have seen the good and bad of what power and influence can do to a rising AI startup. They have witnessed Sam Altman’s paranoia and his bizarre attempts to indoctrinate people into a mission he doesn’t even truly believe in. Mira Murati isn’t Sam Altman, and it matters.

Thinking Machines Lab - Ghost in the Shell

Science is better when shared

Scientific progress is a collective effort. We believe that we'll most effectively advance humanity's understanding of AI by collaborating with the wider community of researchers and builders. We plan to frequently publish technical blog posts, papers, and code. We think sharing our work will not only benefit the public, but also improve our own research culture.

AI that works for everyone

Emphasis on human-AI collaboration. Instead of focusing solely on making fully autonomous AI systems, we are excited to build multimodal systems that work with people collaboratively.

More flexible, adaptable, and personalized AI systems. We see enormous potential for AI to help in every field of work. While current systems excel at programming and mathematics, we're building AI that can adapt to the full spectrum of human expertise and enable a broader spectrum of applications.

Solid foundations matter

Model intelligence as the cornerstone. In addition to our emphasis on human-AI collaboration and customization, model intelligence is crucial and we are building models at the frontier of capabilities in domains like science and programming. Ultimately, the most advanced models will unlock the most transformative applications and benefits, such as enabling novel scientific discoveries and engineering breakthroughs.

Infrastructure quality as a top priority. Research productivity is paramount and heavily depends on the reliability, efficiency, and ease of use of infrastructure. We aim to build things correctly for the long haul, to maximize both productivity and security, rather than taking shortcuts.

Advanced multimodal capabilities. We see multimodality as critical to enabling more natural and efficient communication, preserving more information, better capturing intent, and supporting deeper integration into real-world environments.

Learning by doing

Research and product co-design. Products enable iterative learning through deployment, while great products and research strengthen each other. Products keep us grounded in reality and guide us to solve the most impactful problems.

Empirical and iterative approach to AI safety. The most effective safety measures come from a combination of proactive research and careful real-world testing. We plan to contribute to AI safety by (1) maintaining a high safety bar--preventing misuse of our released models while maximizing users' freedom, (2) sharing best practices and recipes for how to build safe AI systems with the industry, and (3) accelerating external research on alignment by sharing code, datasets, and model specs. We believe that methods developed for present day systems, such as effective red-teaming and post-deployment monitoring, provide valuable insights that will extend to future, more capable systems.

Measure what truly matters. We'll focus on understanding how our systems create genuine value in the real world. The most important breakthroughs often come from rethinking our objectives, not just optimizing existing metrics.

This is a research lab unlike any other on the planet, perhaps even a radical departure from commercial norms, much like the one DeepSeek brought us six months ago.

These are also seasoned machine learning researchers who understand the weight of responsibility that comes with building LLMs, and who grasp their impact on the world like few other people on the planet.

A Little Bit More “Thinky” in Our Life

The startup’s pet name on X is quickly becoming “Thinky.” It appears to have a more action-biased plan than SSI (Safe Superintelligence), the other mega “AGI startup” in the OpenAI mafia league.

Mira Murati has been working diligently: she left OpenAI in September 2024 and launched Thinking Machines in February 2025, though she has not shared many details about the startup publicly.

Corporate backers like ServiceNow and Cisco are a surprise, as was the $12 billion valuation, rather than the $10 billion reported earlier. This is a project many VCs wanted into, even at an astronomical valuation. Consider how exceptional the team’s merits must be: the average seed round for AI startups in 2024 was approximately $3.5 million, according to industry analyses. This team managed to raise $2 billion, more than 500 times that figure.

The startup is clearly working on some “big” thinking machines. With OpenAI delaying its own open-weight model, the work Thinking Machines is doing behind the scenes carries even more weight. And with Meta reportedly moving toward closed models, a huge void opens in the ecosystem as Chinese models take the crown on open-weight LLM rankings and leaderboards.

“We believe AI should serve as an extension of individual agency and, in the spirit of freedom, be distributed as widely and equitably as possible,” Murati wrote. “We hope this vision resonates with those who share our commitment to advancing the field.”

Some of these PR statements certainly sound like something an open-source startup, or at least an open-weight model maker, would say. Bloomberg’s Mark Gurman floating Thinking Machines Lab as a potential Apple acquisition target strikes me as pretty far-fetched.

AI explainability truly matters for the trust, security and accountability of these systems:

“Soon, we’ll also share our best science to help the research community better understand frontier AI systems,” said Murati on X.

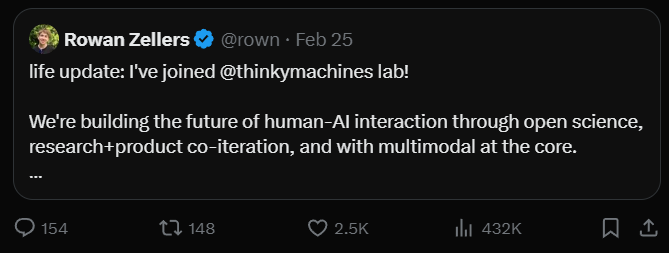

In addition to Meta Superintelligence Lab poaching several core members of OpenAI’s team, there’s a continued exodus from OpenAI to Thinking Machines too.

What does “Open Science” even look like in the future of America? 🤔

Open science

Rapid iteration

Co-design and collaborative principles

Focus on real-world deployments

Frontier capabilities with native multimodality

Since Murati launched her venture, Thinking Machines Lab has attracted some of her former co-workers at OpenAI, including John Schulman, Barret Zoph (CTO), and Luke Metz. These were among the most senior people at OpenAI, and among the most humble: people who take the science of research seriously.

For a field as young as generative AI and LLMs, the combined experience of Thinking Machines Lab’s founding researchers is off the charts. As the talent wars have shown, experience is at a premium.