How China Built a Parallel AI Chip Universe in 18 Months

The AI arms race is pivoting fast. Could de-Nvidification soon threaten U.S. market dominance?


Good Morning,

In October 2022, the U.S. decided it was a good idea to impose export controls on advanced semiconductors and AI chips bound for China. Three years later, in 2025, it would appear that these controls became a catalyst for China to accelerate its own semiconductor supply chain and AI chip startups as rapidly as possible.

This could turn out to be the biggest strategic mistake in AI policy to date, if I’m not mistaken. While there are now plenty of newsletters on China, trade, geopolitics and technology, it’s sometimes good to get an insider’s analysis. I asked Poe Zhao (formerly of TechTarget, Synced, and others), an analyst in Beijing, to look into this in greater detail.

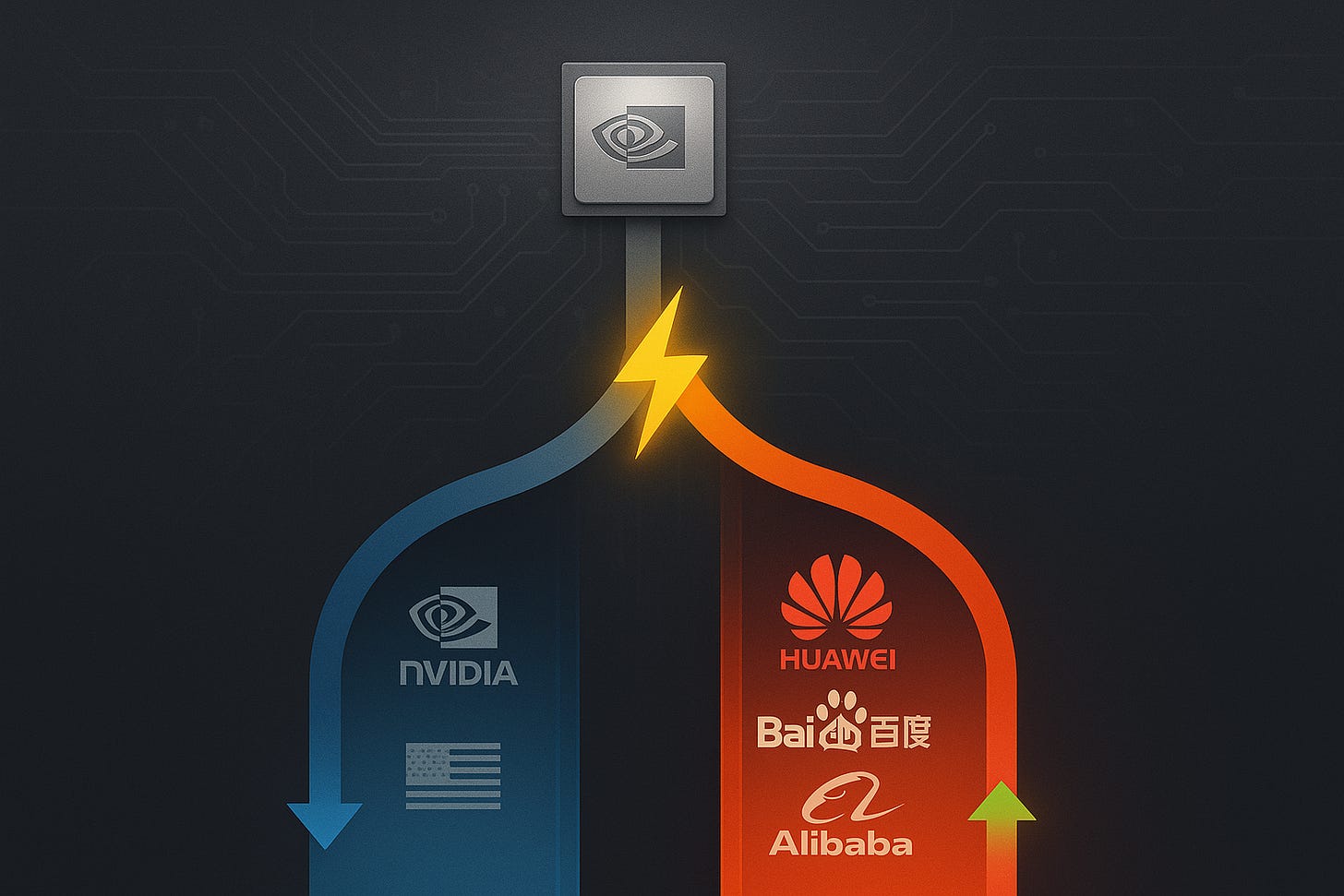

At a time of trade uncertainty and an AI boom, this topic is a major turning point for the future of AI. The Biden administration’s Bureau of Industry and Security (BIS) enacted immediate rules targeting advanced computing chips that took effect October 21st, 2022. Now, a full three years later, it appears Nvidia’s AI chips have no future in China (easily the world’s second-biggest AI market).

I think Jensen Huang understands the situation very well. Speaking at a Citadel Securities event on October 6, 2025, the Nvidia CEO blamed U.S. export controls and said the company now assumes no revenue from China in its forecasts.

“We are 100% out of China. We went from 95% market share to 0%. I can’t imagine any policymaker thinking that’s a good idea… whatever policy we implemented caused America to lose one of the largest markets in the world to 0%.” - Jensen Huang, Nvidia CEO.

Nvidia’s market share in China might end up being zero percent

Not so long ago, China accounted for 20% to 25% of Nvidia’s data center revenue. As the AI arms race heats up, China’s market for AI chips is likely to grow fast as the country accelerates its AI infrastructure build-out on top of a superior energy stack, especially in renewables.

With U.S.-China trade tensions in full force, China doesn’t just have a dominant physical AI and robotics automation sector and a world-leading open-model ecosystem with implications for agentic AI products; it also has what Kyle Chan calls an “emerging export control regime”.

Trade | Geopolitics | Energy | AI | The Future | Semiconductor Stacks

Nvidia has held a commanding lead, close to a monopoly, in AI chips over the last few years, but I think it’s wise to listen carefully to Jensen Huang’s recent interviews to get a sense of the magnitude of the situation. Huang said in early October 2025 that the U.S. is “not far ahead” of China in the artificial intelligence race, and that the U.S. needs a “nuanced strategy” to stay on higher ground.

China’s Energy, Renewable and Infrastructure Prowess could lead to AI domination

As for China’s energy advantage in the AI infrastructure build-out to come, China generated a total of roughly 10,000 terawatt-hours (10 quadrillion watt-hours) of electricity in 2024, more than double U.S. output, according to the Energy Institute.

Few China tech analysts in recent weeks have impressed me as much as Poe Zhao, with his detailed coverage and capacity for deep synthesis.


Selected Works

Poe Zhao’s ability to tease out details that many Western analysts, and even other China tech analysts, miss is truly notable.

China’s Answer to Silicon Valley: Alibaba’s $53 Billion Challenge to Big Tech

The Billion-Dollar Bonfire: China’s AI War and the Creation of the “Do-Engine”

Poe Zhao is a Beijing-based technology analyst who builds rigorous, bilingual explanations of China’s tech ecosystem for global readers. He founded Dailyio (Chinese) and Hello China Tech (English) to map how China’s AI, robotics, semiconductors and EVs actually scale—from policy design and state capital, to supply-chain execution and market adoption. His editorial approach favors verifiable signals over hype: structural data points, filings, and strategy moves reframed into a coherent narrative of “parallel development,” not copycat catch-up.

Hello China Tech

Decoding China tech for global minds. China is quietly reshaping the future of AI, electric vehicles, robotics, and semiconductors. Poe Zhao, who has been doing this for 12 years, expertly bridges the gap between China’s rapidly evolving tech ecosystem and the global community. (Read the coverage in Mandarin).

How China Built a Parallel AI Chip Universe in 18 Months

October, 2025 by Poe Zhao.

Nvidia lost $2.5 billion in quarterly revenue from China and faces continued market pressure. That’s just the beginning.

Why This Isn’t About Nationalism (It’s About Money)

The phrase “去英伟达化” (de-Nvidification) has become ubiquitous in Chinese tech circles. But it risks obscuring what’s actually happening.

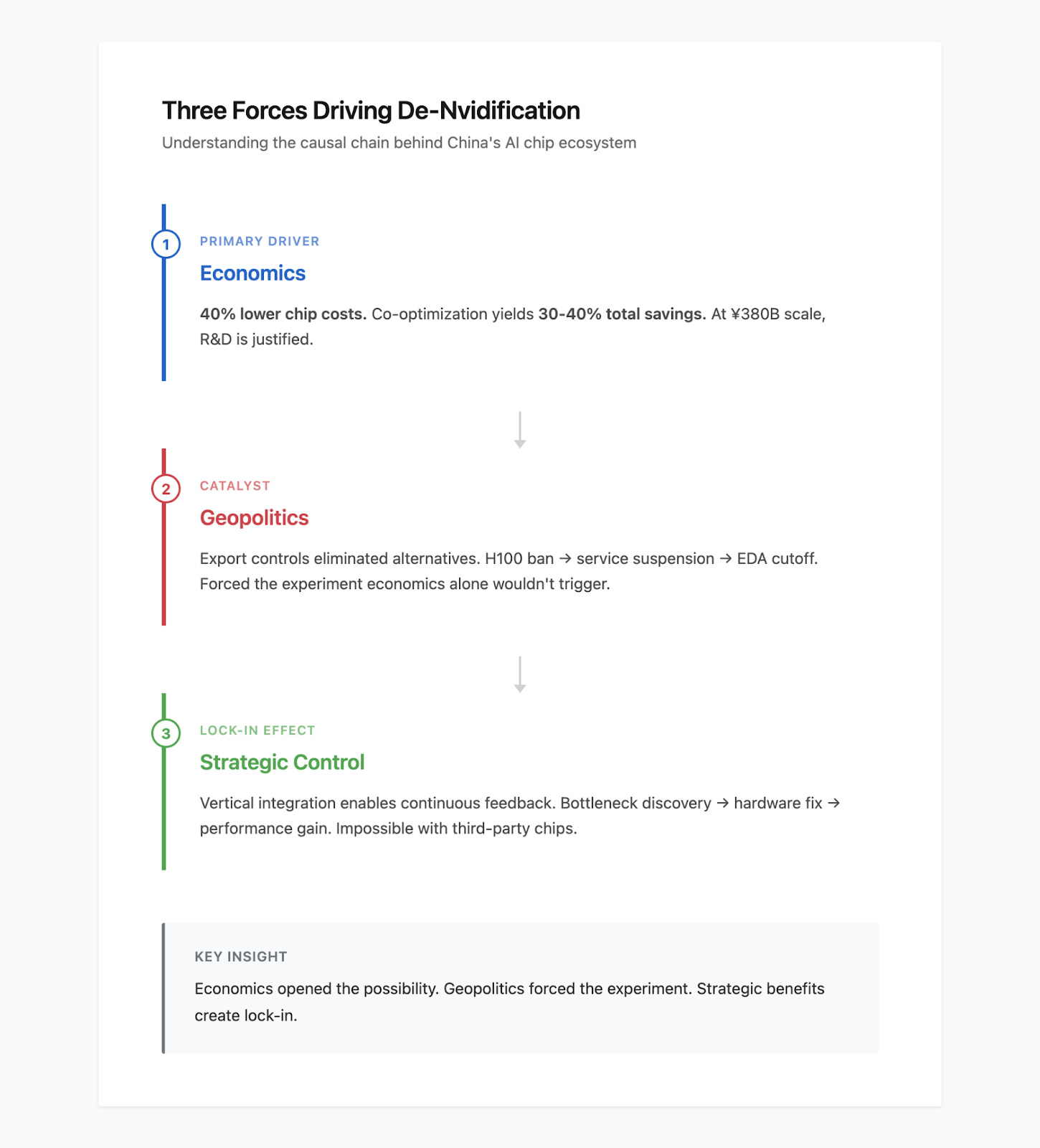

Three forces are at work. Distinguishing between them matters.

Economics is the primary driver, not geopolitics.

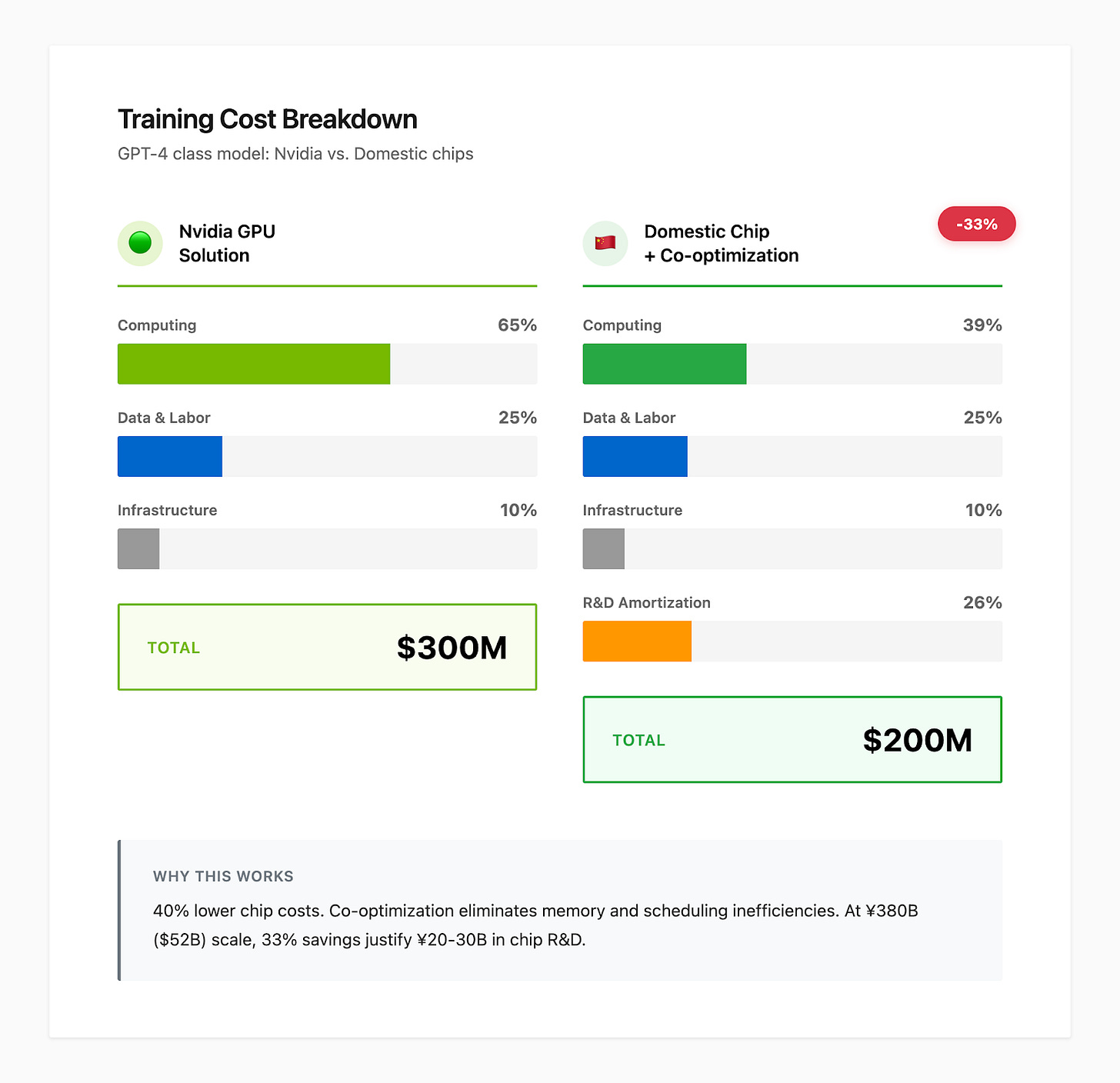

Here’s the business case. Training GPT-4-class models costs hundreds of millions of dollars, and computing represents 60-70% of those expenses. Chinese companies evaluating a switch of core model training to domestic chips cite substantial potential cost savings, combining lower chip unit costs with software-hardware co-optimization gains. At that point, the business case becomes compelling.

When Alibaba is committing 380 billion yuan to AI infrastructure over three years, even 30-40% savings justify billions in chip R&D. This is cold calculation, not patriotic fervor.

Geopolitics is the catalyst, not the cause. 🌍

U.S. export controls didn’t create the economic incentive. They eliminated the option of continuing with existing suppliers. The progression tells the story: restricting H100 GPUs in 2022, temporarily suspending Nvidia’s chip support services in early 2025, and critically, three major EDA providers (Synopsys, Cadence, Siemens) collectively pausing software support in May 2025.

When the tools to design advanced chips became unavailable, Chinese companies faced a binary choice: accept permanent technological subordination or invest massively in alternatives.

Here’s the crucial distinction: geopolitics didn’t make domestic chips economically attractive. It made experimenting with them necessary. Without export controls, most Chinese companies would have continued optimizing within Nvidia’s ecosystem. They knew alternatives might offer better long-term economics. But the disruption forced the experiment that economic logic alone wouldn’t have triggered.

Strategic control creates compounding advantages.

Once companies invest billions in vertical integration–designing chips, building frameworks, deploying at scale–they’re not just buying computing hardware. They’re constructing platforms that capture long-term value. The optimization is continuous. It’s impossible with third-party purchases.

When Huawei’s MindSpore team discovers performance bottlenecks, they can request Ascend hardware modifications. When Baidu identifies that certain transformer operations dominate LLM inference, Kunlun’s next generation adds specialized accelerators. This feedback loop creates compounding advantages over time.

Understanding this causal chain matters.

If geopolitics were primary, we’d expect Chinese chips to be adopted despite economic disadvantages, with performance gaps tolerated for the sake of supply security. If economics were everything, companies would have pursued domestic chips earlier, as soon as it was technically feasible.

The reality: economic logic opened the possibility, geopolitical pressure forced the experiment, and strategic benefits are now creating lock-in. This isn’t nationalism. It’s calculated adaptation to changed constraints.

Three 2025 breakthroughs illustrate this dynamic crystallizing:

Cambricon Technologies | Baidu | Alibaba

Cambricon Technologies reported H1 2025 revenue of 2.88 billion yuan. That’s a 4,347% year-over-year increase. This wasn’t gradual adoption. Customers dependent on Nvidia suddenly needed alternatives. They found Cambricon’s Siyuan 370 chips offered acceptable performance for specific workloads at lower cost.
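To get a feel for what a 4,347% increase means, the short sketch below backs out the prior-year base implied by the two figures quoted above. The function name is illustrative, and the result is only as precise as the rounded inputs.

```python
def implied_prior_revenue(current: float, yoy_increase_pct: float) -> float:
    """Back out the prior-period value from the current value and a
    year-over-year increase expressed in percent."""
    return current / (1 + yoy_increase_pct / 100)


h1_2025_revenue_bn_yuan = 2.88  # figure quoted in the text
yoy_increase_pct = 4347         # figure quoted in the text

h1_2024_bn = implied_prior_revenue(h1_2025_revenue_bn_yuan, yoy_increase_pct)
multiple = h1_2025_revenue_bn_yuan / h1_2024_bn

print(f"Implied H1 2024 revenue: ~{h1_2024_bn * 1000:.0f} million yuan")
print(f"Growth multiple: ~{multiple:.1f}x")
```

On these inputs, the implied H1 2024 base is in the tens of millions of yuan, so the headline percentage reflects a very small starting point as much as a large finish.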

Baidu deployed a 30,000-card Kunlun P800 cluster, making it the first Chinese company to operate domestic AI accelerators at hyperscale for LLM training. More telling: Baidu’s Intelligent Cloud won a 1 billion yuan China Mobile contract for AI inference servers. Kunlun chips captured all three sub-packages in the “CUDA-like ecosystem” category.

When state-owned enterprises make large-scale procurement based on technical validation rather than policy directives, a threshold has been crossed.

CCTV footage in September 2025 inadvertently revealed Alibaba’s new training chip, codenamed PPU, which uses a domestic 7nm process and 2.5D chiplet packaging. Its specifications reportedly match the H100 at roughly 40% lower cost. When a tech giant commits 380 billion yuan to AI infrastructure, it is betting on domestic chips as long-term foundations, not stopgaps.

But here’s the critical distinction that separates hype from reality.

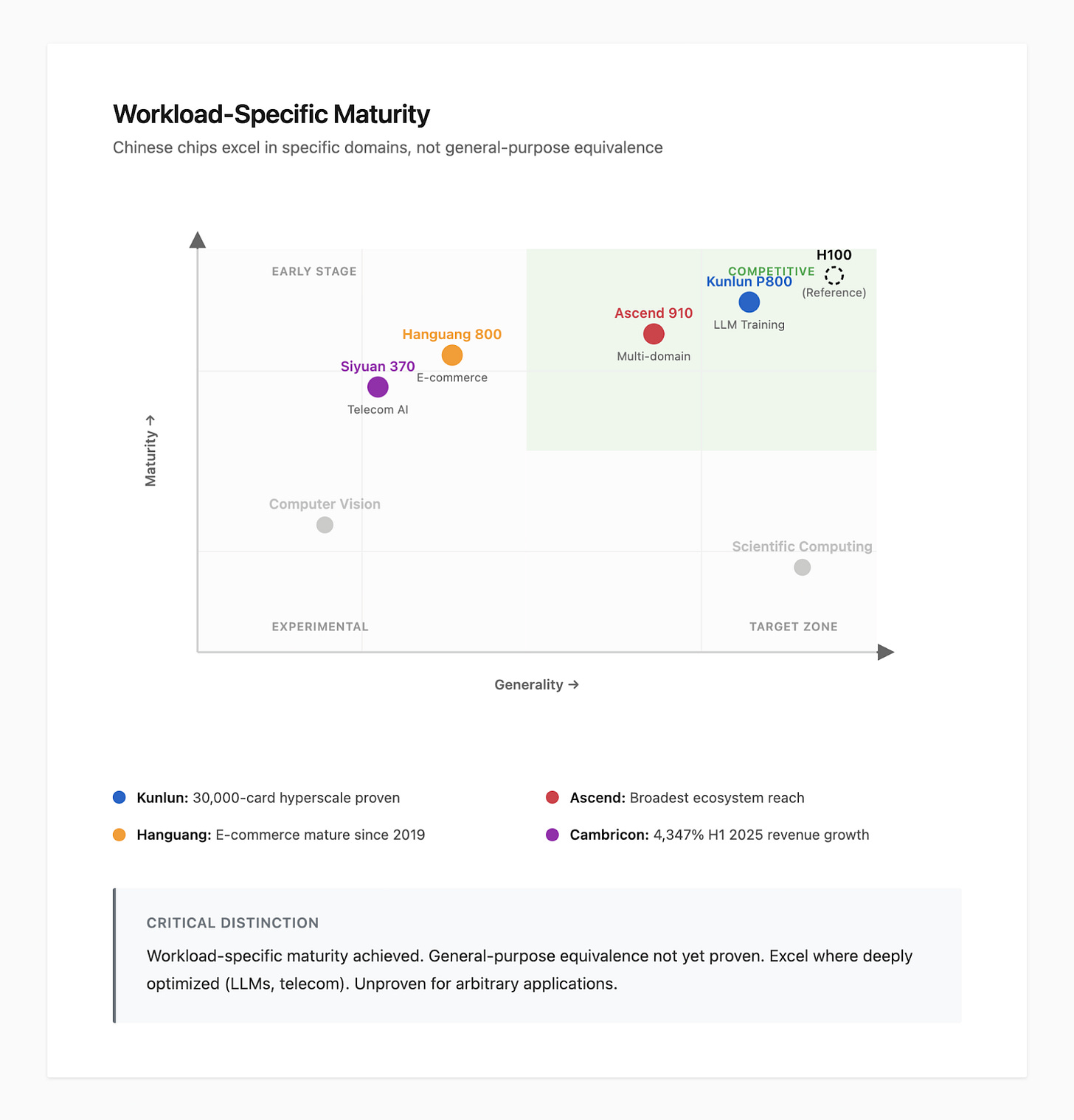

Chinese AI chips are achieving workload-specific maturity rather than general-purpose equivalence. Baidu’s Kunlun cluster is proven for LLM training. But it’s not yet validated across computer vision, reinforcement learning, or scientific computing. China Mobile’s procurement validated telecom-specific AI workloads such as intelligent customer service and network optimization, not arbitrary applications.

This gap between narrow competence and broad capability is central to understanding both genuine progress and remaining limitations. It’s the difference between “we can do this specific thing well” and “we can do anything you might want.”

Figure 3: Workload-specific maturity landscape. Chinese chips excel in deeply optimized domains (Kunlun for LLM training, Cambricon for telecom AI) but remain unproven for general-purpose applications. This distinction separates genuine competitiveness from aspirational claims.

Why Chinese Tech Giants Are Building Everything In-House

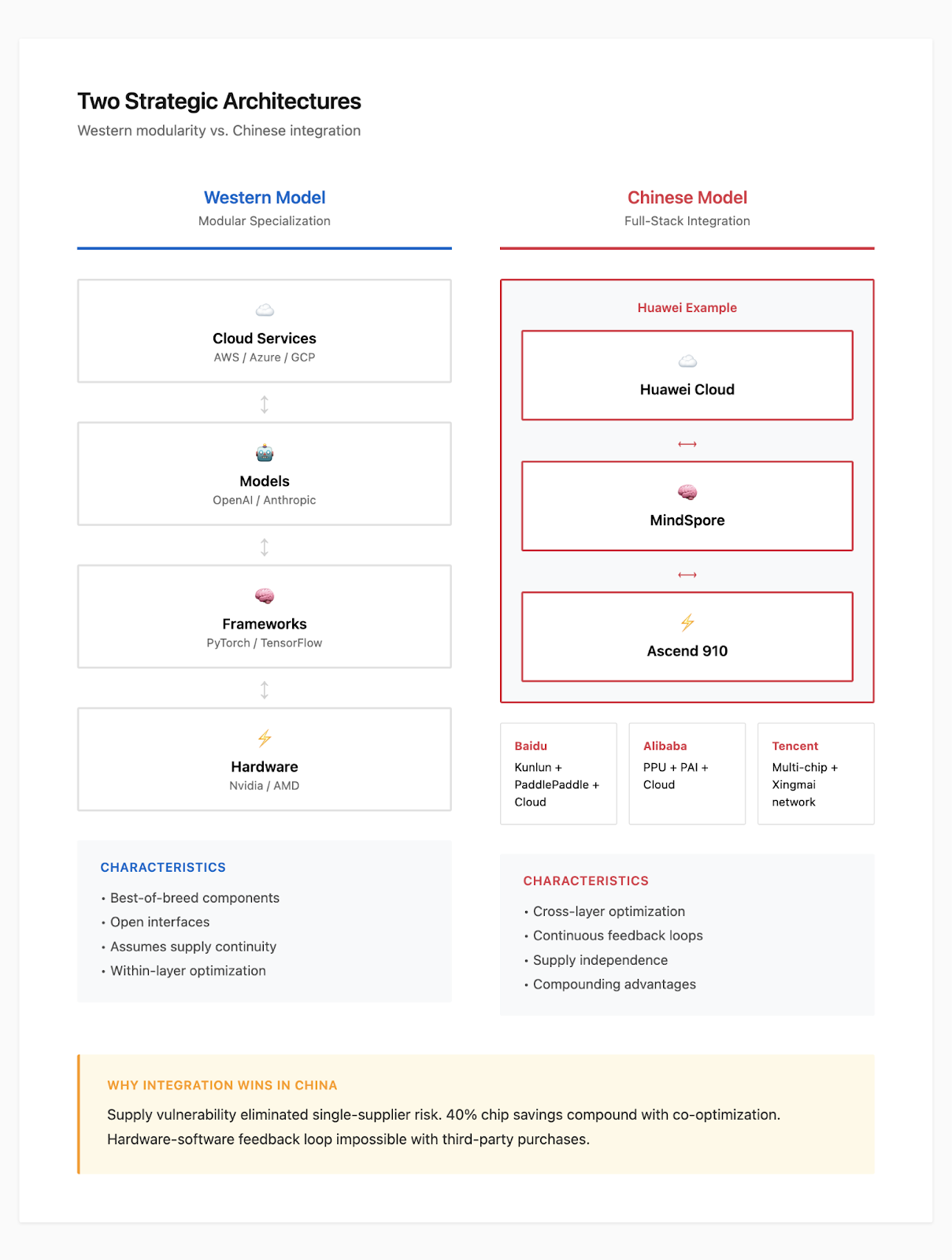

Perhaps the most striking pattern–the one that most clearly differentiates China’s approach from Western models–is vertical integration everywhere.

Tech giants build complete stacks from silicon to cloud services. Contrast this with Western specialization: Nvidia provides chips, OpenAI builds models, and AWS, Azure and GCP offer cloud infrastructure, all tied together through modular collaboration.

Figure 4: Two strategic architectures. Western model relies on modular collaboration with best-of-breed specialists. Chinese model pursues full-stack vertical integration driven by supply vulnerability, cost compounding, and continuous optimization advantages.

Why does vertical integration dominate in China but not the West?

Supply chain vulnerability made single-supplier dependence existentially risky.

When Nvidia’s quarterly China revenue drops 50% because of supply restrictions rather than weaker demand, continuity risk becomes impossible to ignore. Western companies can assume continued access to best-of-breed components. Chinese companies cannot.

Cost advantages compound at scale.

The 40% chip unit cost advantage multiplies when combined with framework-level optimizations. Baidu’s claimed 80% total cost reduction comes from both cheaper hardware and software-hardware co-design. The co-design eliminates inefficiencies in memory management, operator scheduling, and distributed communication that generic GPUs cannot address.
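A rough way to see how the two effects compound is to assume total cost equals unit price times the chip-hours a workload needs. That multiplicative model is a simplifying assumption, not something the figures above spell out, and the function name is illustrative. Under it, the sketch below backs out how much work co-design has to remove for a 40% price advantage to become an 80% total reduction.

```python
def implied_software_factor(hardware_discount: float, total_reduction: float) -> float:
    """Fraction of baseline chip-hours still needed after co-design,
    assuming total cost = unit price x chip-hours (a simplification)."""
    remaining_total = 1 - total_reduction    # share of the original bill left
    remaining_price = 1 - hardware_discount  # share of the original unit price left
    return remaining_total / remaining_price


factor = implied_software_factor(hardware_discount=0.40, total_reduction=0.80)

print(f"Chip-hours still needed: {factor:.0%} of baseline")
print(f"Implied efficiency gain from co-design: {1 / factor:.1f}x")
```

Under this assumption, hardware pricing alone accounts for only part of the claimed saving; the larger share would have to come from the software-hardware co-design, which needs to deliver roughly a threefold efficiency gain.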

A company spending $50+ billion annually on AI infrastructure can justify $2-3 billion in chip development if it yields 30-40% ongoing savings. Smaller companies cannot, which is why independent chip designers like Cambricon focus on selling to customers that lack the scale for vertical integration.
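The scale argument can be made concrete with a back-of-the-envelope payback calculation. This is a sketch under loud assumptions: the savings rate is applied to the entire infrastructure budget (optimistic, since only part of that budget is chips), the development cost is treated as a one-off, and the function name is illustrative.

```python
def payback_months(annual_spend: float, savings_rate: float, dev_cost: float) -> float:
    """Months of savings needed to recover a one-off chip development cost.
    Assumes the savings rate applies to the whole annual spend."""
    annual_savings = annual_spend * savings_rate
    return 12 * dev_cost / annual_savings


annual_spend_bn = 50.0  # lower bound of "$50+ billion annually" from the text

for savings_rate in (0.30, 0.40):
    for dev_cost_bn in (2.0, 3.0):
        months = payback_months(annual_spend_bn, savings_rate, dev_cost_bn)
        print(f"savings {savings_rate:.0%}, R&D ${dev_cost_bn:.0f}B "
              f"-> payback ~{months:.1f} months")
```

With these inputs the development cost is recovered within a few months. Rerunning the same function with a hypothetical annual spend of $1 billion stretches the payback to somewhere between five and ten years, which is the gap between the giants and everyone else.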

Ecosystem control enables continuous optimization. This creates compounding advantages impossible with third-party purchases.

Three distinct strategic approaches have emerged:

Huawei’s Ascend ecosystem represents the most ambitious vertical integration.

Huawei designs the Ascend 910 chips (256 TFLOPS at 7nm), develops the MindSpore framework, and operates Huawei Cloud as an integrated platform. The ecosystem gained significant market share by late 2024. The announced three-year roadmap shows next-generation Ascend 950 chips incorporating FP8 precision, heterogeneous architecture, and a proprietary high-speed interconnect called “Lingqu” (灵衢) to enable million-card scale clusters.

Huawei’s bet: parallel ecosystem development rather than CUDA emulation. MindSpore introduces its own programming model optimized for distributed training and automatic parallelization. The assumption: if Ascend deployment reaches sufficient scale domestically, developers will learn MindSpore through necessity, just as they once learned CUDA.

This trades international portability for domestic optimization. It’s viable within China’s large protected market, but it faces challenges for global adoption.

Baidu’s approach emphasizes compatibility layers to reduce switching costs.

Kunlun P800 achieves competitive specifications. PaddlePaddle maintains PyTorch and TensorFlow interfaces, so researchers can port models with minimal code changes, then optionally use Kunlun-specific APIs for performance tuning. The tradeoff: engineering complexity. Maintaining multiple frontend interfaces while optimizing backends demands massive resources.

Baidu’s advantage is demonstrable maturity in the specific domain of LLM training and inference. The company has operated PaddlePaddle since 2016 and has trained successive generations of ERNIE models. When announcing its 30,000-card Kunlun deployment, Baidu wasn’t launching untested hardware. It was commercializing infrastructure already supporting production workloads internally.

The China Mobile contract validated that this narrow but deep capability was sufficient for specific enterprise use cases.

Alibaba’s strategy adds manufacturing economics optimization.

Beyond the Hanguang 800 inference chips deployed since 2019 for e-commerce search and recommendation, the revealed PPU training chip uses a domestic 7nm process and 2.5D chiplet packaging. The bet: achieving H100-comparable specifications while maintaining roughly 40% lower costs through domestic fabrication and packaging creates a sustainable competitive advantage.

Alibaba plans a 380 billion yuan investment over three years. Core AI model training will gradually shift to self-designed chips.

Tencent chose the opposite path.

Tencent is explicitly not designing chips, instead pursuing “open compatibility, diverse computing power.” Tencent Cloud has comprehensively adapted to mainstream domestic AI chips and developed “Xingmai” (星脉) high-performance networking to improve communication efficiency across heterogeneous accelerators. It claims a nearly 10x improvement in thousand-card cluster performance.

This offers initial flexibility, but it may prove disadvantageous in cost competition as rivals optimize complete stacks and capture integration benefits.