Welcome Back,

If you know me, you know I’m a huge fan of international writers and publication creators from smaller countries and off-the-beaten-track geographies. Nat is one of the hidden gems of original deep dives on A.I.

A former chess player from Georgia and a lover of robots (hence the ‘broken robot’ series of images in this post), she has a body of work that speaks for itself. By the end of this article you will know what I’m talking about.

Nat obsesses about the same things I do in this changing world: A.I. ethics, social justice, privacy, and the sociological concerns around emerging tech, such as social and wealth inequality.

By profession she’s a marketer, coder and builder in blockchain and A.I. With that breadth and depth of technical background, her deep dives and tutorials are an urban legend among devoted Substack readers. Suffice it to say that I’m a huge fan. She tinkers, which makes her tutorials very valuable.

A word of warning: this deep dive is over 5,000 words, something like an 18-minute read. Check out her about page. Click on the title of this piece to read it in your browser.

If you want to support the channel, a huge part of my mission is to get some of the most exciting writers to contribute diverse perspectives with their guest posts.

Substack writers after fees are making about $0.84 on the dollar.

In the future I’m hoping to be able to afford to pay contributing writers. Until then, I’m counting on you to send this emerging author traffic, motivation and support, so that she can continue writing here for free (Stripe is not yet supported in Georgia) alongside the many entrepreneurial and civic projects she undertakes.

She is a former lecturer, founder, translator and prolific author with a strong communal streak. She acts as a liaison between the Ministry of Regional Development and Infrastructure and the local community. This article is a tour de force on things you may not have fully considered but, at this radically unique moment in human history, maybe should.

First written in August, 2023.

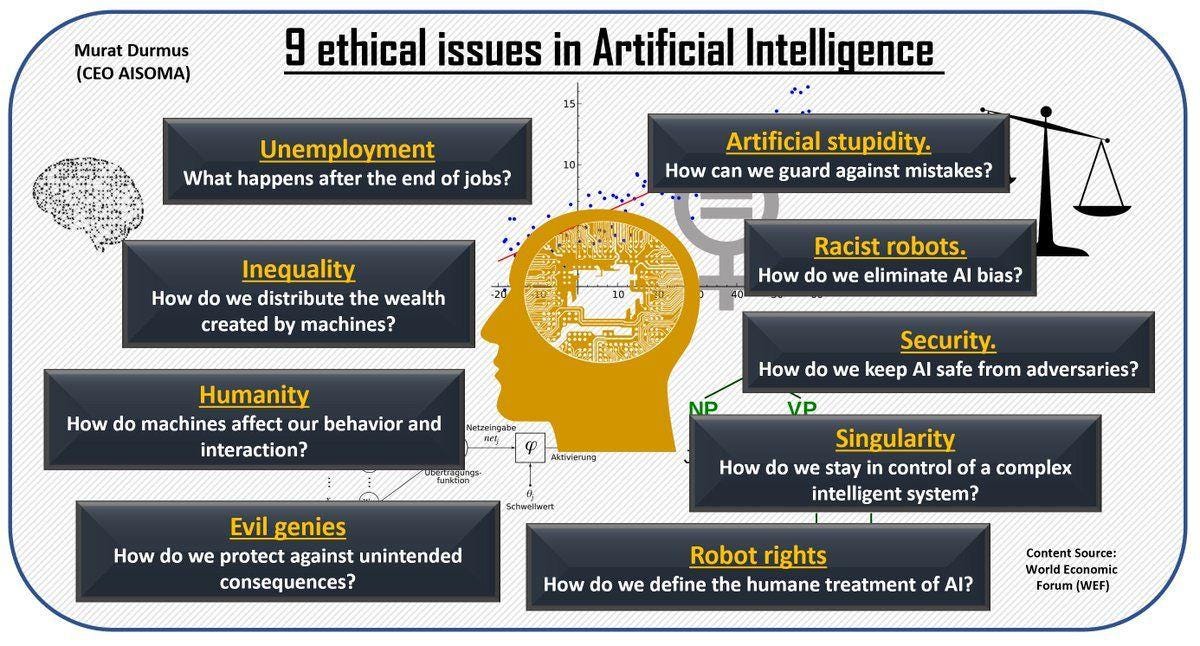

Artificial intelligence has rapidly evolved from sci-fi fantasy to everyday reality. Once the stuff of robot movies and alien worlds, AI now quietly powers our smartphones, streaming services, social media, and more. This lightning-fast progress has been transformative - yet also raises serious ethical questions we must grapple with.

As AI becomes further intertwined with our civilization, how do we ensure it aligns with human values? What are the potential pitfalls if we develop AI without wisdom and foresight? From biases baked into algorithms to jobs lost to automation, the risks are real. But so too are the incredible benefits, if harnessed responsibly.

We stand at a crossroads for one of the most consequential technologies of our time. The future remains unwritten - will we author an AI narrative that serves society, or one that succumbs to existential threats? Our choices today will determine the next chapter for generations to come.

In this piece, we'll explore the central ethical dilemmas posed by artificial intelligence. We'll examine the nuances, weigh difficulties and trade-offs, and uncover impacts on social fabric and individual lives. This journey illuminates how AI may shape our collective destiny, and the power we have to guide it down a path aligned with human values. The time is now to have these urgent conversations that will reverberate through the ages.

✨ TOP POSTS BY AI OBSERVER:

The author is known for her guides, deep dives and tutorials.

Automate Your Creativity: GPT-4's Game-Changing Headline and Tagline Generator (CODE included)

Building an AI Chatbot with GPT-4 on Windows 11: A Comprehensive Guide

Beyond Human Capabilities: Decoding the Chessboard with Artificial Intelligence

🧙♂️ Superintelligence and its potential dangers

The prospect of superintelligent AI conjures both awe and unease. This hypothetical AI would possess intellect far surpassing even the greatest human minds. It could systematically outthink the smartest researchers, unravel our thorniest scientific mysteries, and conceive of revolutionary inventions beyond our wildest dreams. The vision of an AI system with such unlimited potential is equal parts enthralling and disquieting. We stand amazed by what such an AI could accomplish. Yet we recoil at the notion of an intelligence exponentially more powerful than our own operating unchecked and without oversight. This stark dichotomy has fueled heated debate among experts about whether we can possibly control superintelligent AI once created - or if it poses an existential threat beyond our ability to contain. Some describe it as tantalizing but perilous power, like "playing with fire." As AI advances steadily forward, the possibility of superintelligence moves closer to reality. We must carefully consider how to harness its upside while safeguarding against catastrophic downside risks. The stakes could not be higher for humanity's future.

This existential risk is a massive concern for many AI researchers and thinkers. They worry that if we don't handle superintelligence with extreme caution, we might accidentally create something that threatens humanity's survival.

👀 Implications and future considerations

To be clear, superintelligent AI remains firmly in the realm of theory - we are still far from developing an AI system with such breathtaking cognitive abilities. But the rapid pace of AI progress suggests superintelligence may be less far-fetched than once believed. AI capabilities that long lived solely in the imagination now shape our everyday lives. With AI research accelerating on multiple fronts, advanced systems like deep learning achieving new milestones, and more investment pouring into the field, we inch closer to thresholds we can barely comprehend. Though not imminent, superintelligent AI looks less like science fiction with each new breakthrough. Its prospects force us to confront head-on whether we can control intelligences that may one day eclipse our own. We cannot afford to dismiss this as a distant hypothetical - the stakes are too high. The conversation must begin now.

Researchers and organizations are pushing for robust safety measures and ways to control superintelligence if and when it arrives. The notion of an adversarial AI turning against its creators may evoke sci-fi tropes. But we cannot dismiss the hazards of misaligned superintelligent AI as merely the stuff of movies. There are sobering reasons these catastrophic scenarios capture our imagination. Even if the odds seem low, the sheer scale of damage an uncontrolled superintelligence could wreak requires vigilant prevention. When building systems that may eventually far surpass human-level intelligence, we must proactively engineer them to align with human values and ethics. This entails solving monumental technical problems in value alignment and AI safety - failing to address these risks could have irreversible consequences. So while superintelligent AI is not imminent, its potentially existential downsides warrant the utmost research and preparation today. We have a duty to channel AI's immense capabilities to benefit humankind, not sow destruction. Time is of the essence to implement robust safeguards and prevent nightmarish scenarios from creeping closer to reality.

It's like trying to tame a dragon – we want the power but must also ensure it doesn't burn down the whole kingdom.

Nat is also a fan of robots.

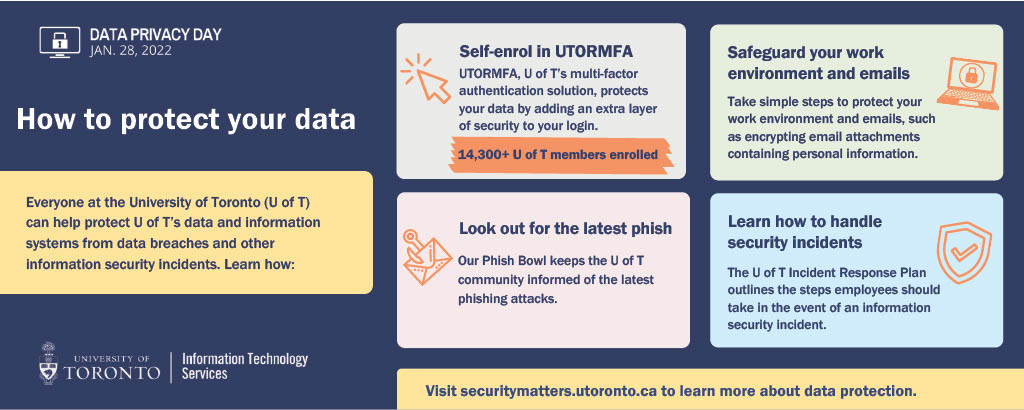

🔐 Privacy and data protection concerns

Imagine if your sensitive personal data, like financial records or medical history, gets into the wrong hands due to a data breach in an AI system. Scary, right? That's why ensuring robust data protection measures is crucial.

We must also scrutinize how our personal data gets utilized behind the scenes. AI algorithms frequently mine our digital footprints - from web browsing histories to social media activity - to infer patterns and make personalized recommendations or ad targeting. This automated data harvesting can provide convenience and relevance. But it also tests the boundaries of consent, transparency and our right to privacy. When private companies covertly collect, analyze and monetize the details of our digital lives, we must ask - do the benefits outweigh the risks? Does it erode people's agency over their information? Could it enable manipulation or discrimination? Does it set dangerous precedents without adequate oversight? As data-hungry AI systems expand, we need robust regulations and ethicists at the table to ensure our data gets handled responsibly, not exploited through opaque algorithms operating unchecked in the shadows.

Balancing data usage with privacy rights and security concerns

This one is a tricky tightrope walk.

On the one hand, data is the fuel that drives AI's remarkable capabilities. The more data AI systems have, the better they can learn and make informed decisions. So, companies and researchers are always hungry for it to improve their AI models and provide us with personalized experiences.

This is where a central tension emerges - our data holds tremendous value, yet it is also deeply personal. We have fundamental rights to privacy and security of our information. But companies and researchers seek unfettered access to amass more data to feed ever-hungry AI systems. Their goals often conflict with people's agency over their digital footprint and right to control how their data gets utilized. This clash between widespread data collection and personal privacy protections is increasingly coming to a head. As AI algorithms grow more invasive and influential in our lives, we must determine where to draw the line and implement safeguards against misuse of our data. How do we balance individual rights with the pursuit of technological progress? The choices we make today on data regulations will have far-reaching implications for the future of privacy.

So, it's like a tug-of-war, right? On one side, we want AI to be super smart and helpful, but on the other, we don't want our data to be mishandled or end up in the wrong hands.

We need robust data protection measures that ensure our privacy rights are respected while still allowing AI to access enough data to function effectively.

The Evolution of Surveillance Capitalism

“Surveillance capitalism challenges democratic norms and departs in key ways from the centuries long evolution of market capitalism” - Shoshana Zuboff

In today's digital landscape, personal data has transformed into a sought-after asset. Companies, especially those offering complimentary online services like Google's search functionalities and Facebook's networking platform, are at the forefront of this data-driven economy. They meticulously track our online footprints—be it our search preferences, social interactions, or shopping behaviors—to generate monetizable insights.

This phenomenon, termed 'surveillance capitalism' by academic Shoshana Zuboff in 2014, is deeply embedded in our global digital infrastructure. It's not just about advertising anymore; it's about leveraging vast amounts of "big data" for diverse revenue streams.

But as we delve deeper into this era, the ramifications become more profound and concerning:

Digital Health Footprints

The rise of health-centric apps, smart wearables, and online medical consultations has led to an influx of sensitive health and psychological data on the web. Such data, if misappropriated, can be used to exploit individuals' health conditions or even influence insurance decisions.

State-led Digital Watch

Many governments are tapping into the reservoirs of big data, sometimes justifying it as a measure for public safety or national interest. While some applications might be genuine, there's an ever-present danger of overstepping boundaries, compromising individual freedoms.

The Shadowy Data Trade

The value of personal data has given birth to a bustling market, both in the open and in the hidden corners of the internet. Data brokers, straddling the line between legitimate business and murky dealings, are trading in this new currency. The repercussions can range from identity theft to more sinister personal threats.

In essence, as our existence becomes more digitally intertwined, the balance between convenience and privacy is teetering. The digital age, while offering unprecedented opportunities, also brings with it challenges to our rights and individual autonomy.

The “Broken Robot” series / Midjourney.

🧘 Safeguarding personal data and addressing data breaches

We need to be proactive about safeguarding our data. That means being cautious about sharing sensitive information online, especially on sketchy websites or with unknown apps.

Think twice before clicking on those suspicious links or giving away too much info – you never know who might be lurking!

Use strong and unique passwords

The temptation to reuse passwords across sites is understandable - remembering a unique one for each account can quickly become unmanageable. But recycling credentials is like gifting the keys to hackers, allowing our entire digital life to be compromised. Instead, let's get creative and diligent with our password hygiene. Using unpredictable combinations of upper and lower case letters, numbers, and symbols can go a long way. Pair this with changing passwords regularly and avoiding common phrases. It's a bit cumbersome, but far better than the alternative.
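For anyone who would rather let the computer do the creative part, here is a minimal Python sketch of the "unpredictable combinations" idea. It assumes nothing beyond the standard library; the helper name `generate_password` and the default length of 16 are illustrative choices, not a recommendation from any standard.

```python
import secrets
import string

# Hypothetical helper for illustration; the name and defaults are assumptions.
ALPHABET = string.ascii_letters + string.digits + string.punctuation


def generate_password(length: int = 16) -> str:
    """Return a random password that mixes all four character classes."""
    if length < 4:
        raise ValueError("length must be at least 4 to fit every character class")
    while True:
        # secrets draws from the operating system's cryptographic randomness.
        candidate = "".join(secrets.choice(ALPHABET) for _ in range(length))
        # Retry until lower case, upper case, digits and symbols all appear.
        if (any(c.islower() for c in candidate)
                and any(c.isupper() for c in candidate)
                and any(c.isdigit() for c in candidate)
                and any(c in string.punctuation for c in candidate)):
            return candidate


if __name__ == "__main__":
    print(generate_password())
```

Calling `generate_password()` once per account gives every site its own credential, which is the whole point: one leaked password no longer opens every door.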

And when breaches inevitably occur, we must respond swiftly to protect ourselves. If a company we rely on suffers a data leak, closely monitor the situation, check whether the company has published guidance for affected users, and follow its instructions. Reset any reused passwords immediately. Review account activity and statements for suspicious transactions. Consider credit freezes if highly sensitive information was exposed. Remain vigilant for phishing attempts using stolen data. While breaches produce anxiety, good cybersecurity habits can mitigate damages. We may not fully avoid risks in the digital age, but proactive precautions keep us resilient.

Now, let's talk about encryption.

When sending sensitive data, like personal information or passwords, let's make sure it's encrypted. Encryption turns our data into secret code that only the intended recipient can decode, like having a secret language.

Monitor financial accounts regularly

Vigilance is key - routinely monitoring financial accounts and statements allows early detection of any suspicious charges or activity. At the first sign of unauthorized transactions, immediately contact institutions to report fraud and halt further damage. Accepting risks is one thing, but neglect invites trouble.

Beyond finances, discretion with personal information also thwarts potential attacks. Oversharing intimate life details on social media may be tantalizing, but restraint is advisable. Revealing too much hands over ammunition to hackers in the form of security questions, password reset hints, and other exploitable bits. The most innocent-seeming tidbits can enable social engineering schemes, identity theft, or targeted scams when pieced together. Acknowledge risks, then think twice before posting private information that cybercriminals thrive on acquiring.

Finally, let's stay informed about data protection and privacy trends. Technology is always changing, and new threats can pop up, but if we stay in the loop, we'll be better prepared to protect our personal data like champs.

So, with these smart strategies in our back pocket, we can tackle data breaches and safeguard our data like the savvy cyber wizards we are.

Let's keep our data safe and our digital lives secure!

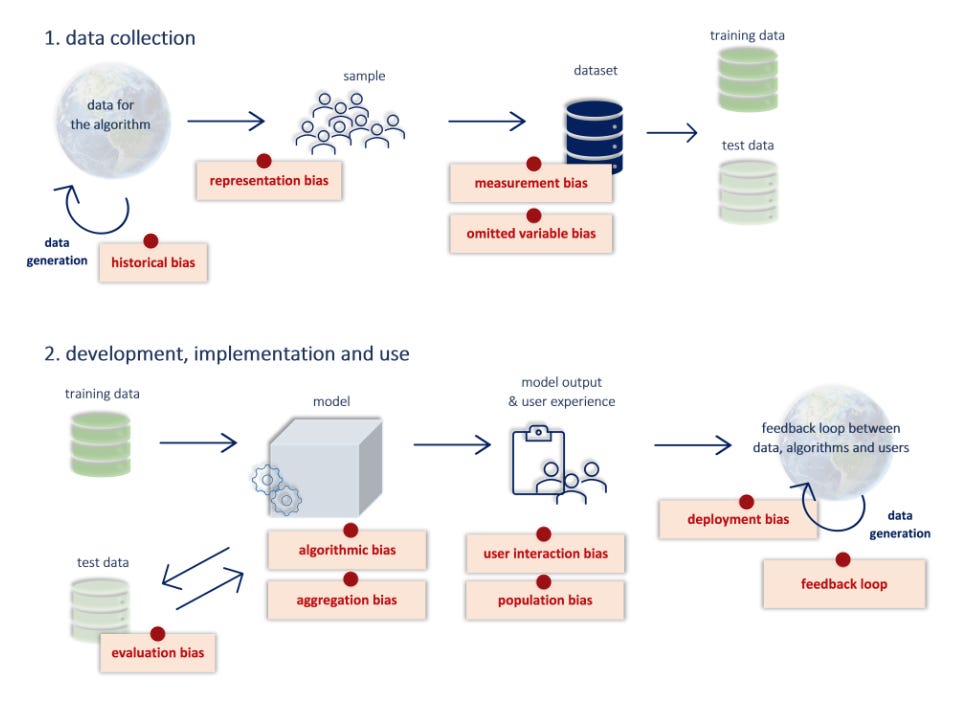

🙅🏻 Bias and fairness in AI

AI models are built on the data they're trained with.

But here's the catch - that data can have hidden biases from past human prejudices and societal norms. And guess what? These biases can sneak into the AI systems and unknowingly make their decisions and recommendations biased too.

It's like passing on those unfair patterns without even realizing it.

Challenges posed by "black box" AI algorithms 🔲

Black box AI systems can inherit biases from the data they're trained on. And without transparency, we might not even know those biases are there, like a hidden trap waiting to surprise us!

The thing is, we want to trust AI, but it's hard when we can't peek into its decision-making process. Plus, when things go wrong, it's tough to figure out what caused it and how to fix it.
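One common way to peek at a black box without opening it is permutation importance: shuffle one input at a time and watch how much the model's accuracy drops. Below is a minimal sketch under loud assumptions: the toy loan-style model, the feature names and the numbers are all made up, and the model's own outputs stand in for ground truth so the baseline is a clean 100%.

```python
import random


# Hypothetical "black box": we may call it, but pretend we cannot read its rules.
def black_box_model(income: float, age: float) -> int:
    return 1 if income > 50 else 0


# Made-up rows of (income, age), for illustration only.
ROWS = [(30, 25), (80, 40), (45, 60), (95, 33), (60, 51), (20, 47), (70, 29), (55, 38)]
# The model's own outputs serve as labels here, so baseline accuracy is 1.0.
LABELS = [black_box_model(income, age) for income, age in ROWS]


def accuracy(rows, labels):
    hits = sum(black_box_model(*row) == label for row, label in zip(rows, labels))
    return hits / len(rows)


def permutation_importance(rows, labels, column, trials=200, seed=0):
    """Average accuracy drop when one column is shuffled across the rows."""
    rng = random.Random(seed)
    baseline = accuracy(rows, labels)
    total_drop = 0.0
    for _ in range(trials):
        shuffled = [row[column] for row in rows]
        rng.shuffle(shuffled)
        # Rebuild every row with the shuffled value in the chosen column.
        permuted = [
            tuple(shuffled[i] if j == column else value for j, value in enumerate(row))
            for i, row in enumerate(rows)
        ]
        total_drop += baseline - accuracy(permuted, labels)
    return total_drop / trials


if __name__ == "__main__":
    for index, name in enumerate(["income", "age"]):
        print(name, round(permutation_importance(ROWS, LABELS, index), 3))
```

In this toy setup, shuffling income hurts accuracy while shuffling age changes nothing, which tells us the model leans entirely on income. The same probe on a real system could reveal that it leans on something it should not.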

⚖️ Mitigating bias and promoting fairness in AI algorithms

Tackling AI bias and discrimination is no easy feat. It involves diving into the technical nitty-gritty of AI, considering social and cultural factors, and understanding the real impact of AI decisions.

Without strong regulations in place, we run the risk of letting biased AI systems roam free, which could seriously harm society and shake people's trust in AI. It's a big challenge, but we must face it head-on to ensure fair and responsible AI use.

There are several approaches that researchers and developers are exploring to keep bias in check and promote fairness in AI algorithms.

Using diverse and representative training data: This means making sure the data used to train AI includes people from all different backgrounds to avoid unfair biases.

Pre-processing and data augmentation: It basically cleans up the data and creates more balanced datasets to reduce potential biases.

Developing algorithms that explicitly include fairness constraints during AI model training: This helps strike a balance between accuracy and fairness, making sure no group gets favored or disadvantaged.

Applying post-hoc fairness interventions: These come into play after training the AI model to adjust the results and achieve greater fairness if any biases are detected.

Making AI more interpretable: Understanding how AI makes decisions makes it easier to spot biases and improve fairness.

Involving humans in the loop: By having people review and validate AI outputs, we can catch and correct biases, taking a more human-centered approach.

And, of course, it's a continuous process! We need to keep monitoring and evaluating AI systems to ensure fairness over time.
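To make "monitoring" and "post-hoc intervention" a bit more concrete, here is a minimal sketch of one simple fairness check, the demographic parity gap, followed by one possible post-hoc adjustment. Everything in it is an assumption for illustration: the two groups, the scores and the cutoffs are made up, and whether group-specific cutoffs are appropriate at all depends heavily on context and law.

```python
# Made-up model scores for applicants in two hypothetical groups, "A" and "B".
APPLICANTS = [
    ("A", 0.9), ("A", 0.8), ("A", 0.7), ("A", 0.4),
    ("B", 0.6), ("B", 0.5), ("B", 0.3), ("B", 0.2),
]


def approval_rates(applicants, thresholds):
    """Share of each group whose score meets that group's cutoff."""
    approved, totals = {}, {}
    for group, score in applicants:
        totals[group] = totals.get(group, 0) + 1
        approved[group] = approved.get(group, 0) + (score >= thresholds[group])
    return {group: approved[group] / totals[group] for group in totals}


def parity_gap(rates):
    """Demographic parity difference: highest approval rate minus the lowest."""
    return max(rates.values()) - min(rates.values())


# One shared cutoff: the scores end up favoring group A.
before = approval_rates(APPLICANTS, {"A": 0.65, "B": 0.65})
# Post-hoc intervention: a separate cutoff for group B, picked by hand here.
after = approval_rates(APPLICANTS, {"A": 0.65, "B": 0.25})

if __name__ == "__main__":
    print("gap before:", parity_gap(before))
    print("gap after:", parity_gap(after))
```

Running the same gap calculation on fresh decisions every so often is the "continuous process" in miniature: if the number starts creeping up, it is time to look under the hood again.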

The Evolution of A.I. Regulation in 2023

In my article Navigating the Regulation of Artificial Intelligence I explored the latest developments in the regulation of AI. I will be adding new information to the article as it becomes available, so you can stay informed about the latest progress.

Here are the key points about AI regulation from the article:

• OpenAI CEO Sam Altman and two other experts have proposed a plan for governing superintelligence. They predict that within the next decade, these systems could surpass human expertise and be as productive as large corporations.

• There is a growing debate on how to regulate superintelligence. The difficulty of regulating emerging technology, such as social networks and cryptocurrency, raises questions about how we will regulate superintelligence.

• Microsoft has joined the global debate on AI regulation, calling for a new federal agency to control its development. Microsoft President Brad Smith outlined a five-point plan for addressing the risks of AI and called for an executive order requiring federal agencies to implement a risk management framework for AI.

• Opponents such as IBM argue that AI regulation should be integrated into existing federal agencies due to their expertise in the sectors they oversee. IBM’s Christina Montgomery advocates for a precision regulation approach to AI, where regulation focuses on specific use cases of AI rather than the technology itself.

• The European Union’s Artificial Intelligence Act (AIA) moved closer to passage on May 11, 2023. The AIA is a risk-based regulation that would impose different obligations on AI systems depending on their risk level.

Key Takeaways 🔑

In the meantime, there is broad agreement that regulating AI is a nuanced challenge due to the technology's rapid evolution and unique complexities. While government oversight will play a key role, a multi-faceted approach is needed for balanced, adaptive policies. This could involve:

Developing flexible regulatory frameworks attuned to AI's technical capacities and limitations. Overly rigid regulations risk stifling innovation and falling behind the technology's pace.

Fostering strong self-regulation within the AI industry via ethical codes of conduct and best practices. Government and industry collaboration is key for effective co-regulation.

Promoting AI research and literacy to inform evidence-based policies. Regulators must maintain technical expertise to regulate intelligently. Public education builds trust and social license.

Ensuring transparency and accountability across the AI design, development and deployment pipeline. Ongoing impact assessments enable adaptive regulations that address emerging risks.

Building participative governance structures that integrate diverse voices - industry, academia, civil society, marginalized groups. Inclusive discourse strengthens oversight.

Regulating AI is a complex socio-technical challenge with high stakes for society and human rights. We need multifaceted governance embracing nuance, expertise, transparency and stakeholder diversity to steer AI's evolution ethically. The goal is maximizing its benefits while mitigating potential harms.

Autonomy and accountability of AI systems 🗽

Some AI systems now operate with increasing autonomy, able to make decisions and act without direct human oversight. We are ceding more control to AI in high-stakes domains like healthcare, transportation and finance. But granting unchecked autonomy risks relinquishing too much responsibility. We must ensure that autonomous AI aligns with ethics, safety and human priorities.

Blindly trusting algorithms to make impactful choices on their own, without transparency or accountability, is reckless. However, we also cannot afford to constrain AI advancement by demanding humans manually approve every minor action. Finding the right equilibrium - where AI can independently navigate bounded contexts but defers to human judgment for major decisions - is an ongoing challenge. It necessitates continuous risk assessment, ethical guidelines and often some calculated trial-and-error. But the alternative, surrendering key decisions about health, freedom or human life to black-box algorithms, is far more dangerous than imperfect solutions. Our future may depend on getting the level of AI autonomy right.
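The equilibrium described above, where AI handles bounded contexts on its own and defers to people on major decisions, can be sketched as a simple routing rule. This is a minimal illustration under stated assumptions: the `Decision` type, the 0-to-1 risk score and the 0.3 cutoff are all hypothetical, and in practice the hard part is producing a risk estimate anyone should trust.

```python
from dataclasses import dataclass

# Hypothetical cutoff: anything riskier than this goes to a person.
RISK_THRESHOLD = 0.3


@dataclass
class Decision:
    action: str
    risk: float  # assumed 0-to-1 estimate supplied by some upstream assessment


def route(decision: Decision, threshold: float = RISK_THRESHOLD) -> str:
    """Let low-risk actions proceed automatically and escalate the rest."""
    if not 0.0 <= decision.risk <= 1.0:
        raise ValueError("risk must be between 0 and 1")
    return "auto_approve" if decision.risk <= threshold else "human_review"


if __name__ == "__main__":
    examples = [
        Decision("reorder printer paper", 0.05),
        Decision("adjust medication dose", 0.9),
    ]
    for decision in examples:
        print(decision.action, "->", route(decision))
```

Moving the threshold up or down is exactly the trade-off in question: too low and humans rubber-stamp trivia all day, too high and the machine quietly makes calls it should not.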

The interplay of independence and human guidance

When AI systems operate autonomously, it can be challenging to figure out who's responsible if things go wrong. Imagine an AI making a mistake or causing harm – who takes the blame?

But don't worry; we're working on ways to hold AI accountable. Creating guidelines and regulations helps ensure that AI is developed responsibly and ethically.

It's not about stifling AI's potential but finding the right balance. We want AI systems to be independent thinkers, but we also need to ensure they don't run wild like unruly teenagers!

That's why researchers are exploring ways to make AI more transparent, so we can understand how they make decisions.

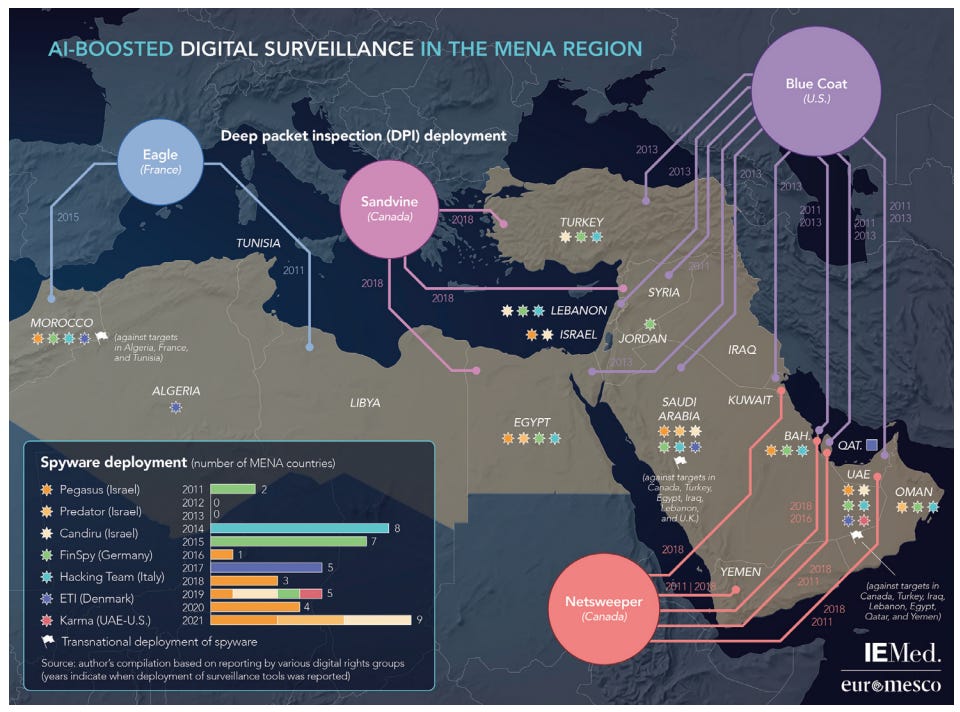

⚔️ AI's role in warfare and surveillance

First, there are autonomous weapons: AI-powered machines taking the reins in warfare.

While they might reduce human casualties, they could also lead to unprecedented and rapid escalation of conflicts. The idea of machines making life-and-death decisions raises ethical questions about accountability, proportionality, and our value of human life.

Next, we've got mass surveillance – AI technologies that can predict behavior, mine data, and recognize faces.

While these tools can aid crime prevention and national security, there's a flip side – oppressive regimes could misuse them to control and suppress their citizens.

With these AI advancements, we need to tread carefully, setting strong regulations and ethical guidelines to ensure we harness AI's power responsibly. We must keep a vigilant eye on the potential risks while maximizing the benefits for society.

By proactively addressing these challenges, we can build a future where AI serves humanity's best interests and doesn't compromise our values and rights.

🪂 The Risks of AI-Enabled Military Competition

The intensifying strategic rivalry between the U.S. and China raises urgent concerns about an AI arms race with destabilizing effects. Both nations are pouring resources into developing AI, including for military applications like autonomous weapons and logistics. This risks fueling a dangerous escalation cycle.

However, the militarization of AI does not have to be inevitable. The high stakes require the U.S. and China to explore avenues for increasing transparency, building trust and promoting cooperation around AI's development and deployment.

They should expand efforts to align on ethical AI principles and governance frameworks. Confidence-building measures like reciprocity in restricting certain AI weapons capabilities could mitigate risks. Ongoing dialogue between security communities, policymakers and AI researchers is vital for progress.

Furthermore, the dual-use nature of AI demands responsible approaches. Governance mechanisms should aim to unlock its benefits for development while restricting harmful applications like in biological warfare. Avoiding an unchecked AI arms race will require stakeholders to elevate long-term foresight above short-term advantages.

AI will shape the future of power and security in complex ways. But its risks are manageable if key actors work proactively to build cooperation, not confrontation. With strategic vision and ethical leadership, the U.S. and China can steer AI's trajectory toward stability, not escalation. But averting crisis requires prioritizing diplomacy and mutual understanding.

Employment disruption and inequality

Although it boosts productivity, AI could also replace some human workers. A robot taking over our job – not a great feeling, right?

This could mean lots of people losing their jobs, leading to big problems for society and the economy. You can get a domino effect, causing major changes in how we live and work.

Job redesign is a smart move – it means reshaping jobs to work hand in hand with AI. Instead of competing with them, we can team up and make the most of their superpowers!

And let's not forget about social security reforms. Changing how we support people during unemployment can provide a safety net for those affected by AI's rise.

STUDY: According to a policy brief by the OECD, Artificial Intelligence (AI) has made significant progress in areas like information ordering, memorization, perceptual speed, and deductive reasoning – all of which are related to non-routine, cognitive tasks. As a result, the occupations that have been most exposed to advances in, and automation by, AI have tended to be high-skilled, white-collar ones. This contrasts with the impact of previous automating technologies, which have tended to take over primarily routine tasks performed by lower-skilled workers.

IMPORTANT CONSIDERATIONS

Addressing socioeconomic disparities and promoting inclusivity

The kicker is, the ones hit hardest by job displacement might not see the rewards of AI advancements, which can be a cruel twist of fate!

This could lead to more gaps between regions and different demographics. It's like some places and people getting left behind while others race ahead.

Promoting access to AI education and training can help more people benefit from AI's growth. We should give everyone a fair shot at the AI game.

We also need policies that ensure the economic gains from AI are shared more broadly.

Overreliance on AI: striking a balance to preserve humanity

As we grow increasingly dependent on AI, some important implications must be considered. It's a balancing act, ensuring we use AI's capabilities wisely without losing sight of our human touch.

AI can be a game-changer, boosting efficiency and innovation, but we must be cautious about potential pitfalls. We need to be mindful of not letting AI overshadow our unique human qualities and intuition.

We can navigate the implications thoughtfully by staying aware of our reliance on AI and fostering a healthy relationship with technology. We have to be the ones in control, not the other way around.

Addressing dehumanization in an AI-driven world

As AI plays a bigger role in our lives, we must be mindful of how it affects our human connections. We have to find that sweet spot where AI enhances our lives without diminishing our unique human qualities.

We can foster empathy and emotional connections, ensuring AI doesn't overshadow our interpersonal relationships.

By being aware of the impact of AI on our humanity, we can take proactive steps to preserve our human essence in this technology-driven era.

It's all about embracing the benefits of AI while holding onto what makes us human.

The Digital Double-Edged Sword: How Technology Can Both Connect and Isolate Us

Advances in digital technology and social media have fundamentally transformed communication and relationships. While these tools help us instantly connect with others worldwide, they also carry risks if allowed to replace human interaction and emotional bonds.

In an ongoing project that explores the benefits of time spent in natural environments, 12 older adults of diverse backgrounds who walked three to five times a week for at least 30 minutes were asked to list the top five benefits of walking outside.

Superficially, social media provides the illusion of companionship. Yet curating our online image filters out the authenticity that comes from vulnerability and presence. Platforms engineered to maximize engagement can foster addictive usage and displacement of in-person community.

Overreliance on technology for connection risks reducing multidimensional people to one-dimensional profiles. Nuance and empathy wane when we relate to each other as content, not beings with inner lives. Even as we become more globally connected, many report feeling more isolated and depressed.

As AI grows more sophisticated, we should be wary of anthropomorphizing it despite its human-like capabilities. While AI can provide utility, its inability to truly empathize means it should complement, not substitute, human relationships.

In one study, participants kept a daily log of time spent doing 19 different activities during weeks when they were and were not asked to abstain from using social media. In the weeks when people abstained from social media, they spent more time browsing the internet, working, cleaning, and doing household chores. However, during these same abstention periods, there was no difference in people's time spent socializing with their strongest social ties.

SOURCE: Social media’s growing impact on our lives (apa.org)

Seeking balance is critical. While embracing tech's benefits, we must consciously preserve time for meaningful real-world interactions. Technology is not inherently isolating, but excessive use can detach us from the interpersonal bonds essential for well-being. With self-awareness and perspective, we can harness it to enrich, not erode, our essential human need for genuine connection.


Church of Apple

The future of AI ethics and responsible development

Figure 1. Source: WEF

With rapid technological advancement, it becomes imperative to establish clear guidelines and regulations to safeguard against AI bias and discrimination while ensuring the protection of privacy and data rights.

We can empower individuals and businesses to make informed decisions by emphasizing education and AI literacy.

We must stay vigilant and adaptable, promoting AI that amplifies human potential and contributes positively to society. We stand at a crossroads with AI - we can either recklessly rush ahead or thoughtfully steer its trajectory. The path we must take is clear: building an AI-enabled future that uplifts humanity. This requires proactive collaboration across sectors - technologists, ethicists, policymakers, and society. Together, we can foster innovation guided by wisdom, ethics and foresight at every step.

The destination is within reach, but we must begin the journey in earnest. Only through open and urgent dialogue, grounded in our shared values, can we ensure AI's profound impacts are overwhelmingly positive. The task before us now is to create a future where AI amplifies our humanity, not diminishes it. Our collective choices will ripple through generations. The time for thoughtful action is unequivocally now - our children's children deserve nothing less.

Churn of Tesla.

Summary

As we end our journey through the captivating world of AI ethical dilemmas, it's clear that the road ahead is both fascinating and challenging.

We've explored the potential dangers lurking within superintelligence, danced with the complexities of privacy and data protection, and championed the importance of fairness and transparency in AI's realm.

But that's not all.

We've also confronted the intriguing questions surrounding AI's autonomy and accountability, uncovered its influence on warfare and surveillance, and pondered its impact on employment and inequality. It's been quite the adventure.

The future remains undetermined - and in our hands. We possess immense power to guide AI's trajectory, steering it toward benevolence over destruction. But mastering this technology requires wise restraint and moral imagination as much as raw innovation.

We must approach AI aligned with ethics and shared human values. And we must remain vigilant - establishing guardrails, demanding accountability, and centering our shared humanity. If we embrace this challenge with wisdom, foresight, and empathy for one another, the story we author can uplift the human spirit rather than diminish it.

The task at hand is immense, but so is our capacity for good. By joining together, we can nurture AI that elevates our collective potential. There is still time to shape a future where humanity flourishes alongside our technological creations. But first, we must revive this urgent conversation - for our generation, and all those to come.

We can navigate the twists and turns of AI ethics and forge a path toward a brighter, more equitable world where technology and humanity dance harmoniously into the future.

✨ Top additional posts by The AI Observer:

The author is known for her guides, deep dives and tutorials.

How Fine-Tuning is Taking GPT-4 to New Heights: A Non-Techie's Guide

The Dark Side of LLMs: Addressing Security Concerns in the Age of AI

Revolutionizing Education: The Multifaceted Impact of AI in Learning and Teaching

I hope I’ve convinced you of the brilliance of The AI Observer, the complexity of these topics, and how they overlap with the future of civilization.

Excellent piece. I appreciate both yours and Nat's work. A simple, straightforward question: What is the probability the Security State already has achieved and secretly deployed AGI?

Congratulations to both of you! This is a very sensible collaboration between two folks who do longer form AI philosophy/news well.

This really hammers home the need to cooperate across multiple dimensions, and you do a great job of outlining the dual-edged nature of AI. It's probably not unfair to state that this is the central challenge of our time.