The Insurmountable Problem of Formal Reasoning in Large Language Models

Or why LLMs cannot truly reason, and maybe never will… Hybrid Claude 3.7, Nvidia's DeepSeek optimizations, 🔥 Mostly Harmless Ideas!

Good Morning,

Today we are going to talk about reasoning models and their capabilities. Anthropic, the maker of Claude, released a new ‘hybrid reasoning’ AI model this week. While OpenAI and others have released separate models focused on longer reasoning times, Anthropic builds advanced reasoning into its standard model. This is similar to what OpenAI plans to do with a more unified GPT-5 later this year.

Today we have a guest post from Alejandro Piad Morffis of the newsletter Mostly Harmless Ideas. I consider Alejandro a highly philosophical AI writer (more on this later this week) whose op-eds I find consistently satisfying. He’s a full-time college professor and researcher working at the intersection of artificial intelligence and formal systems.

Mostly Harmless Ideas 🔬

Anthropic > OpenAI in Reasoning Models

Claude 3.7 marks an important moment in the history of “reasoning” models, so it fits our topic today very well. It is touted as the first hybrid reasoning AI model on the market, blending real-time responses and deeper reasoning into a single system. Anthropic has essentially beaten OpenAI to this, which says a lot about today’s ecosystem, even though Claude is mostly a tool for enterprise AI and B2B businesses, while ChatGPT is more popular among B2C consumers.

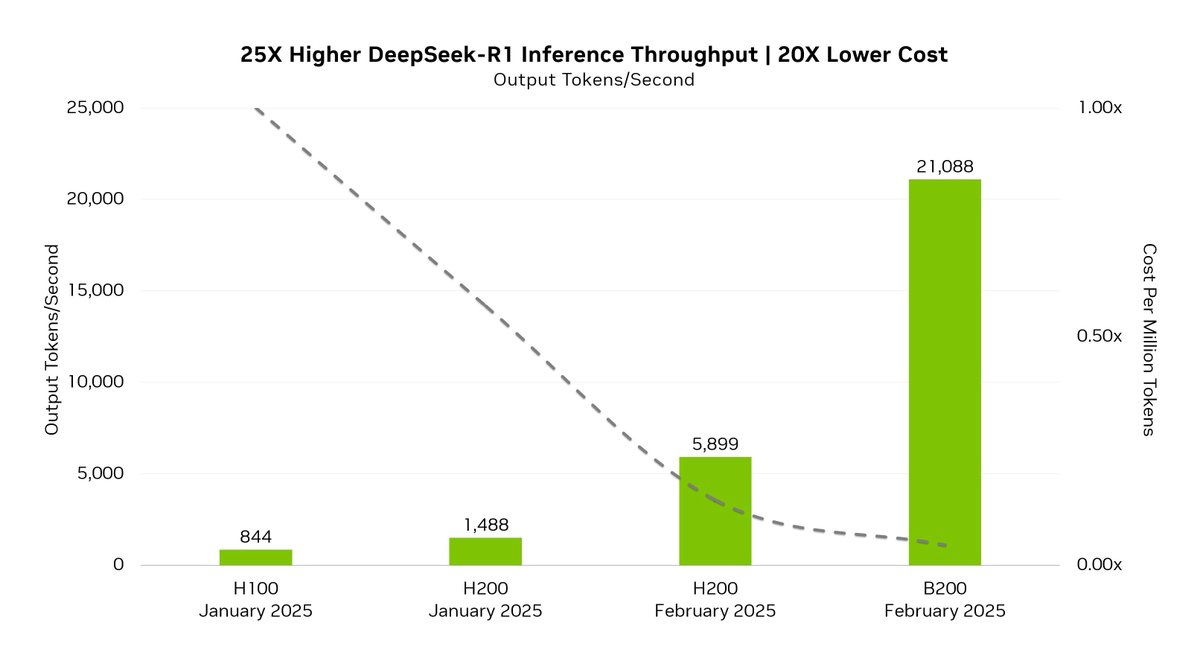

By the way, Nvidia recently released DeepSeek-R1 optimizations for Blackwell, which it says deliver 25x more revenue at 20x lower cost per token compared with the Nvidia H100 just four weeks ago. The gains come from TensorRT optimizations for DeepSeek on Nvidia’s Blackwell architecture, including FP4 inference with state-of-the-art production accuracy: the FP4 version scored 99.8% of the FP8 score on the MMLU general intelligence benchmark.

Whether it’s OpenAI’s o3 model, DeepSeek, or Claude 3.7, reasoning models are among the defining AI experiments of 2025 and yet another frontier.

Alejandro holds a double PhD in machine learning from the University of Alicante and the University of Havana, specializing in language modelling for the health and clinical domains. So we might want to take his opinions seriously and enjoy his historical explorations. He is also fairly prolific:

His general Substack: https://blog.apiad.net

His Gumroad profile: https://store.apiad.net

List of books he’s working on: http://books.apiad.net

His link tree: https://apiad.net

As chain-of-thought (CoT) reasoning becomes mainstream in our chatbots, Anthropic says the new model can give either near-instant responses or extended responses that show step-by-step reasoning, and users can choose which type of response they want.

Claude 3.7 Sonnet shows particularly strong improvements in coding and front-end web development. On February 10th, Anthropic also released the Anthropic Economic Index, which is super interesting (I highly recommend checking out the paper).

Claude 3.7 Sonnet introduces a unique approach to AI reasoning by integrating quick response features with the ability to perform extended, step-by-step reasoning.

With this release, Anthropic is also introducing Claude Code, a command-line tool for agentic coding.

Meanwhile, Perplexity has a new browser called Comet.

Top Articles by the Guest Contributor

Alejandro challenges us to think differently with some bold ideas:

Why reliable AI requires a paradigm shift

The Most Beautiful Algorithms Ever Designed

Artificial Neural Networks Are Nothing Like Brains

Why Artificial Neural Networks Are So Damn Powerful - Part I

Read more of his (top) work here.

We Can Learn a Lot from Anthropic’s Product Development about the Future 🚀

Launched on February 10, 2025, the Anthropic Economic Index aims to understand the impact of artificial intelligence (AI) on labor markets and the economy. Results:

Today, AI usage is concentrated in software development and technical writing tasks.

Over one-third of occupations (roughly 36%) see AI use in at least a quarter of their associated tasks, while approximately 4% of occupations use it across three-quarters of their associated tasks. Read more.

Claude 3.7 Sonnet rolled out to all users and developers on Monday, February 24th, 2025. Anthropic will likely acquire some of the best startups at the intersection of AI and software development. It’s so obvious. While Anthropic already powers AI coding tools like Cursor, it’s pitching Claude Code as “an active collaborator that can search and read code, edit files, write and run tests, commit and push code to GitHub, and use command line tools.”

Claude Code boasts a range of functionalities that streamline the coding process:

Code Understanding

Active Collaboration

Automation of Routine Tasks

Testing Support

Small Teams are the Future 💡

Ben Lang of Next Play made this infographic celebrating some of the AI startups that are scaling faster than usual with smaller-than-usual teams.

The Advent of Reasoning Models in 2025 🌊

Claude (I mean AI) is indeed starting to rewrite the playbook for many AI startups, many of which, hilariously, are powered by Anthropic. Notably, Together AI belongs in the same club; read their guide on Prompting DeepSeek-R1. Now, with better reasoning models, what will become possible? Claude 3.7 Sonnet has the same price as its predecessors: $3 per million input tokens and $15 per million output tokens, which includes thinking tokens.
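To make that pricing concrete, here is a minimal Python sketch that estimates the cost of a single request. It assumes, as stated above, that thinking tokens are billed at the output rate; the function name and the token counts in the example are hypothetical, chosen only for illustration.

```python
# Claude 3.7 Sonnet list prices quoted above, in USD per million tokens.
INPUT_USD_PER_MTOK = 3.00
OUTPUT_USD_PER_MTOK = 15.00


def request_cost_usd(input_tokens: int, output_tokens: int, thinking_tokens: int = 0) -> float:
    """Estimate the cost of one request (hypothetical helper).

    Assumption: thinking tokens are billed as output tokens, per the pricing note above.
    """
    billed_output = output_tokens + thinking_tokens
    return (input_tokens * INPUT_USD_PER_MTOK + billed_output * OUTPUT_USD_PER_MTOK) / 1_000_000


# Hypothetical request: 2,000 prompt tokens, 500 visible answer tokens, 4,000 thinking tokens.
print(f"${request_cost_usd(2_000, 500, 4_000):.4f}")
```

In this made-up case the thinking tokens alone cost 4,000 × $15 / 1M = $0.06 of a roughly $0.07 total, so extended reasoning can dominate the bill even when the visible answer is short.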

While OpenAI’s ChatGPT is synonymous with generative AI, it’s actually companies like Anthropic, along with Cohere and others, that are powering the future of enterprise AI.

So all of this is a segue into the very timely and relevant piece by Alejandro.

OpenAI Explorations 🗺️

If you are an OpenAI fan, you have to check out a new series by Eric Flaningam of Generative Value:

OpenAI, Part 3: The (Probabilistic) Future of OpenAI

This is a very fulfilling deep dive, especially suited to advanced readers on AI, but Alejandro, who currently lives in Cuba, makes it all accessible.

By Alejandro Piad Morffis, January and February 2025.

The Insurmountable Problem of Formal Reasoning in Large Language Models

Subtitle: Or why LLMs cannot truly reason, and maybe never will…

TL;DR: 🐇

Large Language Models, at least in the current paradigm, have intrinsic limitations that prevent them from performing reliable reasoning.

The stochastic sampling paradigm means hallucinations are irreducible, and some mistakes will always be possible.

The bounded-computation architecture of Transformer-based LLMs means there are always problems that require more “thinking time” than any LLM can provide.

Even with techniques like chain-of-thought prompting and integration with external tools, these limitations cannot be fundamentally overcome.

Newer reasoning-enhanced models are a technical evolution but not a qualitative paradigm shift, so they are subject to the same limitations, even if diminished in practice.

Ultimately, reliable formal reasoning in natural language might be unsolvable.
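To make the first point concrete, here is a simplified back-of-the-envelope model, offered as an illustration rather than as the author’s formal argument: if each sampled reasoning step independently goes wrong with some small probability eps > 0, the chance that an n-step chain is entirely correct is (1 - eps)^n, which decays toward zero as chains grow longer. Independence between steps is a simplifying assumption, and the function name is hypothetical.

```python
def p_error_free(eps: float, n_steps: int) -> float:
    """Probability that n independent steps all succeed when each fails with probability eps.

    Simplifying assumption: errors at different steps are independent.
    """
    if not 0.0 <= eps <= 1.0:
        raise ValueError("eps must be a probability in [0, 1]")
    return (1.0 - eps) ** n_steps


# Even a 1% per-step error rate compounds quickly over long chains of reasoning.
for n in (10, 100, 1_000):
    print(f"{n:>5} steps: {p_error_free(0.01, n):.4f}")
```

Under these assumptions, a 100-step chain with a 1% per-step error rate comes out fully correct only about 37% of the time. The result reaches exactly 1.0 only when eps is 0, which stochastic sampling does not guarantee.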

Reasoning models are the latest trend in AI.

Support independent authors who uncover glimpses of AI that the mainstream media cannot, or will not, explore.