The AI Semiconductor Landscape

The semiconductor industry is top of mind in 2025. But how does it all fit together?

Happy Trump inauguration day! With the U.S. maintaining a number of stringent export controls, and the next administration expected to keep them in place, perhaps with elevated tariffs on top, it’s a super interesting time to think more about the semiconductor industry. The AI arms race, and the national security due diligence that comes with U.S. exceptionalism, are upon us.

A flurry of Executive Orders from the Biden Administration in its last two weeks in office was telling. Trump and the new administration will be carefully watched and their actions scrutinized. Meanwhile, I’ve long admired the work of Eric Flaningam for his macro overviews of various aspects of technology stacks. Let’s feature some of them here:

⚗️ Generative Value 🌍

His newsletter, Generative Value, provides great insights into how everything is connected.

A Deep Dive on Inference Semiconductors

A Primer on Data Centers

A Primer on AI Datacenters

Nvidia: Past, Present and Future

Whether you are an investor, a technologist, or just a casual reader, his overviews will provide you some value and are easy to understand and scan.

As you know, the Biden administration has implemented a series of executive orders (EOs) and export controls (ECs) aimed at regulating the semiconductor industry, particularly in response to national security concerns and competition with China. Trump has said various things with regard to tariffs on China as well. The U.S. appears to be trying to control how AI spreads to other nations, for example by limiting China’s access to the best Nvidia AI GPUs and chips.

Jake Sullivan — with three days left as White House national security adviser, with wide access to the world's secrets — called on journalists and news media to deliver a chilling, "catastrophic" warning for America and the incoming administration:

The AI Arms Race circa 2025

What happens from this point on is fundamentally a new world of innovation, and of competition in innovation.

“The next few years will determine whether artificial intelligence leads to catastrophe — and whether China or America prevails in the AI arms race.”

According to Sullivan, as reported by Axios, “AI development sits outside of government and security clearances, and in the hands of private companies with the power of nation-states.”

U.S. failure to get this right, Sullivan warns, could be "dramatic, and dramatically negative — to include the democratization of extremely powerful and lethal weapons; massive disruption and dislocation of jobs; an avalanche of misinformation." It wasn’t clear from his briefing whether OpenAI, Anthropic, Google and others can be expected to “get this right”. The U.S. believes it is the AI leader heading into the new year and new administration.

Clearly, in 2025, corporations and the financial elite with the most say (majority shareholders) have enormous power in the AI arms race ahead. The 2025 to 2035 period will be an incredible decade of datacenters, semiconductors and a sprawling new landscape related to AI, and arguably the most important decade in the history of innovation that human civilization has ever witnessed.

Geopolitics aside, the semiconductor industry is becoming far more important as datacenters expand and new AI capabilities emerge. I will be covering the semiconductor industry more closely in 2025 in this and related publications.

But how does it all work? What are the companies involved? Why are companies like Nvidia, TSMC, ASML and others so pivotal? What about the big picture and landscape?

The AI Semiconductor Landscape

By Eric Flaningam, December 2024.

Hi, my name’s Eric Flaningam, I’m the author of Generative Value, a technology-focused investment newsletter. My investment philosophy is centered around value. I believe that businesses are valued based on the value they provide to customers, the difference between that value & the value of competitors, and the ability to defend that value over time. I also believe that technology has created some of the best businesses in history and that finding those businesses will lead to strong returns over time. Generative Value is the pursuit of those businesses.

1. Introduction

Nvidia’s rise in the last 2 years will go down as one of the great case studies in technology.

Jensen envisioned accelerated computing back in 2006. As he described at a commencement speech in 2023, “In 2007, we announced [released] CUDA GPU accelerated computing. Our aspiration was for CUDA to become a programming model that boosts applications from scientific computing and physics simulations, to image processing. Creating a new computing model is incredibly hard and rarely done in history. The CPU computing model has been the standard for 60 years, since the IBM System 360.”

For the next 15 years, Nvidia executed on that vision.

With CUDA, they created an ecosystem of developers using GPUs for machine learning. With Mellanox, they became a (the?) leader in data center networking. They then integrated all of their hardware into servers to offer vertically integrated compute-in-a-box.

When the AI craze started, Nvidia was the best-positioned company in the world to take advantage of it: a monopoly on the picks and shovels of the AI gold rush.

That led to the rise of Nvidia as one of the most successful companies ever to exist.

With that rise came competition, including from its biggest customers. Tens of billions of dollars have flowed into the ecosystem to take a share of Nvidia’s dominance.

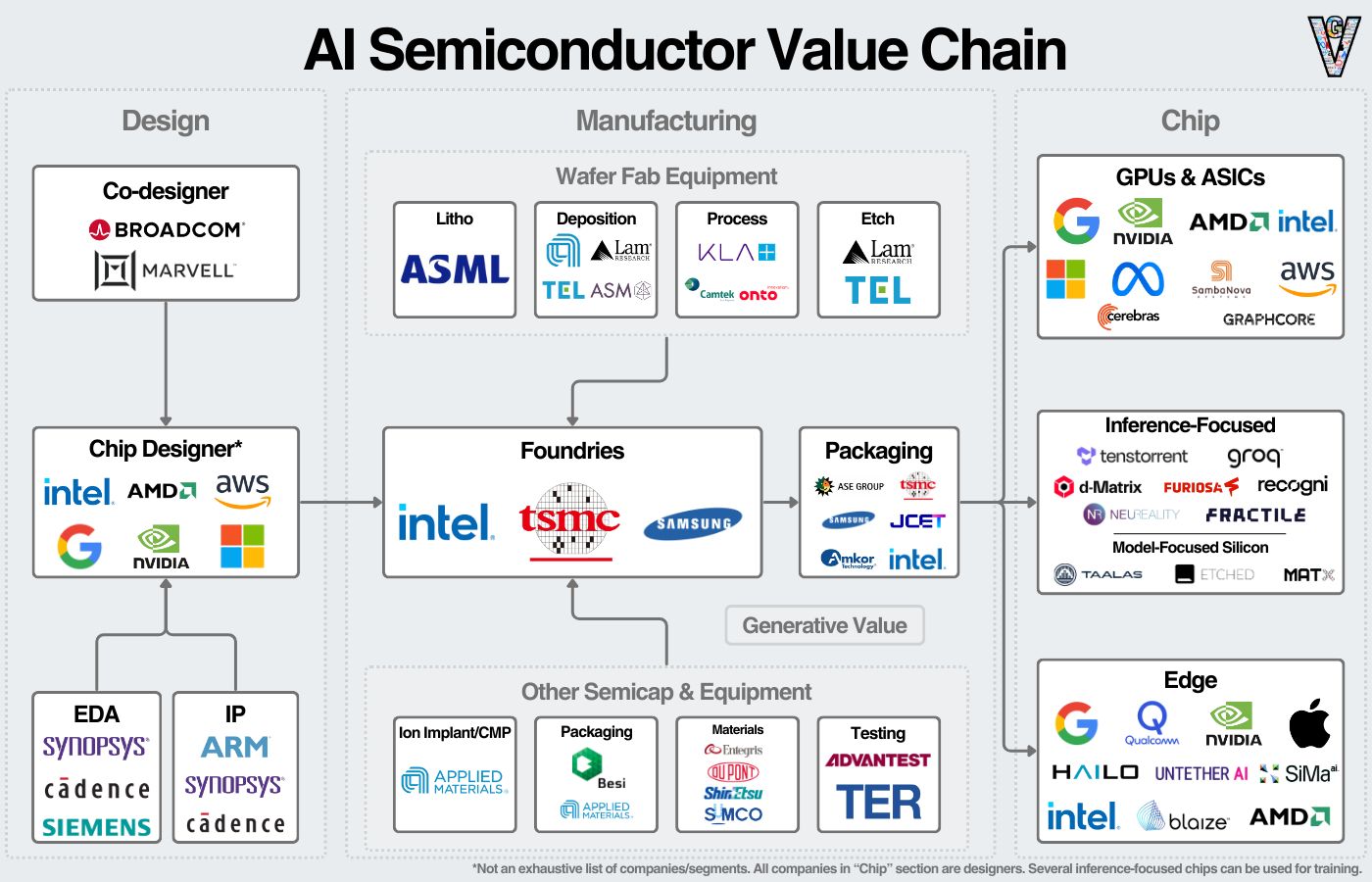

This article will be a deep dive into that ecosystem today and what it may look like moving forward. A glimpse at how we map out the ecosystem before we dive deeper:


A mental model for the AI semiconductor value chain. The graphic is not exhaustive of companies and segments.

2. An Intro to AI Accelerators

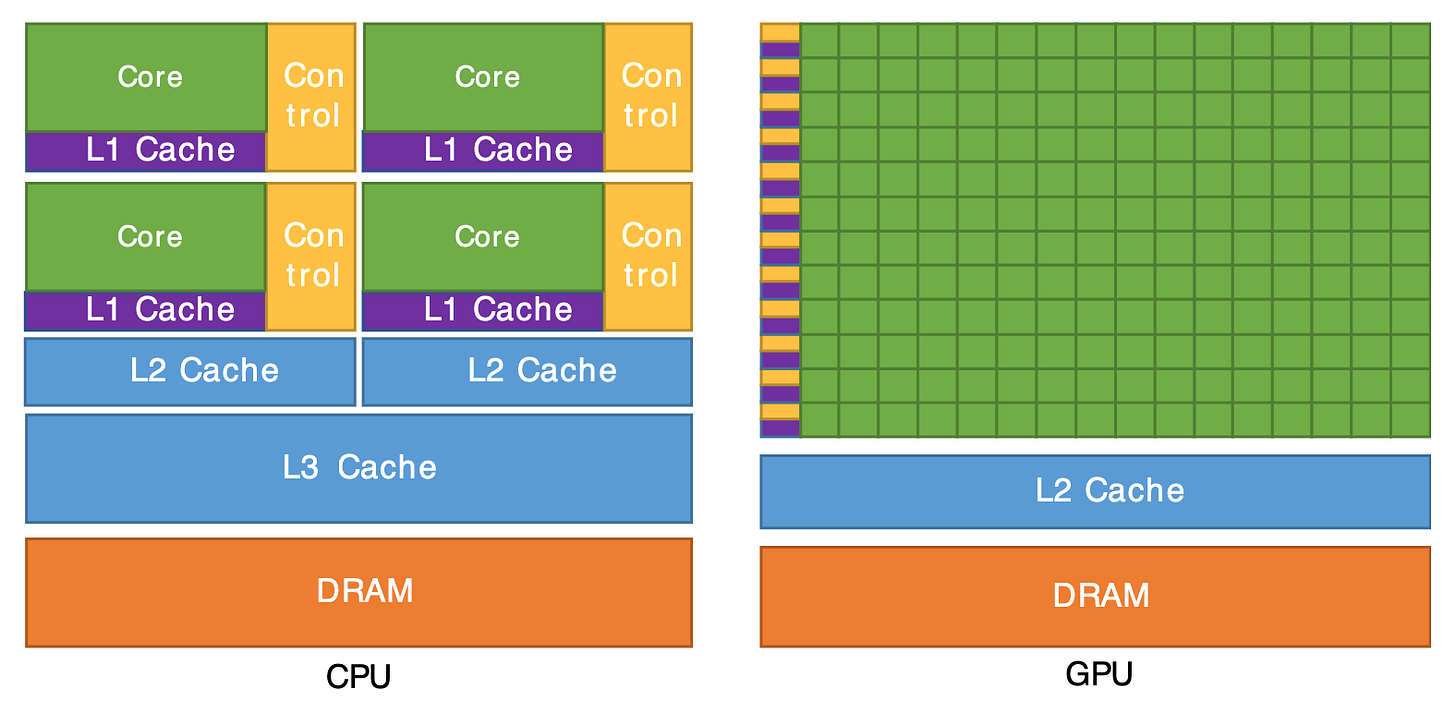

At a ~very~ high level, all logic semiconductors have the following pieces:

Computing Cores - run the actual computing calculations.

Memory - stores data to be passed on to the computing cores.

Cache - temporarily stores data that can quickly be retrieved.

Control Unit - controls and manages the sequence of operations of other components.

Traditionally, CPUs are general-purpose computers. They’re designed to run any calculation, including complex multi-step processes. As shown below, they have more cache, more control units, and far fewer, larger cores (Arithmetic Logic Units or ALUs in CPUs).

Source: https://cvw.cac.cornell.edu/gpu-architecture/gpu-characteristics/design

On the other hand, GPUs are designed for many small calculations or parallel processing. Initially, GPUs were designed for graphics processing, which needed many small calculations to be run simultaneously to load displays. This fundamental architecture translated well to AI workloads.

Why are GPUs so good for AI?

The base unit of most AI models is the neural network, a series of layers with nodes in each layer. These neural networks represent scenarios by weighting each node so that the network most accurately represents the data it's being trained on.

Once the model is trained, new data can be given to the model, and it can predict what the output should be (inference).

This “passing through of data” requires many, many small calculations in the form of matrix multiplications [(one layer, its nodes, and weights) times (another layer, its nodes, and weights)].

This matrix multiplication is a perfect application for GPUs and their parallel processing capabilities.
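To make the "many small calculations" point concrete, here is a minimal sketch of a forward pass through a toy two-layer network in plain Python. The layer sizes, the weights, and the helper names (`matmul`, `relu`, `forward`) are all made up for illustration; real models use optimized libraries and far larger matrices.

```python
# Toy forward pass: each layer is one matrix multiplication followed by a
# simple non-linearity. All sizes and weight values here are invented.

def matmul(a, b):
    """Multiply matrix a (n x k) by matrix b (k x m), both lists of rows."""
    # Every output cell is an independent sum of products, which is why
    # this work spreads so naturally across thousands of GPU cores.
    return [[sum(a[i][t] * b[t][j] for t in range(len(b)))
             for j in range(len(b[0]))]
            for i in range(len(a))]

def relu(m):
    """Replace negative entries with zero."""
    return [[max(0.0, x) for x in row] for row in m]

def forward(batch, layers):
    """Pass a batch of inputs through each weight matrix in turn."""
    activations = batch
    for weights in layers:
        activations = relu(matmul(activations, weights))
    return activations

inputs = [[1.0, 2.0],          # two examples, two features each
          [0.5, -1.0]]
w1 = [[1.0, 0.0, -1.0],        # layer 1: 2 inputs -> 3 nodes
      [0.5, 1.0, 2.0]]
w2 = [[1.0], [1.0], [1.0]]     # layer 2: 3 nodes -> 1 output

outputs = forward(inputs, [w1, w2])
print(outputs)  # [[7.0], [0.0]]
```

Even this tiny example needs 18 multiplications. None of the output cells within a layer depends on another, so a GPU can compute them all at the same time.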

(Stephen Wolfram has a wonderful article about how ChatGPT works.)

The GPU today

GPUs continue to get larger, with more computing power and memory, and they are more specialized for matrix multiplication workloads.

Let’s look at Nvidia’s H100 for example. It consists of CUDA and Tensor cores (basic processors), processing clusters (collections of cores), and high-bandwidth memory. The H100’s goal is to process as many calculations as possible, with as much data flow as possible.

Source: https://resources.nvidia.com/en-us-tensor-core

The goal is not just chip performance but system performance. Outside of the chip, GPUs are connected to form computing clusters, servers are designed as integrated computers, and even the data center is designed at the systems level.

Training vs Inference

To understand the AI semiconductor landscape, we have to take a step back to look at AI architectures.

Training iterates through large datasets to create a model that represents a complex scenario, and inference provides new data to that model to make a prediction.
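As a rough illustration of the split, the sketch below fits a one-parameter model with simple gradient descent and then uses it for a prediction. The dataset, learning rate, and function names (`train`, `infer`) are assumptions chosen for the example, not anything from a real training stack.

```python
# Training: loop over the data many times, nudging the weight to reduce error.
# Inference: a single cheap calculation using the weight that training found.

def train(samples, learning_rate=0.05, epochs=200):
    """Fit y = weight * x by stochastic gradient descent on squared error."""
    weight = 0.0
    for _ in range(epochs):
        for x, y in samples:
            error = weight * x - y
            # Gradient of 0.5 * error**2 with respect to weight is error * x.
            weight -= learning_rate * error * x
    return weight

def infer(weight, x):
    """Apply the trained model to a new input."""
    return weight * x

training_data = [(1.0, 2.0), (2.0, 4.0), (3.0, 6.0)]  # hidden rule: y = 2x
weight = train(training_data)        # 600 update steps over the dataset
prediction = infer(weight, 10.0)     # one multiplication
print(round(weight, 3), round(prediction, 3))
```

The asymmetry is the point. Training touched every sample 200 times, while inference is a single pass, which is part of why the two workloads put such different demands on hardware.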

A few key differences are particularly important with inference:

Latency & Location Matter - Since inference runs workloads for end users, speed of response matters, meaning running at the edge or in edge cloud environments can make more sense for inference than it does for training. In contrast, training can happen anywhere.

Reliability Matters (A Little) Less - Training a leading-edge model can take months and requires massive training clusters. The interdependence of training clusters means mistakes in one part of the cluster can slow down the entire training process. With inference, the workloads are much smaller and less interdependent; if a mistake occurs, only one request is affected and can be rerun quickly.

Hardware Scalability Matters Less - One of the key advantages for Nvidia is its ability to scale larger systems via its software and networking advantages. With inference, this scalability matters less.

Combined, these reasons help explain why so many new semiconductor companies are focused on inference. It’s a lower barrier to entry.

Nvidia's networking and software allow it to scale to much larger, more performant, and more reliable training clusters.
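The reliability point can be sketched with some back-of-the-envelope probability. Assuming, purely for illustration, that each GPU fails independently with the same small daily probability, the chance that at least one device in a cluster fails grows quickly with cluster size. The failure rate and cluster size below are hypothetical, not measured figures.

```python
# Chance that at least one of N independent devices fails:
#   P(any failure) = 1 - (1 - p) ** N
# Both p and N are hypothetical values chosen for illustration.

def chance_of_any_failure(num_gpus, per_gpu_failure_prob):
    """Probability that one or more GPUs fail, assuming independence."""
    return 1.0 - (1.0 - per_gpu_failure_prob) ** num_gpus

p = 0.0001  # assumed: 0.01% chance that a given GPU fails on a given day

single = chance_of_any_failure(1, p)         # one inference server
cluster = chance_of_any_failure(10_000, p)   # a large training cluster

print(f"single GPU: {single:.4%}")
print(f"10,000-GPU cluster: {cluster:.1%}")
```

Under these assumptions, a single device almost never fails on a given day, while the 10,000-GPU cluster sees at least one failure on roughly 63% of days. A tightly coupled training job has to absorb every one of those failures, whereas an inference request simply gets retried.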

On to the competitive landscape.

3. The AI Semiconductor Landscape

We can broadly look at the AI semiconductor landscape in three main buckets:

Data Center Chips used for Training

Data Center Chips used for Inference

Edge Chips used for Inference

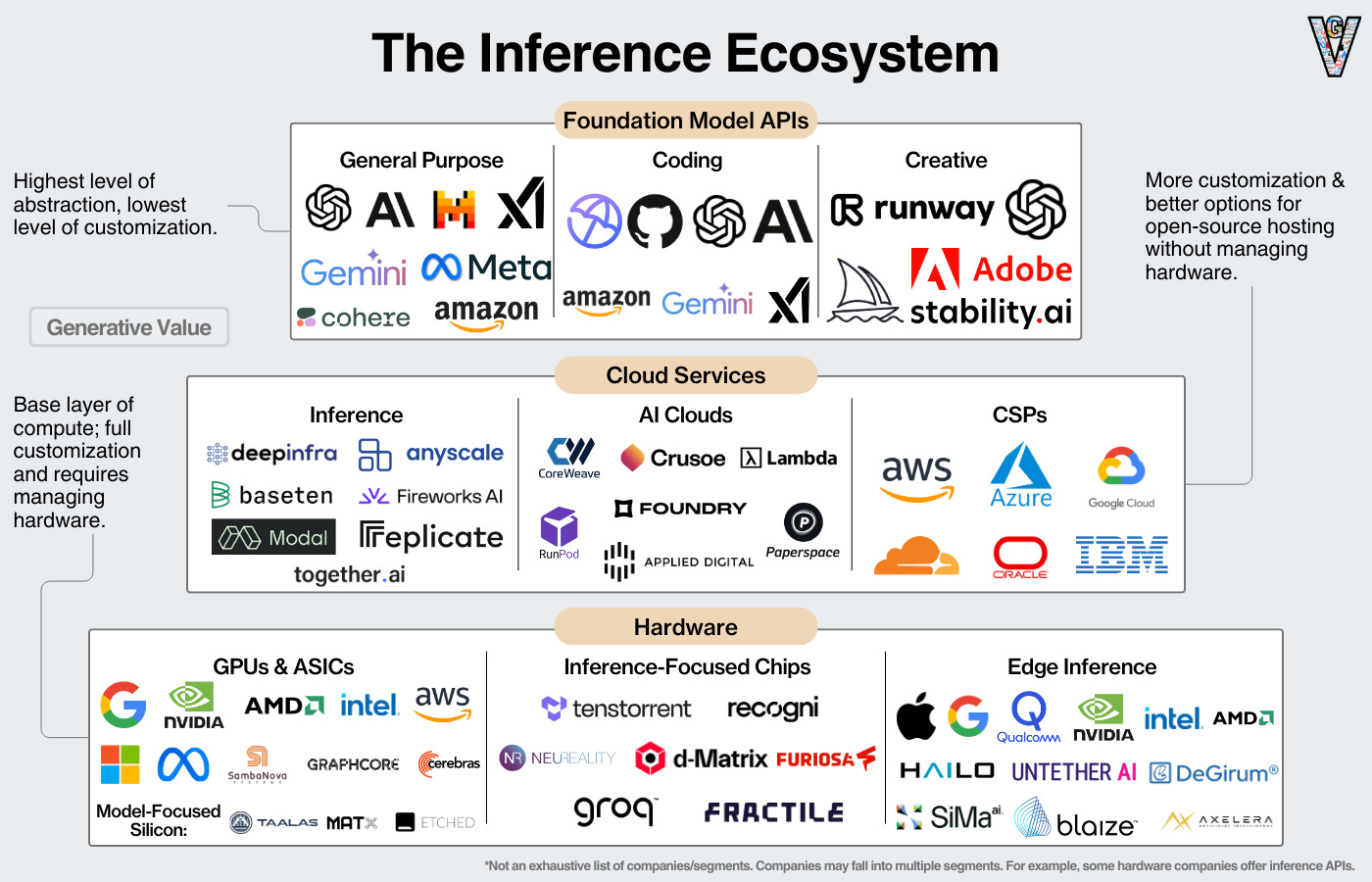

Visualizing some of those companies below:

The Data Center Semiconductor Market

Put simply, Nvidia dominates data center semiconductors, with AMD as the only legitimate general-purpose alternative. The hyperscalers develop in-house chips, and most startups focus on inference or specialized hardware for specific architectures.

Nvidia will sell $100B+ in AI systems in 2024. AMD is in clear second place, expecting $5B in revenue in 2024.

Across all data center processors, we can see market share at the end of 2023 here:

Google offers the most advanced accelerators of the hyperscalers with its TPUs. TechInsights estimated Google shipped 2 million TPUs last year, putting it behind only Nvidia for AI accelerators.

Amazon develops in-house networking chips (Nitro), CPUs (Graviton), inference chips (Inferentia), and training chips (Trainium). TechInsights estimated they “rented out” 2.3M of these chips to customers in 2023.

Microsoft recently announced both a CPU (Cobalt) and an AI accelerator (Maia). They’re too new to show any real traction yet.

Finally, I’ll point out that Intel was initially expected to sell ~$500M worth of its Gaudi 3 this year, but reported on its last earnings call that it will not achieve that target.

Inference provides a more interesting conversation!

4. The Inference Landscape Today

There’s no shortage of options for a company to run inference. From the easiest to manage & least customization to the hardest to manage and most customization, companies have the following options for inference:

Foundation Model APIs - APIs from model providers like OpenAI. The easiest and least flexible option.

Inference Providers - Dedicated inference providers like Fireworks AI and DeepInfra who aim to optimize costs across various cloud and hardware providers. A good option for running and customizing open source models.

AI Clouds - GPUs or inference as a service from companies like Coreweave and Crusoe. Companies can rent compute power and customize to their needs.

Hyperscalers - Hyperscalers offer compute power, inference services, and platforms that companies can specialize models on.

AI Hardware - Companies buy their own GPUs and optimize to their specific needs.

Bonus #1: APIs to AI Hardware - Companies like Groq, Cerebras, and SambaNova have started offering inference clouds allowing customers to leverage their hardware as an inference API. Nvidia acquired OctoAI, an inference provider, presumably to create their own inference offering.

Bonus #2: Inference at the Edge - Apple, Qualcomm, and Intel want to offer hardware and software allowing inference to occur directly on device.

RunPod published an interesting comparison between the Nvidia H100 and the AMD MI300X, and found that the MI300X provides more cost-advantageous inference at very small and very large batch sizes.

We also have several hardware startups that have raised a lot of money to capture a piece of this market:

An interesting trend emerging in this batch of startups is the expansion up stack to software. Three of the most notable startups in the space (Groq, Cerebras, and SambaNova) are all offering inference software. This vertical integration, in theory, should provide cost and performance benefits to end users.

The final piece of the AI semiconductor market that everyone is thinking about is AI at the edge.

5. AI on the Edge?

Training the largest and most capable AI models is expensive and can require access to entire data centers full of GPUs. Once the model is trained, it can be run on less powerful hardware. In fact, AI models can even run on “edge” devices like smartphones and laptops. Edge devices are commonly powered by an SoC (System on a Chip) that includes a CPU, GPU, memory, and often an NPU (Neural Processing Unit).

Consider an AI model on a smartphone. It must be compact enough to fit within the phone's memory, resulting in a smaller, less sophisticated model than large cloud models. However, running locally enables secure access to user-specific data (e.g., location, texts) without transferring it off the device.
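A quick way to see why on-device models must be small is to estimate how much memory the weights alone occupy: parameter count times bits per parameter. The sketch below uses a hypothetical 3-billion-parameter model and a hypothetical 4 GB memory budget. It ignores activations and other runtime overhead, so real requirements would be higher.

```python
# Weight memory = parameters * bits per parameter / 8 bits per byte.
# The model size and phone memory budget are hypothetical illustrations.

def model_size_gb(num_parameters, bits_per_parameter):
    """Approximate memory for model weights, in gigabytes (1 GB = 1e9 bytes)."""
    return num_parameters * bits_per_parameter / 8 / 1e9

def fits_on_device(num_parameters, bits_per_parameter, memory_budget_gb):
    """True if the weights alone fit in the given memory budget."""
    return model_size_gb(num_parameters, bits_per_parameter) <= memory_budget_gb

PARAMS = 3e9             # assumed: a 3-billion-parameter model
PHONE_BUDGET_GB = 4.0    # assumed: memory the phone can spare for the model

for bits in (16, 8, 4):
    size = model_size_gb(PARAMS, bits)
    verdict = "fits" if fits_on_device(PARAMS, bits, PHONE_BUDGET_GB) else "too big"
    print(f"{bits}-bit weights: {size:.1f} GB -> {verdict}")
```

With these numbers, the 16-bit version (6 GB) does not fit, while the 8-bit (3 GB) and 4-bit (1.5 GB) versions do. That is the basic trade behind small, compressed models at the edge.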

The AI model could technically run on the phone’s CPU, but matrix multiplication is better suited to parallel processors like GPUs. Since CPUs are optimized for sequential processing, this can lead to slower inference, even for small models. Running inference on the phone’s GPU is an alternative.

However, smartphone GPUs are primarily designed for graphics. When gaming, users expect full GPU performance for smooth visuals; simultaneously allocating GPU resources to AI models would likely degrade the gaming experience.

NPUs are processors tailored for AI inference on edge devices. A smartphone can use the NPU to run AI workloads without straining the GPU or CPU. Given that battery life is crucial for edge devices, NPUs are optimized for low power consumption. AI tasks on the NPU may draw 5–10x less power than the GPU, making them far more battery-friendly.

Edge inference is applied in sectors like industrial and automotive, not just consumer devices. Autonomous vehicles rely on edge inference to process sensor data onboard, allowing the quick decisions needed to maintain safety. In industrial IoT, local AI inference of sensor and camera data enables proactive measures like predictive maintenance.

With more available power than consumer devices, industrial and automotive applications can deploy high-performance computing platforms, like Nvidia’s Orin platform, featuring a GPU similar to those in data centers. Use cases that benefit from remote hardware reprogrammability can leverage FPGAs, for example from Altera.

6. Final Thoughts on the Market

To end with some musings on the market, I’ll touch on three questions I find most interesting in the space:

How deep is Nvidia’s moat?

Nvidia has sustained a 90%+ market share in data center GPUs for years now. They’re a visionary company that has made the right technical and strategic moves over the last two decades. The most common question I hear asked in this market is about Nvidia’s moat.

To that, I have two conflicting points: First, I think Nvidia is making all the right moves, expanding up the stack into AI software, infrastructure, models, and cloud services. They’re investing in networking and vertical integration. It’s incredible to see them continue to execute.

Secondly, everyone wants a piece of Nvidia’s revenue. Companies are spending hundreds of billions of dollars in the race to build AGI. Nvidia’s biggest customers are investing billions to lessen their reliance on Nvidia, and investors are pouring billions into competitors in the hopes of taking share from Nvidia.

Summarized: Nvidia is the best-positioned AI company on the planet right now, and there are tens of billions of dollars from competitors, customers, and investors trying to challenge them. What a time to be alive.

What’s the opportunity for startups?

As Paul Graham said, “competing against Nvidia is an uphill climb.” When you’re competing against Nvidia, that feels extra true. That said, there’s always a trade-off between generality and specificity. Companies that can effectively specialize in large enough markets can create very large businesses. This includes both inference-specific hardware and model-specific hardware. But semiconductors are hard, and it will take time and several generations for these products to mature.

I’m particularly interested in approaches that can enable faster development of specialized chips. This helps lower the barrier to entry of chip development while taking advantage of the performance benefits of specialization.

Will we get AI at the edge?

If we look at the history of disruption, it occurs when a new product offers less functionality at a much lower price that an incumbent can’t compete with. Mainframes gave way to minicomputers, minicomputers gave way to PCs, and PCs gave way to smartphones.

The key variable that opened the door for those disruptions was an oversupply of performance. Top-end solutions solved problems that most people didn’t have. Many computing disruptions came from decentralizing computing because consumers didn’t need the extra performance.

With AI, I don’t see that oversupply of performance yet. ChatGPT is good, but it’s not great yet. Once it becomes great, then the door is opened for AI at the edge. Small language models and NPUs will usher in that era. The question then becomes when, not if, AI happens at the edge.

Visit Generative Value’s top posts here.

🔮 Generative Value Articles

SpaceX: The Story of Space Disruption

The Current State of AI Markets

Nuclear's AI Opportunity

A Primer on Data Warehouses

AI Data Centers, Part 2: Energy

And so many more good topics.
