Nvidia is the Fish that Learned to Drive a Bicycle

Deep dive on Nvidia's value, AMD's latest AI chip and the semiconductor era of AI.

For those of you who are investors in AI chip companies and in Nvidia in particular, this one is for you.

How did Nvidia go from making hardware for gaming and Bitcoin to the most powerful AI chip maker in just a few years? Nvidia's evolution from a graphics card company to the backbone of the AI revolution is one of the most remarkable business transformations in tech history.

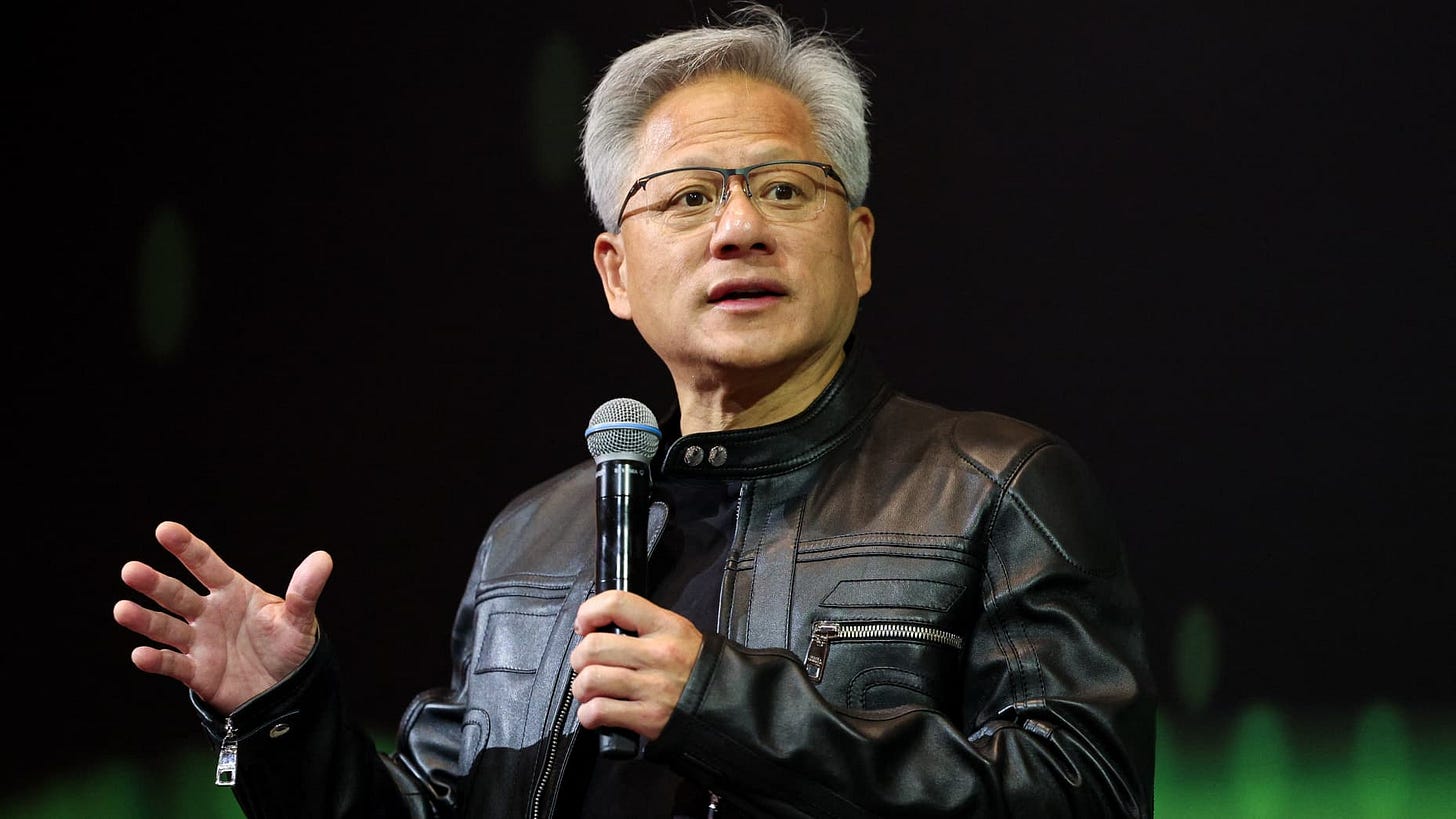

Nvidia was founded in 1993 by three engineers - Jensen Huang, Chris Malachowsky, and Curtis Priem - who met at a Denny's restaurant in San Jose. One of the most critical decisions in Nvidia's transformation was launching CUDA (Compute Unified Device Architecture) in 2006. This parallel computing platform allowed developers to use Nvidia's GPUs for general-purpose computing beyond graphics. For roughly a decade, Wall Street questioned Nvidia's investment in CUDA, valuing it at essentially $0 in Nvidia's market cap.

Well, not anymore. Nvidia's market cap today stands at $3.46 trillion. Five months on, the DeepSeek scare in the stock market is history: Nvidia’s stock is in the green for the year, and it has overtaken Microsoft yet again to become the most valuable company in the world. Nvidia is also the company that best defines the Generative AI era. Along with TSMC, Nvidia is at the center of the semiconductor and AI chip revolution that makes today's AI datacenters and LLMs possible, along with every application like ChatGPT and whatever comes next.

How do you describe their financial trajectory, or even begin to value the stock, in a world where demand for AI compute is so hard to keep up with?

Demand for AI Compute and AI chips is insatiable

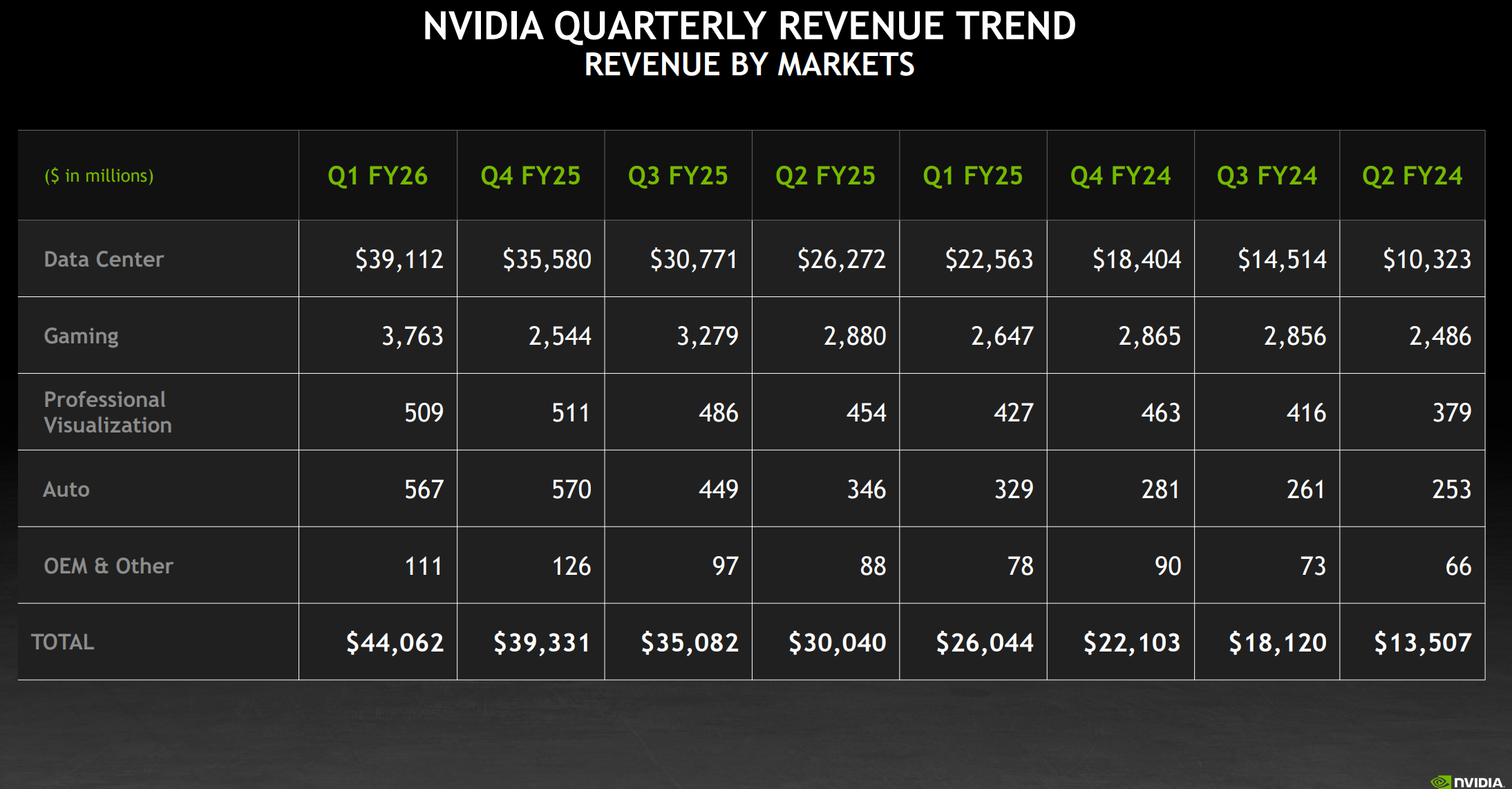

Nvidia’s revenue grew 69% year over year in the last quarter, and sales in the company’s data center division, which includes AI chips and related parts, grew 73%.

Considering Nvidia’s growth in the last few years, it’s remarkable that they are still growing at this rate; Nvidia might be the key generational company of our times.

Hardware and semiconductors have taken on greater importance in the era of Generative AI. Reasoning models and growing inference demand mean Nvidia’s chips are the key. It’s getting difficult for anyone to catch up.

Nvidia's estimated market share in the datacenter and AI chip market is high, very high. In June 2024, Mizuho Securities estimated that Nvidia controls between 70% and 95% of the market for AI chips used for training and deploying models like OpenAI’s GPT. In 2025, I think we can roughly say that Nvidia dominates the AI chip market with over 80% share, and it plans to invest up to $500 billion in U.S. AI infrastructure over the next four years.

Its R&D is years ahead of the competition and its partnership with TSMC is very close. Nvidia even plans a global HQ in Taipei. Recent deals with the Middle East and Europe make Nvidia look like the present and future of AI infrastructure rollouts. Many believe Nvidia will be the first company to reach a market valuation of over $4 trillion.

I asked Nicolas Baratte of TechStock01, a financial and technology analyst who mostly covers Global Semiconductors and the Asian supply chain, for his analysis of Nvidia’s tremendous moment in 2025.

Nvidia has recently struck huge deals with the Middle East and now with Europe. On his recent trip, Jensen Huang convinced his audience that Nvidia is the company that can help Europe build its artificial intelligence infrastructure, so the region can take control of its own destiny with this transformative technology.

As staggering as Nvidia’s role in the future of AI infrastructure and in the Sovereign AI agendas of many countries has become, and as gigantic as its lead in AI chips is, it is not unchallenged: we’ve written about Huawei (Grace Shao), and now AMD has a new chip.

AMD’s new AI Chip

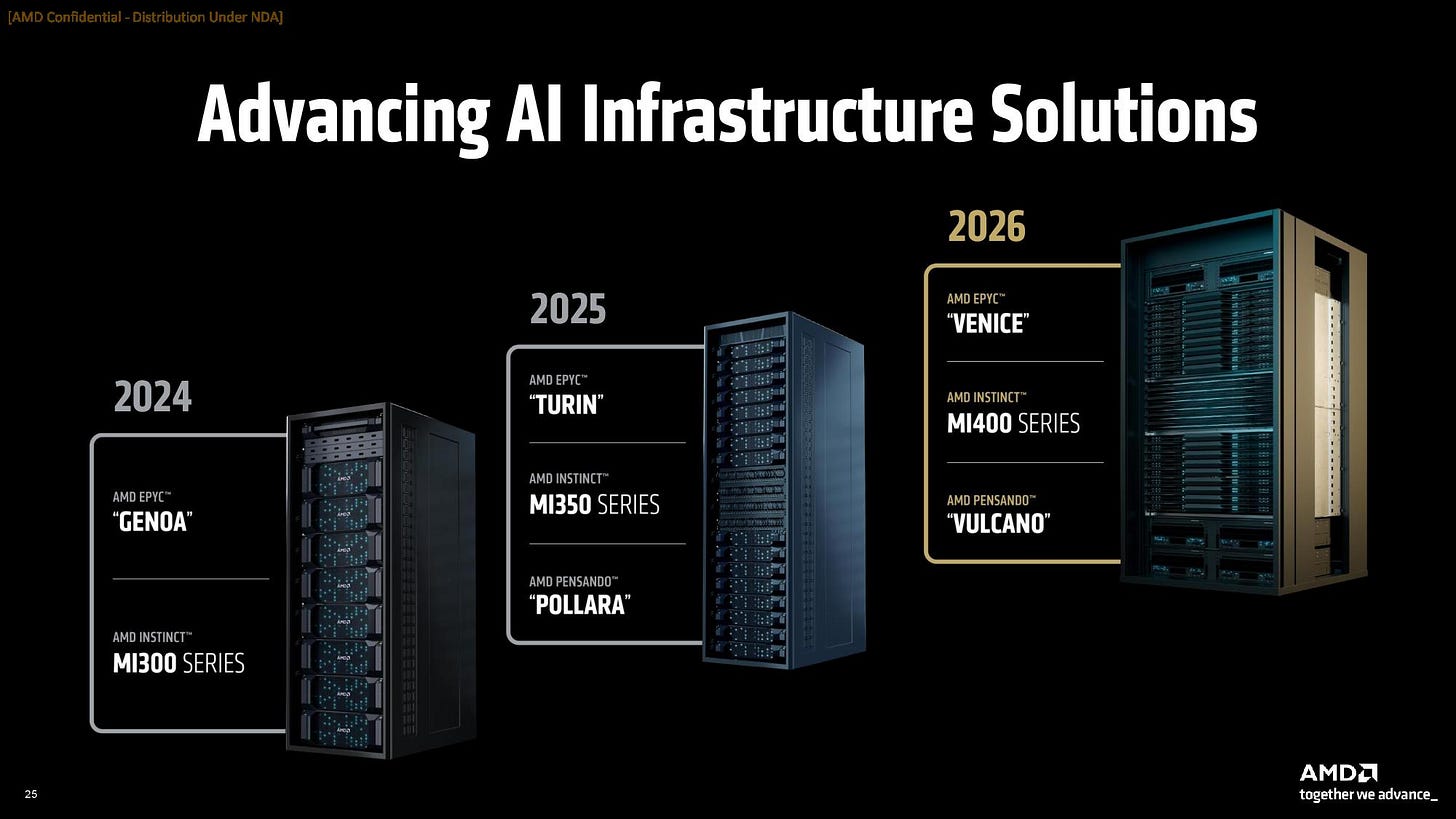

On Thursday, June 12, AMD unveiled new details about its next-generation AI chips, the Instinct MI400 series, which will ship in 2026.

AMD says the MI350 Series, consisting of both Instinct MI350X and MI355X GPUs and platforms, delivers a 4x generation-on-generation AI compute increase and a 35x generational leap in inferencing, paving the way for transformative AI solutions across industries. The chips can be used as part of a “rack-scale” system, AMD said. That’s important for customers that want “hyperscale” clusters of AI computers that can span entire data centers.

AMD also previewed its next-generation AI rack, called “Helios.” It will be built on next-generation AMD Instinct MI400 Series GPUs, “Zen 6”-based AMD EPYC “Venice” CPUs and AMD Pensando “Vulcano” NICs. Compared to the previous generation, the MI400 Series GPUs are expected to deliver up to 10x more performance running inference on Mixture of Experts models.

AMD says Instinct MI400X GPU is 10X faster than MI300X, will power Helios rack-scale system with EPYC 'Venice' CPUs

AMD’s flagship Instinct MI400-series AI GPU roughly doubles performance from its Instinct MI355X and increases memory capacity by 50% and bandwidth by more than 100%. While Nvidia leads, AMD and Huawei are making rapid progress in their own right.

Jensen Huang knows that export controls on Nvidia chips have effectively ceded the Chinese market to Huawei/SMIC. Huang has said that U.S. chip restrictions on China have failed to achieve their goals, and he’s given some really fantastic interviews on this topic in 2025.

A US$400 Billion Market

In May, IDTechEx forecast that by 2030, the deployment of AI data centers, the commercialization of AI, and the increasing performance requirements of large AI models will push the already soaring AI chip market to over US$400 billion.

Nvidia's annual revenue for fiscal 2024 was $60.922B, a 125.85% increase from fiscal 2023. And as LLMs exploded, so did its revenue: for the fiscal year ending January 26, 2025, Nvidia's annual revenue was $130.5 billion, an increase of 114% over the previous year. They don’t really have a peer competitor in AI chips.
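The growth percentages in this paragraph can be checked with a few lines of Python. This is a minimal sketch that uses only the two revenue figures quoted above; the helper names `growth_pct` and `implied_previous` are hypothetical, and backing out fiscal 2023 revenue from the 125.85% figure is an illustration rather than a reported number.

```python
def growth_pct(previous: float, current: float) -> float:
    """Percentage change from `previous` to `current`."""
    return (current / previous - 1.0) * 100.0


def implied_previous(current: float, pct_increase: float) -> float:
    """Back out the prior-year value from a current value and its % increase."""
    return current / (1.0 + pct_increase / 100.0)


# Revenue figures quoted in the text, in billions of US dollars.
fy2024_revenue = 60.922   # fiscal 2024 (year ended January 2024)
fy2025_revenue = 130.5    # fiscal 2025 (year ended January 26, 2025)

fy2025_growth = growth_pct(fy2024_revenue, fy2025_revenue)
# Illustrative only: infer fiscal 2023 revenue from the stated 125.85% increase.
fy2023_revenue = implied_previous(fy2024_revenue, 125.85)

print(f"FY2025 growth vs FY2024: {fy2025_growth:.1f}%")
print(f"Implied FY2023 revenue: ${fy2023_revenue:.2f}B")
```

On these inputs the growth rounds to the article's 114%, and the implied fiscal 2023 revenue comes out at roughly $27B.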

Nvidia Foresaw the AI chip Opportunity

One year after the 2017 Google paper that made LLMs possible (“Attention Is All You Need,” which introduced the Transformer architecture), Nvidia launched the GeForce RTX series in 2018, which marked one of the major revolutionary moments for the company. These cards featured dedicated AI processing units (Tensor Cores) and real-time ray tracing capabilities, effectively merging gaming, AI, and advanced graphics rendering. Seven years later, Nvidia’s status in the world is almost unrecognizable 🌟.

Nicolas Baratte writes TechStock01

He’s been a financial analyst since 2000, covering the Technology sector in Asia, and Global Tech in a couple of jobs. Nicolas has worked on the sell-side for JPMorgan, CLSA and Macquarie, and on the buy-side for a Tiger fund and Prudential.

Nicolas has covered Asian semiconductors, Asian hardware and components, and the global Semi supply chain. In addition to stock coverage, he also publishes research on sectors, as that often shows clearer trends and pinpoints inflection points more sharply. Nicolas lives mostly in Taiwan, sometimes in France, and is in New York regularly.

Understanding the semiconductor industry is becoming much more important for grasping how Generative AI and datacenters will scale, especially for investors.

Recent Articles

AMD claims that its new GPU MI350-355 is on par with Nvidia

SK Hynix and Micron HBM4 qualification by Nvidia are a done deal

Broadcom results good. 55-60% AI growth in 2025, same in 2026

Semiconductor Shopping Season Has Arrived

Semiconductor Voices on Substack

Please immerse yourself in the thought leadership of the semiconductor industry. It will make you a better investor and give you a better grounding in what makes AI possible.

Dylan Patel (the GOAT)

SEMI VISION (an Institute in Taiwan, Jett C. and Eddy T.)

Judy Lin 林昭儀 (TechSoda)

Babbage, and many others.

The Nvidia Empire

By N. Baratte, TechStock01, May/June 2025.

Jensen’s wizardry: the Nvidia empire

We have a very good analog to think about the future size and value of AI: Cloud Computing. Cloud revenues increased by 30x from 2011 to 2024.

Like Cloud, AI enables new types of data crunching and new types of applications that are not possible without this tool. As Jensen said: LLMs are cool, but they’re just a text answer to a text query. AI will extend to the physical world, like factories or driving cars. The application domains are much broader than Cloud Computing.

The processor market is split into 1) multi-purpose GPUs from AMD and Nvidia and 2) custom chips from the hyperscalers (AWS, Google, Meta, Microsoft) that only run their internal applications. Nvidia is extremely dominant, with a ~80% revenue share in 2024.

Based on TSMC revenue guidance, we can estimate that TSMC AI revenues will increase by 6.2x from 2024 to 2029, and Nvidia’s data center revenues by 4.2x.
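A multi-year growth multiple is easier to compare once it is converted into a compound annual growth rate (CAGR), computed as multiple^(1/years) - 1. The sketch below applies that formula to the multiples quoted in this section, including the 30x Cloud figure from 2011 to 2024. Counting 2011-2024 as 13 compounding years and 2024-2029 as 5 is an assumption about how the ranges are meant, and `implied_cagr` is a hypothetical helper name.

```python
def implied_cagr(multiple: float, years: int) -> float:
    """Compound annual growth rate implied by growing `multiple`-fold over `years`."""
    return multiple ** (1.0 / years) - 1.0


# (growth multiple, number of compounding years), as read from the text.
scenarios = {
    "Cloud revenues, 2011-2024 (30x)": (30.0, 13),
    "TSMC AI revenues, 2024-2029 (6.2x)": (6.2, 5),
    "Nvidia data center revenues, 2024-2029 (4.2x)": (4.2, 5),
}

for label, (multiple, years) in scenarios.items():
    print(f"{label}: ~{implied_cagr(multiple, years):.0%} per year")
```

Under those assumptions, the 6.2x and 4.2x estimates work out to roughly 44% and 33% a year, compared with about 30% a year for Cloud revenues over 2011-2024.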

Is it too much? That’s not the question. The question is: what are the economics? If $1 spent on GPUs generates $5 of AI Cloud revenue (e.g., AWS), which in turn enables another $5 of application revenue (e.g., Hugging Face), then it will happen. Those are the economics of Cloud Computing today: $1 of Capex supports $10 of applications revenue and $3 of operating profits.
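The per-dollar ratios above scale linearly, which makes them easy to sanity-check on a larger budget. This is a minimal sketch under stated assumptions: reading the $5 of AI Cloud revenue plus the further $5 of application revenue as the $10 of downstream revenue per $1 of capex is an interpretation of the text, the $100B capex figure is purely hypothetical, and `CapexMultipliers` and `downstream_economics` are illustrative names.

```python
from dataclasses import dataclass


@dataclass
class CapexMultipliers:
    """Downstream dollars supported by each $1 of GPU capex (ratios from the text)."""
    cloud_revenue: float = 5.0        # $ of AI Cloud revenue per $1 of GPU spend
    application_revenue: float = 5.0  # further $ of application revenue per $1
    operating_profit: float = 3.0     # $ of operating profit per $1 (Cloud analog)


def downstream_economics(capex: float, m: CapexMultipliers) -> dict:
    """Scale the per-dollar ratios to a given amount of capex (same units as capex)."""
    cloud = capex * m.cloud_revenue
    apps = capex * m.application_revenue
    return {
        "cloud_revenue": cloud,
        "application_revenue": apps,
        # Assumption: the two revenue layers add up to the "$10 per $1" figure.
        "total_downstream_revenue": cloud + apps,
        "operating_profit": capex * m.operating_profit,
    }


# Hypothetical example: $100B of GPU spend.
result = downstream_economics(100.0, CapexMultipliers())
for name, value in result.items():
    print(f"{name}: ${value:,.0f}B")
```

With $100B of hypothetical GPU spend, these ratios imply about $1 trillion of combined cloud and application revenue and $300B of operating profit.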

To conclude with stocks, it’s quite simple. Nvidia and TSMC are very dominant firms without which AI at scale will not happen. Both firms will generate tremendous growth and are trading at reasonable valuations. Buy and hold. The dark horse for the contrarian minds is AMD.

The skepticism over the future of AI is a repeat of not understanding Cloud Computing 15 years ago.

From 2010 to 2020, I was working for a brilliant investment bank where I was the head of Technology research. In 2013, my team and I wrote a big report on the ascendancy of Cloud Computing, and I took a lot of shit for it. The argument was always along these lines: “my on-premise IT works fine, why would I pay a 3rd party to run my applications”.

Editor’s Note:

Other reports:

Nvidia 1Q26: the good times keep rolling, the stock is not expensive

Nvidia’s Jensen at Computex: 3 Big Announcements, and Smaller Ones

Is Huawei's New AI Chip Bad for Nvidia? New US Export Licensing Is the Real Issue (Part 2)

Huawei New AI Processor Ascend 910C and the Long History Behind It (Part 1)

💼 Brief

The deep dive continues at some length and is a great primer for understanding Nvidia’s tremendous importance.

Nvidia’s stock is up 1,433% in the last five years.

So, if you had invested $100 in Nvidia stock in 2020, it would be worth approximately $1,530 today.
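A cumulative percentage gain converts to a money multiple as 1 + gain/100, so a 1,433% gain multiplies an investment by about 15.3x. The minimal sketch below does that arithmetic; it assumes the 1,433% figure is the full price return over the period and ignores dividends, taxes and exact start and end dates. The function names are hypothetical.

```python
def multiple_from_gain(pct_gain: float) -> float:
    """Convert a cumulative % gain into a 'times your money' multiple."""
    return 1.0 + pct_gain / 100.0


def value_after_gain(initial: float, pct_gain: float) -> float:
    """Value of an investment after a cumulative percentage gain."""
    return initial * multiple_from_gain(pct_gain)


five_year_gain_pct = 1433.0  # five-year gain quoted in the text

print(f"Multiple: {multiple_from_gain(five_year_gain_pct):.2f}x")
print(f"$100 becomes about ${value_after_gain(100.0, five_year_gain_pct):,.0f}")
```

On these inputs, $100 grows to roughly $1,533.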

Nicolas has a lot of stock experience and makes some important calculations for us. He also evaluates Nvidia’s current competitors and potential future competitors in the AI chip space.

His many infographics, charts and visuals really allow his analysis to stand out.