Most Impactful Generative AI Papers of 2023

Guest post 🥂: The papers that shaped AI research and industry in 2023 and beyond, from Meta's LLaMA to Stanford's ControlNet and Microsoft's Orca

☝ Image created by Sahar Mor, January 2024.

Hey Everyone,

I enjoyed reading AI papers in 2023 more than ever, and I hope you did as well! One of the best people to follow for breaking news in Generative A.I. is Sahar Mor. His LinkedIn posts have improved as his newsletter has grown and matured in its coverage over recent months.

Formerly at Stripe, Sahar thinks like a product manager but has the skills of an engineer and researcher, combing through papers and GitHub repos himself. The infographics he creates are also great. AI Tidbits used to focus on news summaries, but he has recently moved into deep dives, and that has made all the difference for me as a reader.

If you value breaking news in 2024, this is the newsletter and the professional to follow. His AI Tidbits 2023 SOTA Report especially impressed me and stood out.

🤔💭 How and Where to Read Summaries of AI Papers?

Sahar has also sent me a ton of recommendations, and I discover many papers through his viral LinkedIn posts and excellent breaking-news summaries. I'm also a huge fan of the machine learning researchers and educators who cover A.I. papers, and I hope their number grows in 2024.

While A.I. papers have become more accessible, they have also become far more numerous, so I'm always on the lookout for succinct summaries.


📑 For AI papers, go to 🚀

AK’s Daily AI Papers at Hugging Face

AK at AK’s Substack

Speaking of the best papers of 2023, here are a few more roundups worth reading.

Ten Noteworthy AI Research Papers of 2023

By Ahead of AI

My Five Favorite AI Papers of 2023

By TheSequence

Top Newsletters for Paper Summaries

Sahar and I decided to co-release this piece this weekend. A guest post by Sahar Mor, January 2024.

🔖 Bookmark this page 🌿

A Look Back at 2023 Machine Learning Papers

Over 1,100 curated announcements and papers were featured in AI Tidbits in 2023. Only a handful of them changed the trajectory of AI research, and they will keep powering the products we use daily in the years to come.

From Meta’s LLaMA to Stanford’s ControlNet and Microsoft’s phi, here are the papers that mattered most, just as 2024 ushers in a fresh wave of groundbreaking discoveries.

Defining "most impactful" is a nuanced task, so I adopted a blend of objective and subjective criteria for selection:

Objective - the paper’s number of citations and GitHub repository stars

Subjective - papers I identified as having a significant influence across various modalities and applications

❄️ January

BLIP-2, Salesforce - a novel pre-training approach for vision-language tasks that bridges a frozen image encoder and a frozen LLM with a lightweight Querying Transformer (Q-Former). It outperformed the then state-of-the-art Flamingo-80B by 8.7% on zero-shot VQAv2 with 54x fewer trainable parameters, ushering in a new wave of vision-language models throughout 2023

InstructPix2Pix, Berkeley - a diffusion model that edits images by following written instructions, enabling further research in image, and later video, editing using natural language

MusicLM, Google - a transformer-based text-to-music model capable of producing tracks across genres, instruments, and concepts with superior audio quality, sparking the imagination of professional and amateur musicians alike and serving as a bedrock for further generative audio research

🌹 February

LLaMA, Meta - a family of open LLMs ranging from 7B to 65B parameters and trained on up to 1.4 trillion tokens. LLaMA-13B outperformed the much larger GPT-3 (175B) on most benchmarks, and LLaMA-65B was competitive with Chinchilla-70B and PaLM-540B, enabling the likes of Alpaca and Vicuna and sending the open-source LLM community off to the races

ControlNet, Stanford - a groundbreaking architecture that adds spatial conditioning, such as edge maps, depth maps, and human poses, to large pretrained text-to-image diffusion models, offering fine-grained controllability and wide-ranging applicability

Toolformer, Meta - a language model that teaches itself which external tools, such as a calculator or Wikipedia search, to call, when to call them, and with which arguments, in order to generate more accurate answers

KOSMOS-1, Microsoft - a transformer-based multimodal LLM supporting a wide range of perception tasks such as image captioning, visual question answering, and zero-shot image classification

The state of the art today compared with December 2022 across generative AI verticals. From LLMs to generative video, image, and audio, the generative AI space leapfrogged in 2023 across both commercial companies and the open-source community.

AI Tidbits 2023 SOTA Report

🍀 March

Alpaca, Stanford - one of the first projects to distill a large proprietary model into a smaller instruction-following one: LLaMA 7B fine-tuned on 52K instruction-following demonstrations generated with OpenAI's text-davinci-003

PaLM-E, Google - a 562B-parameter embodied multimodal model that injects continuous sensor inputs, such as images and robot states, into a language model's embedding space, achieving strong performance in both robotic planning and vision-language tasks

GPTs are GPTs, OpenAI - an analysis of how LLMs could affect the American workforce, estimating that around 80% of U.S. workers could have at least 10% of their work tasks affected, and about 19% could see at least half of their tasks affected

🌷 April

Segment Anything, Meta - the Segment Anything project, which includes both the Segment Anything Model (SAM) and the SA-1B dataset of over 1 billion masks on 11 million images, revolutionized image segmentation and became the bedrock for further novel papers like EdgeSAM, MobileSAM, and EfficientSAM

Generative Agents, Stanford - using language models to power autonomous agents that simulate believable human behavior in a sandbox town; alongside projects like AutoGPT and BabyAGI, it helped spark a wave of agent-powered applications

Pythia, EleutherAI - one of the first fully open-source families of language models: a suite of 16 LLMs from 70M to 12B parameters, all trained on the same public data in the same order with intermediate checkpoints released, facilitating research into training dynamics, memorization, and bias

Vicuna, LMSYS - Vicuna-13B is a LLaMA model fine-tuned on user-shared ChatGPT conversations that achieved outstanding performance, reportedly competitive with ChatGPT and Bard, at a training cost of just $300

LLaVA, UW-Madison and Microsoft - one of the first capable large multimodal models and the seed for further innovative research like LLaVA-1.5, inspiring other open vision-language models such as Qwen-VL

🐝 May

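QLoRA, University of Washington - QLoRA fine-tunes large language models by backpropagating through a frozen, 4-bit quantized model into small low-rank adapters, cutting memory usage enough to fine-tune a 65B model on a single 48GB GPU while preserving full 16-bit fine-tuning performance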
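QLoRA's adapters are standard LoRA adapters: the pretrained weight W stays frozen and only a low-rank update B·A is trained. Below is a minimal pure-Python sketch of the LoRA forward pass on toy matrices. It deliberately omits QLoRA's own contributions (4-bit NormalFloat storage of W, double quantization, paged optimizers), and the helper names are illustrative rather than taken from the paper's code.

```python
def matvec(m, v):
    """Multiply matrix m (a list of rows) by vector v."""
    return [sum(a * b for a, b in zip(row, v)) for row in m]


def lora_forward(w_frozen, a, b, x, alpha, r):
    """Compute W x + (alpha / r) * B (A x).

    w_frozen: d_out x d_in pretrained weight, never updated during fine-tuning
    a: r x d_in down-projection and b: d_out x r up-projection (the trainable parts)
    """
    base = matvec(w_frozen, x)
    update = matvec(b, matvec(a, x))
    scale = alpha / r
    return [h + scale * u for h, u in zip(base, update)]


# Toy example: d_in = d_out = 2, rank r = 1.
W = [[1.0, 0.0], [0.0, 1.0]]
A = [[0.5, 0.5]]          # 1 x 2, randomly initialized in LoRA
B_init = [[0.0], [0.0]]   # 2 x 1, LoRA initializes B to zero...
x = [2.0, 4.0]
print(lora_forward(W, A, B_init, x, alpha=16, r=1))  # ...so the output equals W x: [2.0, 4.0]
```

Zero-initializing B means fine-tuning starts exactly from the pretrained model, and only the r·(d_in + d_out) adapter values receive gradients.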

DragGAN, Max Planck Institute - a model that took the internet by storm with its ability to manipulate the pose, shape, expression, and layout of GAN-generated images simply by dragging handle points toward target points

Direct Preference Optimization (DPO), Stanford - a simpler alternative to RLHF that fine-tunes language models directly on pairs of preferred and rejected responses with a classification-style loss, with no separate reward model or reinforcement learning loop, matching or surpassing PPO-based RLHF in the paper's experiments

Gorilla, Berkeley - a fine-tuned LLaMA model built specifically for writing API calls, which outperformed GPT-4 on the authors' APIBench benchmark and paved the way for further research such as ToolLLM and AutoGen

Voyager, Nvidia - an LLM-powered Minecraft agent that made the rounds thanks to its approach to lifelong learning: it writes new skills as code, stores them in a skill library, and retrieves them when needed

Med-PaLM 2, Google - Med-PaLM 2 was the first LLM to perform at an expert test-taker level on MedQA (US Medical Licensing Examination-style questions), reaching 85%+ accuracy. It was also the first AI system to reach a passing score on the MedMCQA dataset of Indian medical exam questions, scoring 72.3%
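For a single preference pair, the DPO objective is -log σ(β[(log πθ(y_w|x) - log π_ref(y_w|x)) - (log πθ(y_l|x) - log π_ref(y_l|x))]), where y_w is the preferred response, y_l the rejected one, π_ref a frozen reference model, and β controls how far the policy may drift from it. A minimal sketch of that per-pair loss, assuming the summed response log-probabilities have already been computed by the two models; the function names are illustrative.

```python
import math


def softplus(z):
    """Numerically stable log(1 + exp(z))."""
    if z > 0:
        return z + math.log1p(math.exp(-z))
    return math.log1p(math.exp(z))


def dpo_loss(policy_chosen, policy_rejected, ref_chosen, ref_rejected, beta=0.1):
    """DPO loss for one preference pair.

    Each argument is the summed log-probability of a full response:
    'chosen' is the preferred answer, 'rejected' the dispreferred one,
    scored by the trainable policy or by the frozen reference model.
    """
    # Log-ratios: how much more likely each answer became relative to the reference
    chosen_log_ratio = policy_chosen - ref_chosen
    rejected_log_ratio = policy_rejected - ref_rejected
    logits = beta * (chosen_log_ratio - rejected_log_ratio)
    # -log(sigmoid(logits)) is the same as softplus(-logits)
    return softplus(-logits)


# A policy identical to the reference starts at log(2), roughly 0.693.
print(dpo_loss(-12.0, -15.0, -12.0, -15.0))
# Raising the chosen answer's likelihood relative to the reference lowers the loss.
print(dpo_loss(-10.0, -15.0, -12.0, -15.0))
```

The reward model is implicit in the log-ratios, which is why DPO can skip the separate reward-modeling and RL stages.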

🌞 June

MusicGen, Meta - a simple and controllable model that generates music from text prompts and, optionally, an input melody. It was also one of the first audio models widely used by musicians to produce music.

Textbooks Are All You Need (phi-1), Microsoft - this paper showed (again) that high-quality, textbook-like data can let smaller models punch above their weight: the 1.3B-parameter phi-1 outperformed much larger models on coding benchmarks. Since phi-1, Microsoft has released phi-1.5 and phi-2, a 2.7B model rivaling models up to 25x larger.

WizardLM, Microsoft - an influential paper on training with LLM-generated instructions instead of human-written ones: its Evol-Instruct method uses an LLM to iteratively rewrite seed instructions into more complex ones, resulting in multiple powerful models: WizardLM, WizardCoder, and WizardMath

InstructBLIP, Salesforce - one of the first papers to explore vision-language instruction tuning, achieving SOTA zero-shot performance across diverse tasks

LLaMA-Adapter, Shanghai AI Laboratory - one of the first parameter-efficient fine-tuning methods for LLaMA, adding only 1.2M learnable parameters through zero-initialized, gated attention to create high-quality instruction-following models in under an hour, and the basis for further innovative work like LLaMA2-Accessory, LLaMA-Adapter V2 multimodal, and ImageBind-LLM

📜 Top Articles by Sahar Mor of 2023

Sahar has been an operator and a founder in the AI space for more than a decade, most recently at Stripe. He’s the author of AI Tidbits, where he helps AI researchers and builders make sense of the latest in the space.

💼 LinkedIn https://www.linkedin.com/in/sahar-mor/

𝕏-Twitter https://twitter.com/theaievangelist

🍉 July

Llama 2, Meta - Meta’s first LLM released under a commercially permissive license, enabling a host of new SOTA models from Code Llama to OpenHathi, a Hindi LLM

Robotic Transformer 2 (RT-2), Google - a groundbreaking vision-language-action (VLA) model trained on both web and robotics data that outputs robot actions as text tokens, generalizing to objects and instructions absent from its robot training data; it has been cited by subsequent novel papers like RoboGen and JARVIS-1

☀️ August

Code Llama, Meta - a SOTA code model built on top of Llama 2 and released under a commercially permissive license, fine-tuned for generating and discussing code

3D Gaussian Splatting, Inria - radiance field methods have revolutionized novel-view synthesis of captured scenes, and this paper, which represents a scene as millions of 3D Gaussians, was the first to achieve state-of-the-art visual quality with competitive training times while also allowing real-time (≥30 fps) novel-view rendering at 1080p resolution
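Part of what makes rendering fast is that each pixel's color is a simple front-to-back alpha blend of the depth-sorted Gaussians covering it: C = Σ_i c_i α_i Π_{j<i} (1 - α_j). Here is a minimal single-pixel sketch, assuming each splat's α has already been evaluated at the pixel; the projection of 3D Gaussians to 2D, spherical-harmonics colors, and the paper's tile-based GPU rasterizer are all left out, and the early-exit threshold is an assumed value.

```python
def composite_front_to_back(splats, min_transmittance=1e-4):
    """Blend the splats covering one pixel into a single RGB color.

    splats: list of (depth, (r, g, b), alpha), where alpha has already been
    evaluated at this pixel (the Gaussian's opacity times its projected 2D falloff).
    """
    color = [0.0, 0.0, 0.0]
    transmittance = 1.0  # share of light still passing through the nearer splats
    for _, rgb, alpha in sorted(splats, key=lambda s: s[0]):  # nearest first
        weight = alpha * transmittance
        for k in range(3):
            color[k] += weight * rgb[k]
        transmittance *= 1.0 - alpha
        if transmittance < min_transmittance:  # pixel is effectively opaque
            break
    return color


near_red = (1.0, (1.0, 0.0, 0.0), 0.5)
far_blue = (2.0, (0.0, 0.0, 1.0), 0.5)
print(composite_front_to_back([far_blue, near_red]))  # [0.5, 0.0, 0.25]
```

Because the blend is differentiable in every color, opacity, and position parameter, the same computation can be backpropagated through to fit the Gaussians to training photos.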

ModelScope, Alibaba - a powerful and commercially permissible text-to-video model that outperformed existing models with only 1.7B parameters

OpenFlamingo, University of Washington - an open-source alternative to DeepMind's multimodal Flamingo, achieving up to 89% of its vision-language performance

🍂 September

Mistral 7B, Mistral - an open-weights model released under the Apache 2.0 license that outperformed Llama 2 13B on all evaluated benchmarks. Mistral 7B’s edge is its efficiency, delivering strong performance with less computational demand than larger LMs, and it powered a suite of SOTA LLMs such as Solar 10.7B, Zephyr, OpenHermes, and even the multimodal Nous-Hermes-2-Vision

Language Modeling Is Compression, DeepMind - showing that LLMs are powerful general-purpose lossless compressors: Chinchilla 70B, though trained primarily on text, compressed ImageNet patches to 43.4% and LibriSpeech samples to 16.4% of their raw size, beating domain-specific compressors like PNG (58.5%) and FLAC (30.3%) as well as general-purpose ones like gzip

LongLoRA, CUHK and MIT - an efficient fine-tuning method that extends LLMs' context windows by combining LoRA with shifted sparse attention during training, extending Llama 2 7B from a 4k to a 100k-token context on a single 8x A100 machine
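One source of Mistral 7B's efficiency, per its paper, is sliding window attention (SWA): each token attends only to a fixed window of recent tokens instead of the whole prefix, yet stacking layers still lets information flow up to roughly (number of layers × window) tokens back. A minimal sketch of the attention mask, assuming a window that includes the token itself and the previous `window - 1` tokens (implementations differ on the exact boundary convention); the real model uses a 4,096-token window.

```python
def sliding_window_mask(seq_len, window):
    """Return mask[i][j] == True where query position i may attend to key position j.

    Causal (no attending to the future) and limited to the last `window` positions.
    """
    return [[0 <= i - j < window for j in range(seq_len)] for i in range(seq_len)]


for row in sliding_window_mask(5, 3):
    print("".join("x" if allowed else "." for allowed in row))
# x....
# xx...
# xxx..
# .xxx.
# ..xxx
```

Attention cost per token is bounded by the window rather than the sequence length, and the key-value cache can be a fixed-size rolling buffer.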
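The link between prediction and compression is arithmetic coding: with a model that assigns probability p to the symbol that actually comes next, an arithmetic coder spends about -log2 p bits on it, so better predictions mean shorter files. A minimal sketch that only computes this ideal code length, assuming a toy Laplace-smoothed frequency model as a stand-in for an LLM's next-token distribution (the paper plugs real LLMs into an actual arithmetic coder).

```python
import math


def code_length_bits(sequence, predict):
    """Ideal arithmetic-coding cost in bits: sum of -log2 p(symbol | history)."""
    total = 0.0
    for t, symbol in enumerate(sequence):
        probs = predict(sequence[:t])  # model's distribution over the next symbol
        total += -math.log2(probs[symbol])
    return total


ALPHABET = "abcd"


def uniform(history):
    # No model at all: every symbol costs log2(4) = 2 bits
    return {s: 1 / len(ALPHABET) for s in ALPHABET}


def adaptive_counts(history):
    # Laplace-smoothed frequency model: a toy stand-in for an LLM's predictions
    counts = {s: 1 + history.count(s) for s in ALPHABET}
    total = sum(counts.values())
    return {s: c / total for s, c in counts.items()}


text = "aaaaaaab"
print(code_length_bits(text, uniform))          # 16.0
print(code_length_bits(text, adaptive_counts))  # fewer bits: the model predicts the repeats
```

The surprising symbol ("b") is the expensive one; a stronger predictor shrinks the cost of everything it anticipates.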
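LongLoRA's shifted sparse attention (S²-Attn) computes attention only within fixed-size groups of tokens during fine-tuning, and in half of the attention heads it first shifts the tokens by half a group so information can flow across group boundaries; full attention is still used at inference. A minimal sketch of just the grouping, assuming the roll-by-half-a-group scheme from the paper's pseudocode; the function name is illustrative, and a real implementation reshapes query/key/value tensors instead of index lists.

```python
def s2_attn_groups(seq_len, group_size, shifted):
    """List the token indices that attend to each other within each group.

    Unshifted heads use groups [0, G), [G, 2G), ...; shifted heads roll the
    sequence by G // 2 first, so their groups straddle the unshifted boundaries
    (the last group wraps around to the start of the sequence).
    """
    assert seq_len % group_size == 0, "sequence must split evenly into groups"
    order = list(range(seq_len))
    if shifted:
        half = group_size // 2
        order = order[half:] + order[:half]  # same as rolling by -G // 2
    return [order[i:i + group_size] for i in range(0, seq_len, group_size)]


print(s2_attn_groups(8, 4, shifted=False))  # [[0, 1, 2, 3], [4, 5, 6, 7]]
print(s2_attn_groups(8, 4, shifted=True))   # [[2, 3, 4, 5], [6, 7, 0, 1]]
```

Each group's attention costs O(G²), so training cost grows linearly with the number of groups rather than quadratically with the full context length.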

Qwen, Alibaba - this suite of powerful multilingual LLMs, including versatile base models and specialized chat models for coding and mathematics, substantially outperformed Llama 2 models of comparable size on most benchmarks

Large Language Models as Optimizers (OPRO), DeepMind - OPRO took prompt engineering to the next level by using LLMs as optimizers that iteratively propose new prompts based on previously tried prompts and their scores; the best prompts outperformed human-designed ones by up to 8% on GSM8K and by up to 50% on Big-Bench Hard

🎃 October

SteerLM, Nvidia - a technique that enables real-time customization of LLMs during inference, addressing the two major limitations of RLHF: (1) complex training setup and (2) static values that end users cannot control at run-time

Zephyr, Hugging Face - a series of Mistral-based chat models with performance comparable to Anthropic's Claude 2 on AlpacaEval. The key ingredients are distilled supervised fine-tuning (dSFT), which trains a smaller ‘student’ model on outputs from a larger, more capable ‘teacher’ model, followed by distilled direct preference optimization (dDPO) on preference data ranked by a teacher model

MiniGPT-v2, KAUST - a unified interface for diverse vision-language tasks, enhancing image description, visual question answering, and visual grounding

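Set-of-Mark (SoM), Microsoft - a visual prompting technique that boosts the visual grounding of multimodal models like GPT-4V by segmenting an image into regions and overlaying numbered marks on them before passing it to the model, so the model can refer to specific objects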

🍁 November

Orca + Orca 2, Microsoft - Orca was one of the first models to use tailored, high-quality synthetic data to equip smaller LMs with reasoning abilities typically found only in much larger models, learning progressively from GPT-4's complex explanation traces. Orca 2 went further, teaching small language models to choose different reasoning strategies for different tasks and reaching reasoning performance comparable to models 5-10 times its size

CogVLM, Tsinghua University - a novel model combining vision and language features, incorporating a trainable visual expert module, and achieving state-of-the-art performance across various multimodal benchmarks, rivaling larger models like PaLI-X 55B

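Medprompt, Microsoft - demonstrating how a combination of prompting strategies (dynamic few-shot example selection, self-generated chain of thought, and choice-shuffle ensembling) can enable a generalist model like GPT-4 to outperform specialist models like Med-PaLM 2, reaching 90.2% on MedQA without any domain-specific fine-tuning

Latent Consistency Models-LoRA, Tsinghua University - a distillation method, packaged as a plug-in LoRA, that lets Stable Diffusion models generate images in as few as 2 to 8 steps, enabling near real-time image generation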
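Choice-shuffle ensembling, one of Medprompt's three ingredients, asks the model the same multiple-choice question several times with the answer options in different orders and takes a majority vote, which counteracts the model's bias toward particular answer positions. A minimal sketch, assuming a hypothetical `ask_model` callable that returns the index of the chosen option; the number of runs and the seed are illustrative choices, not values from the paper.

```python
import random
from collections import Counter


def choice_shuffle_ensemble(question, options, ask_model, runs=5, seed=0):
    """Majority vote over answers collected with the options in shuffled orders.

    ask_model(question, options) is a hypothetical stand-in for an LLM call that
    returns the index of the option the model picks. Votes are counted on option
    text, so answers from different orderings can be compared.
    """
    rng = random.Random(seed)
    votes = Counter()
    for _ in range(runs):
        shuffled = list(options)
        rng.shuffle(shuffled)
        picked_index = ask_model(question, shuffled)
        votes[shuffled[picked_index]] += 1
    return votes.most_common(1)[0][0]


def toy_model(question, options):
    # Hypothetical stand-in model: finds "gamma" wherever it lands in the list
    return options.index("gamma")


print(choice_shuffle_ensemble("Which option is correct?",
                              ["alpha", "beta", "gamma", "delta"], toy_model))  # gamma
```

A model that answers from content votes consistently across orderings, while a purely position-biased model's votes scatter across options.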

🎄 December

LLM in a flash, Apple - on-device LLMs hold great promise for AI and personal agents. Apple ran models up to twice the size of available DRAM by keeping parameters in flash memory and loading them on demand, achieving 4-5x faster inference on CPU and 20-25x on GPU compared with naive loading. Key techniques include windowing, which reuses neurons activated for recent tokens to cut data transfer, and row-column bundling, which reads larger contiguous chunks from flash.

VideoPoet, Google - a decoder-only LLM that excels at diverse video generation tasks, supporting multimodal inputs, diverse motion and styles, various video orientations, audio generation, and integration with other modalities

FunSearch, DeepMind - in the pursuit of AI capable of scientific breakthroughs, FunSearch represents a pioneering stride. By pairing an LLM that writes programs with an automated evaluator, it discovered cap sets in dimension 8 larger than any previously known, showcasing the power of LLMs for making discoveries in mathematics and computer science.

MagicAnimate, National University of Singapore - MagicAnimate is considered a step change in human image animation thanks to its improved temporal consistency and fidelity over previous models. Its videos are still distinguishable from real footage, but its diffusion framework lays the foundation for progress in this space in 2024
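The windowing idea can be sketched as bookkeeping over sets of neuron IDs: keep in DRAM every feed-forward neuron that was active for any of the last k tokens, load only the newly needed ones from flash, and evict those no longer used by any token in the window. A minimal sketch, assuming the active neuron IDs per token are given directly (in the paper they come from a learned sparsity predictor); the class name is hypothetical.

```python
from collections import deque


class NeuronWindow:
    """Track which FFN neurons stay in DRAM under a sliding window of recent tokens."""

    def __init__(self, window_size):
        # Oldest token's active set drops out automatically once the deque is full
        self.window = deque(maxlen=window_size)

    def resident(self):
        """Neurons active for any token currently in the window."""
        return set().union(*self.window)

    def step(self, active_ids):
        """Register the next token's active neurons; return (to_load, to_evict)."""
        before = self.resident()
        self.window.append(set(active_ids))
        after = self.resident()
        to_load = after - before   # must be read from flash for this token
        to_evict = before - after  # no longer needed by any token in the window
        return to_load, to_evict


window = NeuronWindow(window_size=2)
for token_active in [{1, 2}, {2, 3}, {3, 4}]:
    print(window.step(token_active))
# ({1, 2}, set())
# ({3}, set())
# ({4}, {1})
```

Because consecutive tokens tend to activate overlapping neurons, each step usually transfers only a small incremental set from flash.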
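FunSearch's loop relies on an evaluator that can check and score every program the LLM proposes. For the cap set problem, the core check is whether a set of vectors in F_3^n contains three distinct points on a line, which happens exactly when they sum to zero mod 3 in every coordinate. A minimal sketch of such a validity check, assuming vectors with entries in {0, 1, 2}; this illustrates the kind of evaluator involved and is not DeepMind's code, which scores programs by the size of the cap sets they construct.

```python
from itertools import combinations


def is_cap_set(vectors):
    """True if no three distinct vectors in F_3^n lie on a common line.

    In F_3^n, three distinct points x, y, z are collinear exactly when
    x + y + z == 0 (mod 3) in every coordinate.
    """
    points = [tuple(v) for v in vectors]
    if len(set(points)) != len(points):
        raise ValueError("vectors must be distinct")
    for x, y, z in combinations(points, 3):
        if all((a + b + c) % 3 == 0 for a, b, c in zip(x, y, z)):
            return False
    return True


print(is_cap_set([(0, 0), (0, 1), (1, 0), (1, 1)]))  # True: the largest cap set in F_3^2 has size 4
print(is_cap_set([(0, 0), (1, 1), (2, 2)]))          # False: these three points form a line
```

This brute-force check is O(N³) in the set size, which is fine for verifying candidates; the hard part FunSearch tackled is constructing large valid sets in the first place.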

Recent Deep Dives on AI Tidbits

Harnessing research-backed prompting techniques for enhanced LLM performance

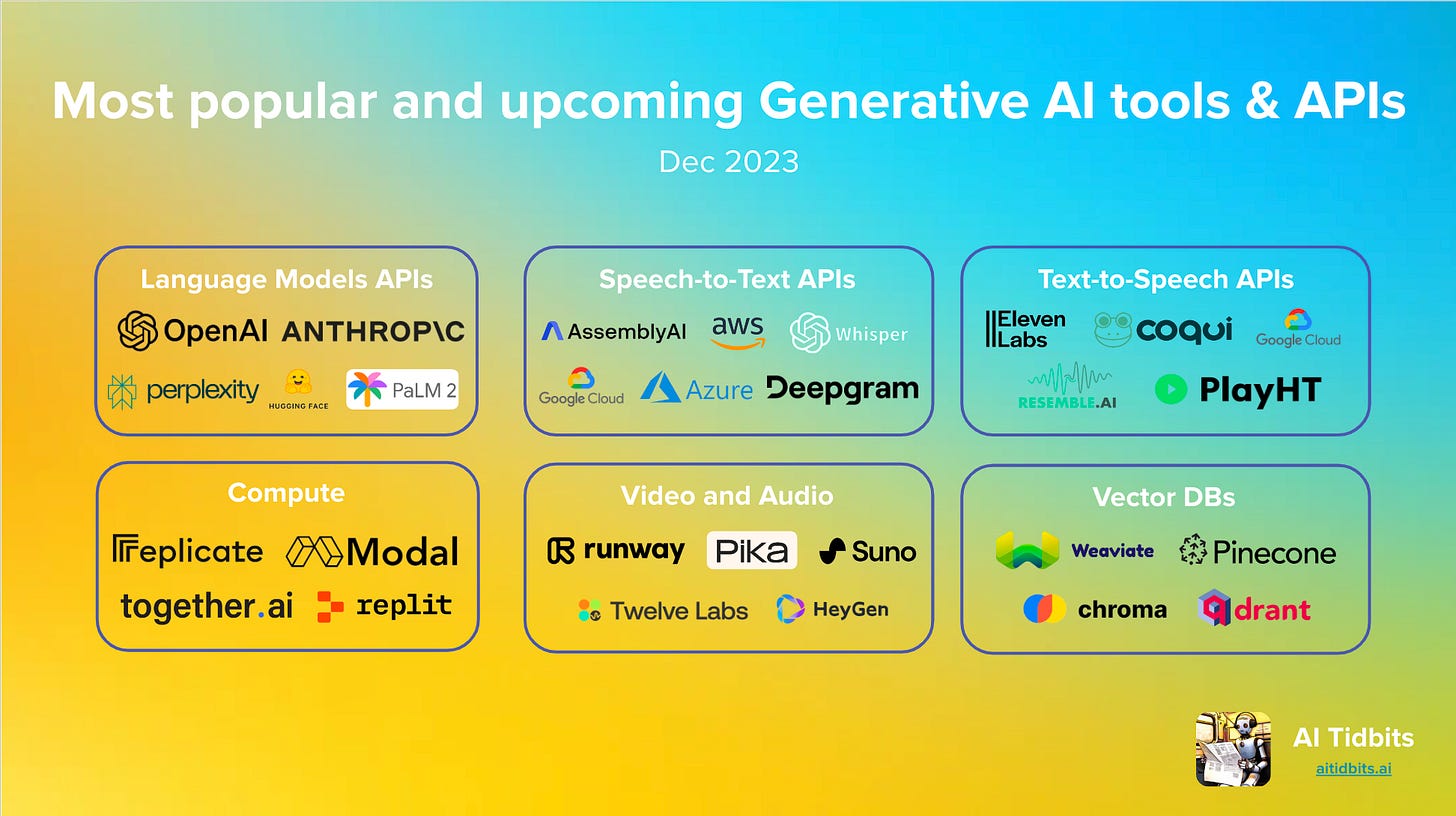

Most popular and upcoming Generative AI tools and APIs

🚀🌌 Further Reading

By Future History and Daniel Jeffries

9 Predictions for Generative AI in 2024

Top Course for Generative AI in 2023

By Maxime Labonne 👇

The Future of Generative AI in 2024

By Donato Riccio of Text Generation

Stuff we figured out about AI in 2023

via Simon Willison’s blog.

Thanks for reading!

We've been alerted to another writer who covers AI papers: https://recsys.substack.com/

Other great sources of insights on AI papers include Elvis Saravia, Sebastian Raschka, PhD, Davis Blalock, Ahsen Khaliq, Santiago Valdarrama, and many other great ML scientists like Nathan Lambert. Find them on LinkedIn, X, and Threads.