DeepSeek’s Next Move: What V4 Will Look Like 👀

China's open-source AI momentum will push the frontier in 2026 and 2027.

Good Morning,

We are on the cusp of Chinese New Year and something is happening that’s a bit dark on the U.S. frontier.

Silicon Valley has adopted a 996 lifestyle for AI research. As models get more finely tuned for agentic capabilities, something remarkable is happening. A few Chinese AI startups are now showing signs of being more innovative at the frontier of open-weight models than Silicon Valley and the rest of the world, and they are doing it with a fraction of the capital and significantly less access to the best AI chips. How could this be?

After the DeepSeek moment of last year (January 2025), China has surged ahead in open-source AI models. A flurry of Chinese IPOs means a number of AI startups and open-source incumbents are going to be a big deal.

It’s not just the quality of their models, it’s the pace of iteration. 🚀 The DeepSeek moment of last year snowballed into a very different open-source AI reality.

One year after Chinese startup DeepSeek rattled the global tech industry with the release of a low-cost artificial intelligence model, its domestic rivals are better prepared, vying with it to launch new models, some designed with more consumer appeal. - Reuters

There’s a buzz around what DeepSeek is ready to announce, and expectations are high. The software, reinforcement learning, LLM training and hardware innovations that DeepSeek is making have rubbed off on China’s vibrant open-weight LLM ecosystem. (The U.S. has no equivalent.)

The bifurcation of closed models and open models has never been this intense. While American companies get the hype, Chinese models are helping developers build real products.

DeepSeek as a Research Lab is taking a different approach

There is a sense of anticipation because DeepSeek is on the verge of its next major release cycle. As of February 2026, the primary focus is on DeepSeek V4, which is expected to launch around the Lunar New Year (which begins on Tuesday, February 17th).

Rumored to be codenamed "MODEL1," DeepSeek V4 is expected to be a total architectural overhaul rather than a minor update. Meanwhile, its next flagship reasoning model, DeepSeek-R2, has been significantly delayed and is likely still weeks away.

What will be DeepSeek’s next play?

Engram: A breakthrough “conditional memory” system that separates factual recall from reasoning. This allows the model to access vast amounts of information (over 1 million tokens) without the usual performance “amnesia.”

mHC (Manifold-Constrained Hyper-Connections): A technique designed to stabilize training and improve scalability while reducing energy consumption.

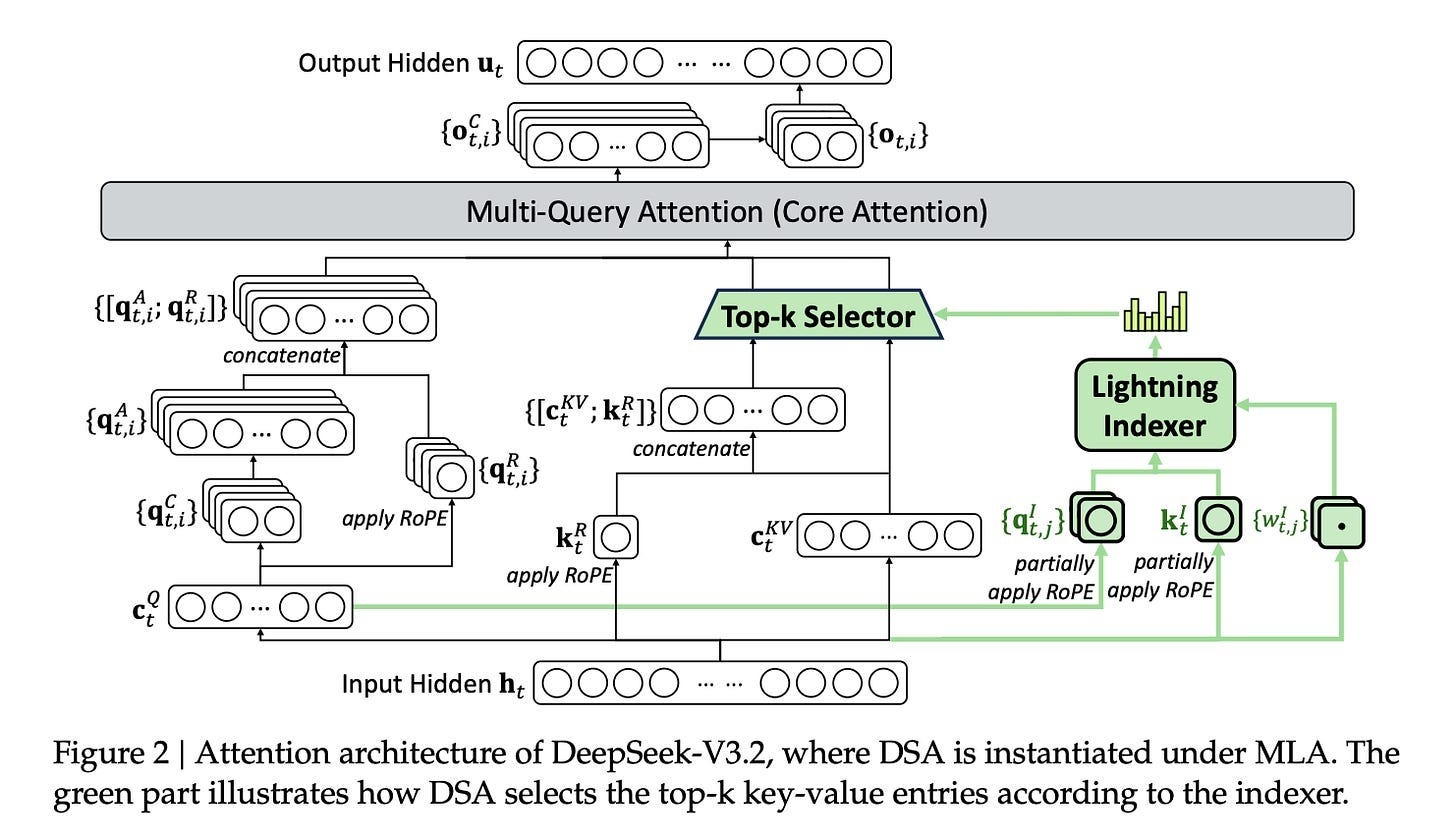

DeepSeek Sparse Attention (DSA): This enables a massive 1 million+ token context window. It allows the model to “read” an entire codebase (thousands of files) in one go, enabling true multi-file debugging and refactoring.

MODEL1 Architecture: A tiered storage system for the KV cache that reduces GPU memory consumption by 40%, making it much cheaper to run than previous frontier models.

I asked China AI blogger Tony Peng to take a deeper look into DeepSeek’s expected moves in the days and weeks ahead. DeepSeek has been busy behind the scenes over the past year, and we don’t know its full capabilities as it comes out of stealth, so to speak, with major releases.

Join Recode China AI for China AI spotlights and deeper resources, trends and stories around the ecosystem.

Zhipu AI and MiniMax Just Went Public, But They’re Not China’s OpenAI

DeepSeek-R1 and Kimi k1.5: How Chinese AI Labs Are Closing the Gap with OpenAI’s o1

The Most Important AI Panel of 2026: Can China Lead the Next Paradigm?

While Western closed-source models double down on AI coding capabilities, Chinese open-source models are showing more agentic, browser and fundamental capabilities. Major IPOs and consolidation are occurring, and China’s AI engineering prowess is starting to show ultra-competitive results. With China’s AI ecosystem converging and its AI chip makers hurrying to catch up with the rest of the world in semiconductors and HBM, China’s place in the world of AI is likely on the ascent.

I try my best to give global coverage around AI and also to call upon people with “boots on the ground” in East Asia. That’s why I respect people like Tony Peng, Grace Shao and Poe Zhao so much: they are the ones immersed and connected in the latest AI news in China. You can also get full access to this post soon on Tony’s blog.

But how good will DeepSeek’s latest models be? 🤔

“I believe V4 and R2 will remain among the best open-source LLMs available, potentially even narrowing the gap with leading proprietary models.” - Tony Peng (former AI reporter for Synced)

That being said, let’s dig into that anticipation and the key details of what we know before the unveiling event:

👀DeepSeek’s Next Move: What V4 Will Look Like

By Tony Peng

Sparsity is DeepSeek’s sauce to scale intelligence under hard constraints

The widely anticipated DeepSeek V4 large language model (LLM) is expected to reach the public in mid-February, right before the Chinese New Year. V4, DeepSeek’s next-generation base model, is expected to be the strongest challenge yet from open-source models to proprietary ones, and it is among the most anticipated releases in the AI space for early 2026.

According to Reuters and The Information, DeepSeek V4 is optimized primarily for coding and long-context software engineering tasks. Internal tests (per reporting) suggest V4 could outperform Claude and ChatGPT on long-context coding tasks.

2025 was a watershed year for DeepSeek and for the open-source movement that followed, fueled by Chinese AI labs. The release of V3 and R1 upended multiple AI narratives: that only spending hundreds of millions could produce a frontier LLM, that only Silicon Valley companies had the talent to train competitive models, and that the U.S.-China gap in AI was widening due to chip shortages.

Throughout the rest of 2025, DeepSeek continued churning out notable models, including DeepSeek-V3.2-Thinking and DeepSeek-Math-V2, which reached gold-medal-level scores on International Mathematical Olympiad problems. Yet the widely anticipated DeepSeek-V4 and DeepSeek-R2, which were reportedly slated for release in the first half of 2025, were delayed. According to reports, DeepSeek CEO Liang Wenfeng was dissatisfied with the results and chose to delay the launch.

The Financial Times offered an alternative explanation: DeepSeek initially attempted to train R2 using Huawei’s Ascend AI chips rather than Western silicon like Nvidia’s GPUs, partly due to pressure from the Chinese government to reduce reliance on U.S.-made hardware. The training runs encountered repeated failures and performance issues stemming from stability problems, slow chip-to-chip interconnect speeds, and immature software tooling for Huawei’s chips. Ultimately, DeepSeek had to revert to Nvidia hardware for training while relegating Huawei chips to inference tasks only. This back-and-forth process and subsequent re-engineering significantly delayed the timeline.

Sparsity Through Iteration

DeepSeek’s architectural evolution has been driven by a consistent principle: Sparsity.

While computation can be scaled relatively easily by adding more data, more model parameters, and more chips, sparsity is the most straightforward way for DeepSeek to scale intelligence under constraints on compute, memory bandwidth, and chip supply. These constraints shaped every iteration of its model architecture, from DeepSeekMoE’s initial Mixture of Experts (MoE) through the attention optimizations in V2, V3, and V3.2.

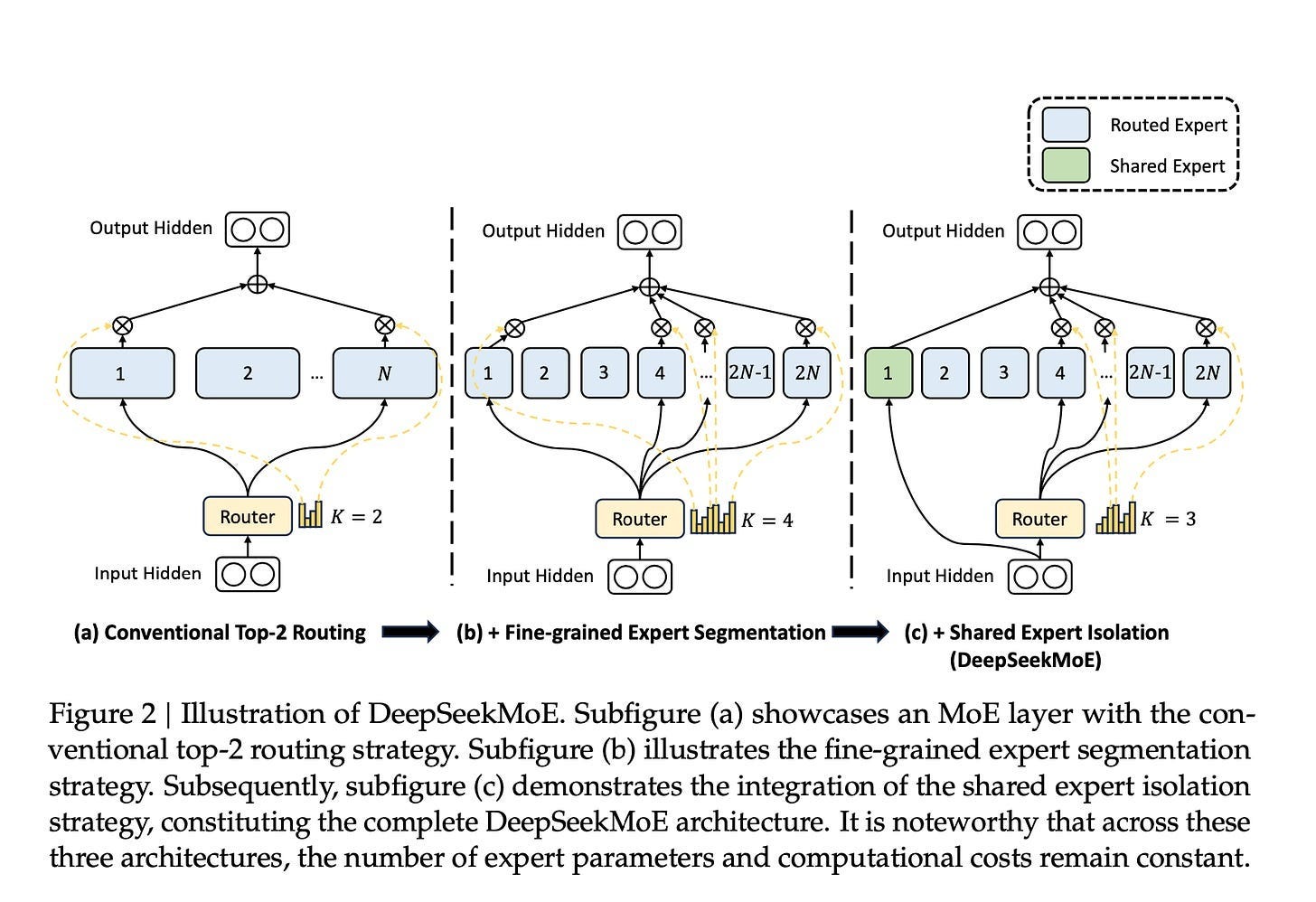

In a Transformer, you have two main computational blocks: attention and the feed-forward network (FFN). DeepSeek started by making the feed-forward layers sparse using MoE. Instead of sending every token through the entire network, the model routes each token to only a small, relevant subset of parameters known as experts.

DeepSeekMoE laid the groundwork with a MoE architecture containing 64 specialized experts and 2 shared experts. For each input, the model would route it to the 6 most relevant experts (topk=6).

DeepSeek-V2 expanded the expert pool to 160 specialized experts while keeping 2 shared experts and the same topk=6 routing, with improvements focused on distribution and efficiency.

DeepSeek-V3 scaled further to 256 experts with 1 shared expert, and increased routing to topk=8. The architecture became more sophisticated in how it managed experts, and introduced a new communication system called DeepEP that makes expert coordination more efficient.
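The routing step described above can be sketched in a few lines. This is a minimal illustration rather than DeepSeek’s actual router: the function names, the softmax gate, and the example scores are our assumptions, and only the expert counts and top-k values come from the text.

```python
import math

def route_token(scores, top_k):
    """Pick the top_k routed experts for one token and normalize their gates.

    scores: one router affinity score per routed expert (hypothetical values).
    Returns (expert_index, gate_weight) pairs whose weights sum to 1.
    """
    chosen = sorted(range(len(scores)), key=lambda i: scores[i], reverse=True)[:top_k]
    # Softmax over the selected experts only (an assumption made for this sketch).
    peak = max(scores[i] for i in chosen)
    exps = [math.exp(scores[i] - peak) for i in chosen]
    total = sum(exps)
    return [(i, e / total) for i, e in zip(chosen, exps)]

# (routed experts, shared experts, top-k) as quoted in the text above.
CONFIGS = {
    "DeepSeekMoE": (64, 2, 6),
    "DeepSeek-V2": (160, 2, 6),
    "DeepSeek-V3": (256, 1, 8),
}

def active_fraction(routed, shared, top_k):
    """Fraction of expert blocks that run for a single token.

    Shared experts always run; only top_k of the routed experts do.
    """
    return (top_k + shared) / (routed + shared)

for name, (routed, shared, top_k) in CONFIGS.items():
    share = active_fraction(routed, shared, top_k)
    print(f"{name}: {share:.1%} of expert blocks active per token")
```

Under these counts, the share of expert blocks touched per token falls from roughly 12% in DeepSeekMoE to under 4% in V3, which is the sense in which each generation became sparser.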

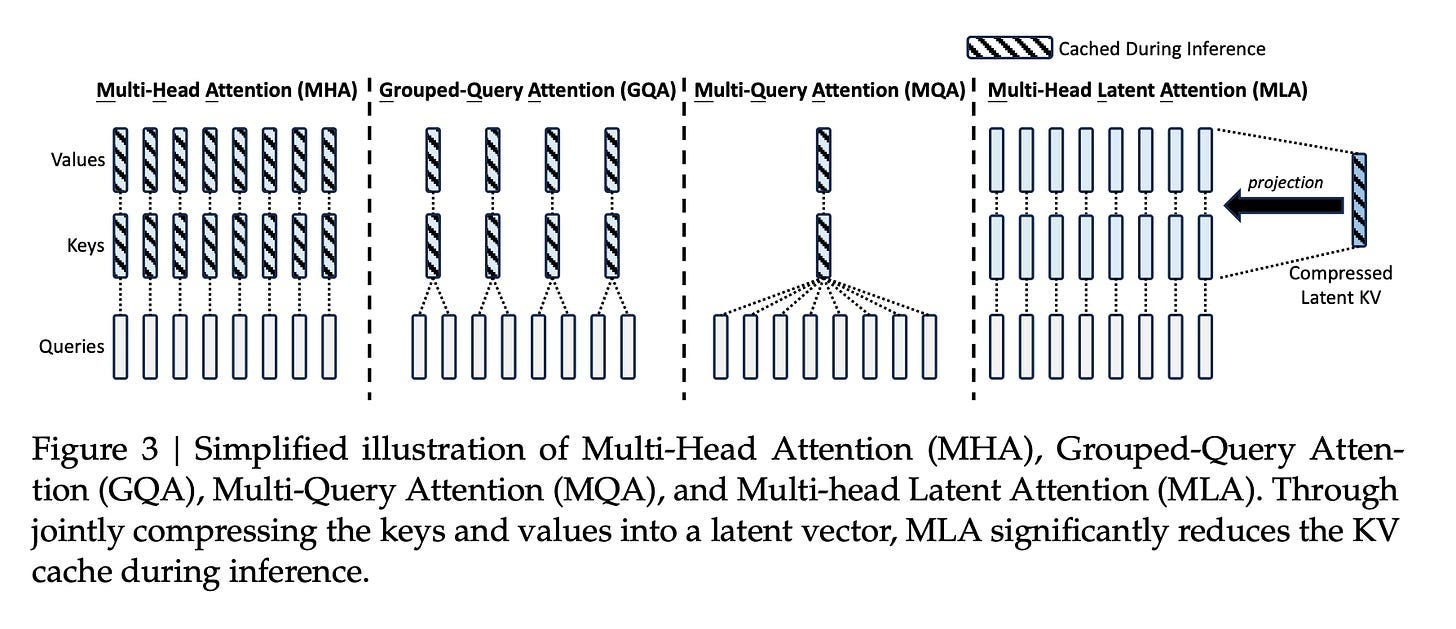

Once the network sparsity was well-optimized, attention became the next target for improvement. As the core module of the Transformer architecture, attention is where each token in a sequence analyzes and weights the importance of every other token to understand context and relationships. Attention presents a different challenge, because its computational cost grows quadratically with the length of the context window.

Multi-head Latent Attention (MLA) was DeepSeek’s first major attention innovation introduced in V2. Instead of storing complete key-value information for every token (as standard Multi-Head Attention does), MLA compresses this information into a smaller representation. This compression reduces how much data needs to be moved in and out of memory.
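A rough count makes the saving concrete. The sketch below compares how many values are cached per token, per layer, under standard Multi-Head Attention and under MLA. The dimensions are assumptions based on our reading of the DeepSeek-V2 configuration (128 heads of width 128, a 512-wide compressed latent plus a 64-wide positional part); treat them as illustrative rather than authoritative.

```python
def mha_cache_per_token(n_heads, head_dim):
    """Standard MHA caches a full key and a full value vector for every head."""
    return 2 * n_heads * head_dim

def mla_cache_per_token(latent_dim, rope_dim):
    """MLA caches one compressed latent plus a small positional (RoPE) key."""
    return latent_dim + rope_dim

# Assumed, illustrative dimensions (see the lead-in above).
N_HEADS, HEAD_DIM = 128, 128
LATENT_DIM, ROPE_DIM = 512, 64

mha = mha_cache_per_token(N_HEADS, HEAD_DIM)
mla = mla_cache_per_token(LATENT_DIM, ROPE_DIM)
print(f"MHA: {mha} values per token per layer")
print(f"MLA: {mla} values per token per layer")
print(f"Reduction: about {mha / mla:.0f}x")
```

With these assumed dimensions the cache shrinks from 32,768 values to 576 per token per layer, which is why less data has to move in and out of memory during decoding.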

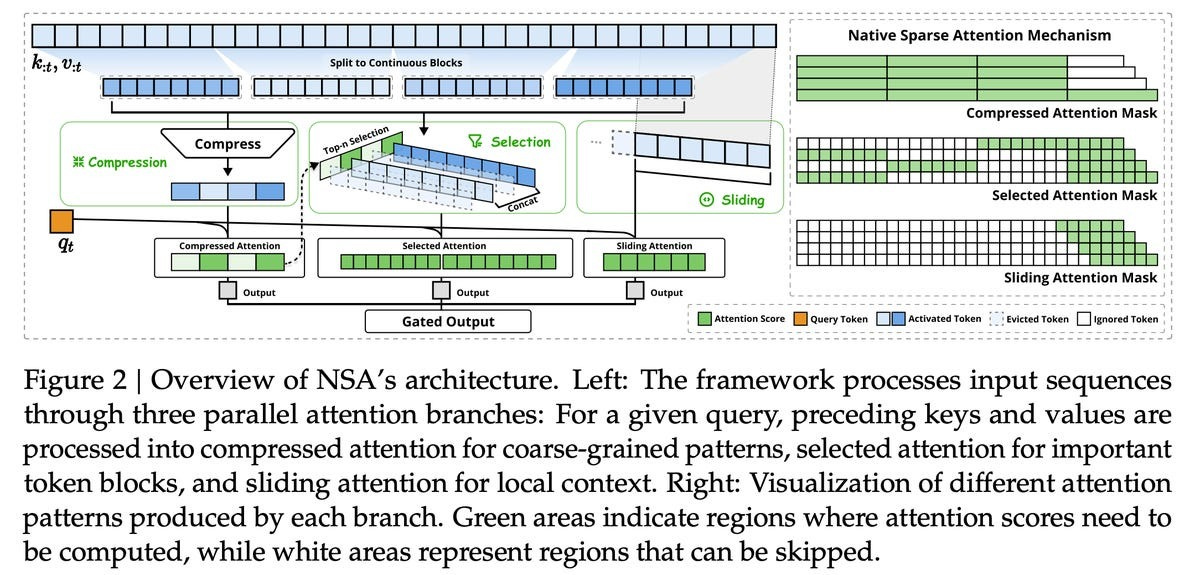

Then in a February 2025 paper, DeepSeek introduced Native Sparse Attention (NSA) with optimized design for modern hardware. Tokens are processed through three attention paths: compressed coarse-grained tokens, selectively retained fine-grained tokens, and sliding windows for local contextual information.

DeepSeek Sparse Attention (DSA), introduced with V3.2-Exp and carried into V3.2, streamlined the NSA design. Instead of selecting blocks of tokens, DSA selects individual tokens. It uses a lightweight indexer to identify the 2,048 most relevant tokens from the full context. The indexer is trained to mimic the full attention pattern: the model first trains with full attention, then the indexer learns to predict which tokens the full attention would focus on. DSA is compatible with MLA.
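The token-level selection can be sketched as follows. This is a simplified illustration, not DeepSeek’s kernel: the indexer scores are hypothetical inputs, the helper names are ours, and the pair count ignores the causal mask. Only the top-2,048 budget comes from the text.

```python
import heapq

def select_top_k(index_scores, k):
    """Return the positions of the k highest indexer scores, in position order."""
    k = min(k, len(index_scores))
    top = heapq.nlargest(k, range(len(index_scores)), key=index_scores.__getitem__)
    return sorted(top)

def attention_pairs(context_len, k=None):
    """Query-key pairs scored by the main attention over a full sequence.

    With k=None every query attends to every key (dense attention);
    otherwise each query attends to at most k selected keys.
    """
    per_query = context_len if k is None else min(k, context_len)
    return context_len * per_query

# Tiny example: keep the 2 most relevant of 5 earlier tokens.
print(select_top_k([0.1, 0.9, 0.3, 0.8, 0.2], 2))

# Cost comparison for a 128,000-token context with the top-2,048 budget.
dense = attention_pairs(128_000)
sparse = attention_pairs(128_000, k=2048)
print(f"dense / sparse = {dense / sparse}")
```

Note that the indexer itself still scores every earlier token for each query; the saving comes from running the expensive main attention only over the selected tokens.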

DSA+mHC+Engram

With the foundation of DSA established in V3.2, DeepSeek appears ready to push optimization further in V4. The evidence points to two other papers that DeepSeek released over the past few months.

Manifold-Constrained Hyper-Connections (mHC)

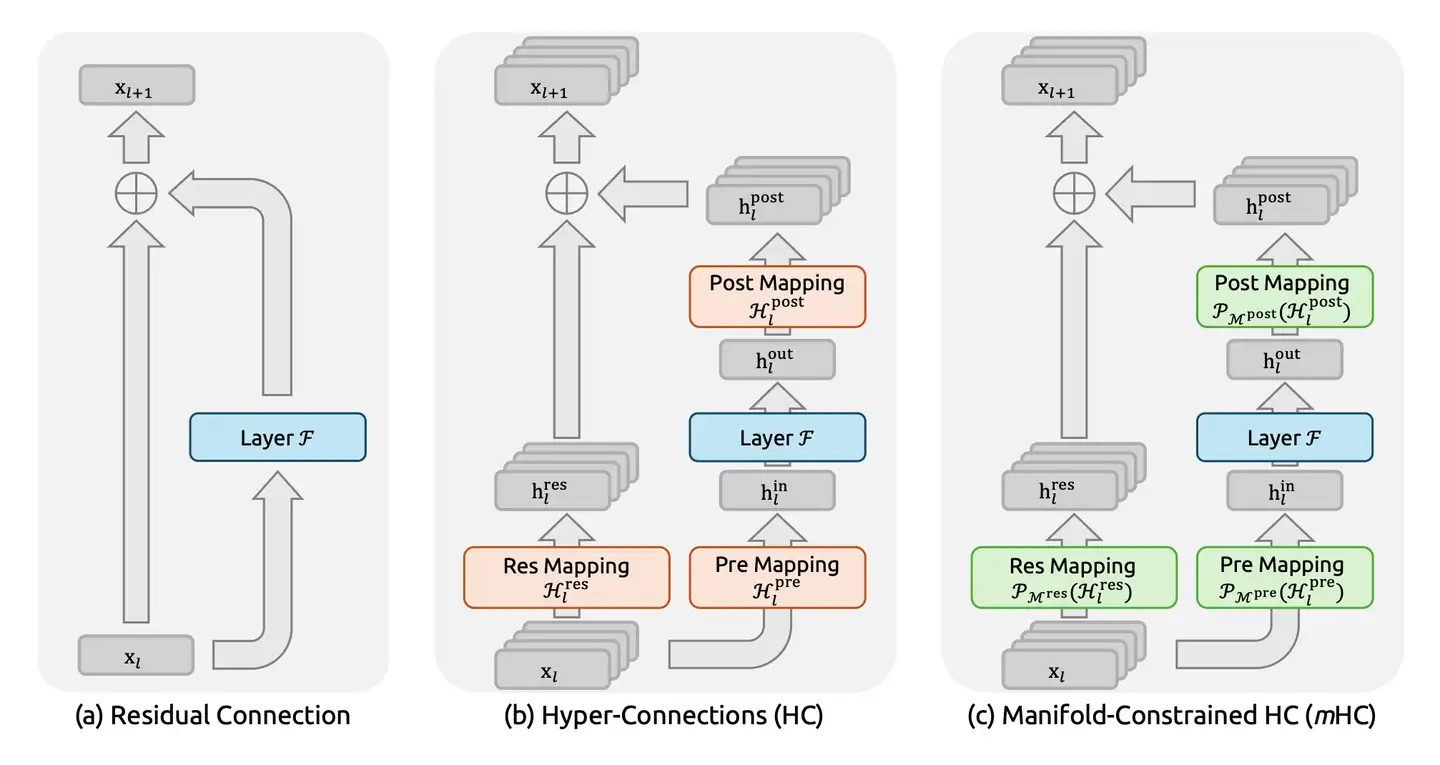

mHC represents a rethinking of how information flows through deep neural networks. While traditional networks pass information sequentially from one layer to the next, mHC introduces richer connectivity patterns between layers—essentially creating multiple pathways for information to flow across the model’s depth.

To understand mHC, we must first revisit residual connections, the backbone of modern deep neural networks regardless of architecture (CNN or Transformer). Proposed in the landmark 2015 paper Deep Residual Learning for Image Recognition, residual connections add the input (x) of a block directly to its output F(x), typically written as y = F(x) + x.

This simple shortcut proved revolutionary. By allowing gradients to bypass layers, residual connections solved the vanishing gradient problem that had plagued deep networks, enabling effective training of architectures with hundreds or even thousands of layers.
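A toy calculation shows why the shortcut matters. The sketch assumes a stack of identical scalar layers whose local derivative dF/dx is a small constant; that simplification, and the function name, are ours and stand in for the far messier behavior of real networks.

```python
def gradient_through_stack(layer_grad, depth, residual):
    """Gradient of the output w.r.t. the input for `depth` identical scalar layers.

    layer_grad is dF/dx for one layer (a hypothetical constant).
    Without a shortcut, each layer multiplies the gradient by dF/dx.
    With y = F(x) + x, each layer multiplies it by (1 + dF/dx).
    """
    factor = 1.0 + layer_grad if residual else layer_grad
    return factor ** depth

plain = gradient_through_stack(0.01, depth=50, residual=False)
skip = gradient_through_stack(0.01, depth=50, residual=True)
print(f"no shortcut:   {plain:.3e}")
print(f"with shortcut: {skip:.3f}")
```

Without the shortcut the gradient collapses to a vanishingly small number after 50 layers, while the "+ x" term keeps it close to 1 because the identity path always contributes.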

Then in 2024, ByteDance researchers proposed hyper-connections (HC) as an alternative approach. Rather than simple additive shortcuts, HC creates richer connectivity patterns that allow information to flow more freely between non-adjacent layers. This architectural flexibility offers advantages over standard residual connections, particularly in avoiding the gradient-representation tradeoffs.

But HC introduced its own challenge. As training scales increase and connectivity grows richer, the risk of training instability rises. More pathways for information flow means more opportunities for gradients to either explode (grow uncontrollably large) or vanish (shrink to near-zero) during training.

This is where DeepSeek’s mHC innovation emerges. The approach constrains the matrices that mix information between pathways to lie on a specific geometric structure, a manifold. By imposing mathematical constraints based on this manifold geometry, mHC creates a guardrail system that maintains stable information flow even as connectivity increases.

Think of it as a multi-lane highway with intersections. Vehicles can change lanes, merge, or split—increasing routing flexibility. But the total traffic flow must remain constant and balanced. Information can take different paths through the network, but the overall flow is constrained to prevent either congestion (gradient explosion) or emptiness (gradient vanishing).

The mathematical formulation ensures that information transformations remain well-behaved across the manifold. This prevents the instabilities that would otherwise emerge from unconstrained hyper-connections, enabling deeper and more flexible architectures while maintaining training stability.
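The highway analogy can be made concrete with a small experiment. As we understand the mHC paper, the constraint makes each mixing matrix doubly stochastic (every row and every column sums to 1), reached by Sinkhorn-Knopp normalization; treat that detail, the function names, and the example matrix as our assumptions rather than DeepSeek’s implementation.

```python
def sinkhorn(matrix, iterations=50):
    """Alternately normalize rows and columns of a positive square matrix."""
    m = [row[:] for row in matrix]
    n = len(m)
    for _ in range(iterations):
        for row in m:
            s = sum(row)
            for j in range(n):
                row[j] /= s
        for j in range(n):
            s = sum(m[i][j] for i in range(n))
            for i in range(n):
                m[i][j] /= s
    return m

def mix(matrix, streams):
    """Mix the parallel residual streams (one scalar per stream here)."""
    return [sum(w * x for w, x in zip(row, streams)) for row in matrix]

# Hypothetical unconstrained mixing weights for three streams.
raw = [[1.0, 4.0, 0.5], [2.0, 0.3, 3.0], [0.2, 1.5, 2.5]]
constrained = sinkhorn(raw)

free = [3.0, 0.0, 0.0]
safe = [3.0, 0.0, 0.0]
for _ in range(20):  # pass the signal through 20 mixing steps
    free = mix(raw, free)
    safe = mix(constrained, safe)

print(f"unconstrained total: {sum(free):.3e}")
print(f"constrained total:   {sum(safe):.6f}")
```

The unconstrained signal grows by orders of magnitude over 20 steps, while the constrained one keeps the same total "traffic" it started with: lanes can be reshuffled, but nothing is created or lost.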

For V4, mHC could prove strategic. As DeepSeek pushes toward increasingly sparse and selective processing through DSA, Engram, and other mechanisms, having stable hyper-connections allows these components to interact more effectively across layers.

Engram

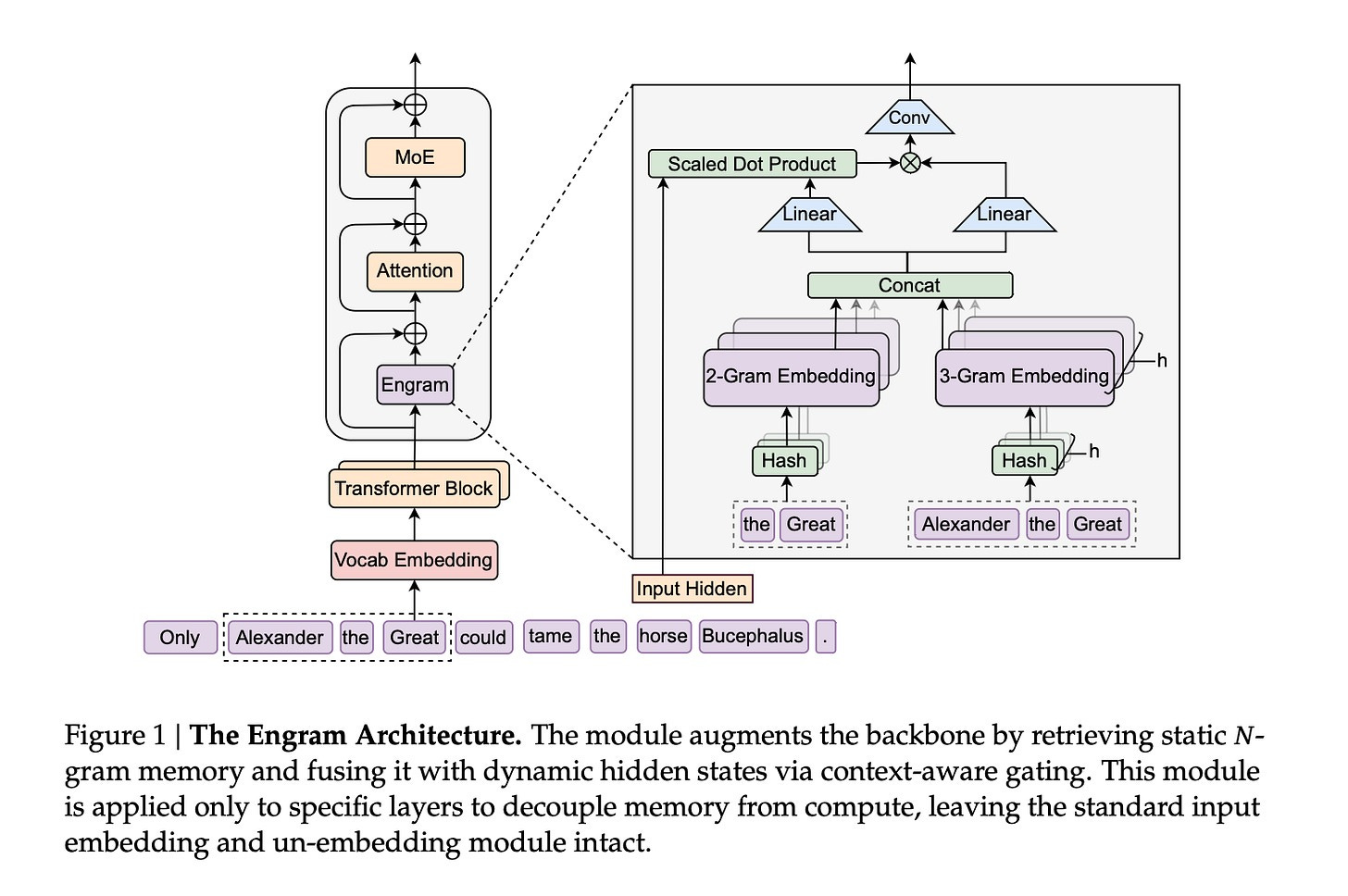

Engram, detailed in DeepSeek’s January 2026 paper Conditional Memory via Scalable Lookup: A New Axis of Sparsity for Large Language Models, introduces a new dimension to model architecture by adding conditional memory to the Transformer through efficient lookup mechanisms.

Traditional Transformer-based LLMs compress all learned knowledge into neural network weights. Whether answering a simple factual query like “Barack Obama was a U.S. president” or solving a complex mathematical proof, the model must route every computation through the same expensive neural processing.

Consider the phrase “New York City.” A standard Transformer must learn that “New,” “York,” and “City” together form a specific entity, then rebuild that relationship through attention computation every single time. This is static knowledge that never changes, yet the model treats it like novel information requiring full neural processing each time.

Engram’s premise is elegantly simple: not all knowledge requires neural computation. Static facts and established patterns can be stored in a complementary memory system and retrieved efficiently when needed. This mirrors biological memory: You don’t re-calculate that 2+2=4 each time; you simply recall it.

Engram implements this through a modernized N-gram lookup module operating in constant time (O(1))—retrieval speed remains constant regardless of how much information is stored. This creates a fundamental architectural separation:

Neural computation (attention and MoE): Complex reasoning, novel synthesis, context-dependent processing

Memory lookup (Engram): Static knowledge, established patterns, factual recall
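The lookup side of this split can be illustrated with a toy hashed N-gram table. This is a deliberately simplified sketch: the class name, the hash function, and the token ids are hypothetical, and the module described in the paper is more elaborate than a single table read.

```python
class NGramMemory:
    """Toy hashed N-gram table: one slot read per query, whatever the table size."""

    def __init__(self, num_slots, dim):
        self.num_slots = num_slots
        self.table = [[0.0] * dim for _ in range(num_slots)]

    def slot(self, ngram):
        # Deterministic polynomial hash of the token ids (a hypothetical choice).
        h = 0
        for token_id in ngram:
            h = (h * 1_000_003 + token_id) % self.num_slots
        return h

    def write(self, ngram, vector):
        self.table[self.slot(ngram)] = list(vector)

    def read(self, ngram):
        # Cost depends on the N-gram length, not on how many slots exist.
        return self.table[self.slot(ngram)]

# Hypothetical token ids standing in for "New", "York", "City".
NEW_YORK_CITY = (2101, 4452, 903)

memory = NGramMemory(num_slots=1 << 16, dim=4)
memory.write(NEW_YORK_CITY, [0.2, -0.7, 1.1, 0.4])
print(memory.read(NEW_YORK_CITY))
```

Because the slot index is computed directly from the token ids, retrieval does one hash and one table read no matter how large the table grows, which is the constant-time property the text describes. A real system also has to handle hash collisions, which this sketch ignores.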

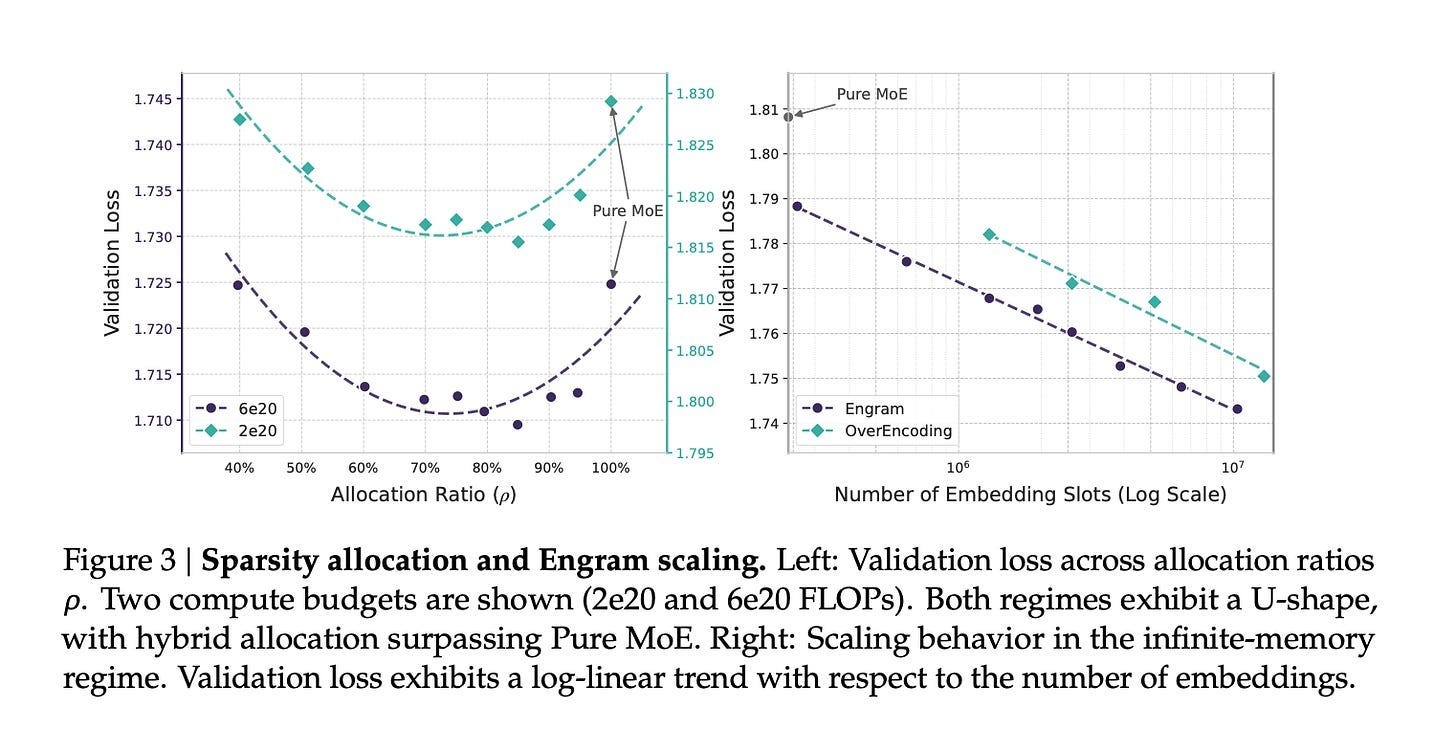

The architecture optimizes the balance using a U-shaped scaling law—a mathematical framework determining ideal parameter allocation between neural computation and memory lookup at different model scales. This is crucial because the tradeoff isn’t straightforward: too much reliance on lookup tables risks brittleness; too much neural computation wastes resources on static knowledge. The U-shaped law identifies the sweet spot where both systems work synergistically.

Memory efficiency improves dramatically because Engram offloads static knowledge from expensive GPU memory to host CPU memory. Empirical results demonstrate that offloading a 100B-parameter lookup table to host memory incurs negligible overhead (less than 3%). This enables massive knowledge bases without proportional GPU memory costs, while supporting much longer effective context windows.

For reasoning and knowledge-intensive tasks, Engram delivers measurable improvements over comparable MoE-only architectures. Benchmarks show particular gains in multi-hop reasoning and long-context understanding—tasks that benefit from quick access to established knowledge while applying neural computation to novel reasoning steps.

Model1: New Clues to DeepSeek V4?

Just over a year after DeepSeek-R1 became the most-liked model on Hugging Face, sharp-eyed developers have spotted a mysterious “Model1” in recent code updates to the FlashMLA library. The timing is suggestive—could Model1 be the codename for DeepSeek V4?

Analysis of recent commits reveals several architectural signatures suggesting Model1 is an entirely new flagship model:

The 512-Dimensional Shift: Model1 switches from V3.2’s 576-dimensional configuration to 512 dimensions, likely optimizing for NVIDIA’s Blackwell (SM100) architecture where power-of-2 dimensions align better with hardware.

Blackwell GPU Optimization: New SM100-specific interfaces, a CUDA 12.9 requirement, and performance benchmarks showing 350 TFLOPS on B200 for sparse MLA operations point to deep integration with next-generation hardware.

Token-Level Sparse MLA: Separate test scripts for sparse and dense decoding indicate parallel processing pathways. The implementation uses FP8 for storing KV cache and bfloat16 for matrix multiplication, suggesting design for extreme long-context scenarios.

Value Vector Position Awareness (VVPA): This new VVPA mechanism likely addresses a known weakness in traditional MLA—positional information decay over long contexts. As sequences extend into hundreds of thousands of tokens, compressed representations can lose fine-grained positional details. VVPA appears designed to preserve this spatial information even under aggressive compression.

Engram Integration: References to Engram throughout the codebase suggest deep integration into Model1’s architecture.
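The FP8 KV-cache clue in the list above is easier to appreciate with some back-of-the-envelope arithmetic. Every number here is an assumption made for illustration: a 1-million-token context, a 512-wide cached vector per token per layer, and a 61-layer depth borrowed from DeepSeek-V3. The estimate also ignores quantization scale factors and any positional components that may be stored at higher precision.

```python
def kv_cache_bytes(tokens, cached_dim, layers, bytes_per_value):
    """Approximate KV-cache size for one sequence."""
    return tokens * cached_dim * layers * bytes_per_value

TOKENS = 1_000_000   # long-context target discussed in the article
CACHED_DIM = 512     # per-token width from the Model1 clue above
LAYERS = 61          # hypothetical depth, borrowed from DeepSeek-V3

fp8 = kv_cache_bytes(TOKENS, CACHED_DIM, LAYERS, bytes_per_value=1)
bf16 = kv_cache_bytes(TOKENS, CACHED_DIM, LAYERS, bytes_per_value=2)
print(f"FP8:  {fp8 / 1e9:.1f} GB")
print(f"BF16: {bf16 / 1e9:.1f} GB")
```

Under these assumptions, a single million-token sequence needs about 31 GB of cache in FP8 versus about 62 GB in bfloat16, which is the kind of gap that decides whether a long context fits on one accelerator.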

Concluding Remarks

I believe V4 and R2 will remain among the best open-source LLMs available, potentially even narrowing the gap with leading proprietary models. However, since DeepSeek R1’s release over a year ago, the competitive race to push LLM capabilities forward has only intensified. The “DeepSeek effect” has motivated several other Chinese AI labs—including Moonshot AI, MiniMax, and Zhipu—to redouble their efforts in releasing top-tier LLMs. This doesn’t even account for tech giants Alibaba and ByteDance, both of which are producing frontier models while simultaneously expanding into chatbots, AI cloud services, chips, and hardware.

Given this rapidly evolving landscape, expecting another watershed “DeepSeek moment” like the one in early 2025 seems nearly impossible.

DeepSeek appears fairly transparent in its papers and functions as a legitimate research lab rather than one that is only chasing commercial profits. Its founder is a unique force in the Chinese ecosystem.