AI risk and global order: will AI governance survive a new Tech Cold War?

A guest post by Eugenio V Garcia: Deputy Consul of Brazil in San Francisco 🌎

Hello Everyone,

This newsletter, A.I. Supremacy, in addition to considering the business, technological, and societal implications of A.I., was also set up to explore the political ramifications of A.I. regulation, governance, and risk, and their impact on diplomacy and geopolitics or, in some cases, the lack of it.

Recently I have been having discussions with a number of A.I. risk thinkers and tech diplomacy analysts. I came across Eugenio's work on Medium (see the further reading list at the end for links) and asked him to write a guest post for us. He is a tech diplomat, so his insights are both professional and personal, drawing on considerable experience with the U.N., Silicon Valley, and Brazil. He is also a researcher in A.I. governance; see his bio at the end for more details.

If you think these topics are important, consider upgrading your subscription for the full coverage and analysis.

~ Michael

11 February 2023

AI risk and global order: will AI governance survive a new Tech Cold War?

Eugenio V Garcia

Are we heading toward a new Tech Cold War? If artificial intelligence is set to become a game changer in this century, profoundly affecting technological progress, competitiveness, and power, will long-term risks grow into a disaster foretold brought by a relentless struggle for AI supremacy?

The age of Broad AI

Over the last few months, a Cambrian explosion of Generative AI applications has been underway. The time between the development of new systems at AI labs and their release to the general public has been shrinking. Dazzled by all these massive novelties, ordinary citizens believe artificial general intelligence (AGI) is just around the corner (it is not) and dystopian futures are (again) the subject of everyday conversations.

In reality, the traditional distinction between Narrow AI and AGI no longer holds. Narrow AI deals with particular tasks within predictable parameters, such as playing chess, recognizing faces, and so on. AGI does not exist for the moment and no one really knows if and when it will be achieved.

But new software-generated prediction models will soon have the capacity to perform multiple tasks and, in practice, become some sort of “generalist” (“broad” is a better term) platforms with several simultaneous uses. Powerful large language models will converge and integrate with image and video generation, giving rise to multipurpose chatbots capable of searching, chatting, and creating at scale in a way that makes “narrow” a poor definition. And this is just an example of many other Broad AI applications that will be developed in the years to come.

A normative vacuum in AI governance

Are these developments a foreign policy priority for most states? We can safely say that AGI is currently not on the diplomatic agenda. As far as Narrow AI is concerned, near-term risks have not been alarming enough to mobilize national governments to seriously negotiate a common international approach to set consistent standards and address pressing challenges. It remains to be seen if Broad AI would change this state of affairs.

There is a more polarized world order today and indeed many obstacles prevent the international governance of AI from gaining traction, including (but not only) power politics, the ideological divide, growing competition, and confrontational nationalism. Ongoing geopolitical tensions do not seem conducive to meaningful agreements in this field within a short time. Both the absence of collaborative governance and mistrust toward multilateralism make it harder to manage present and future AI risks.

Despite significant progress on many fronts, the international governance of AI lacks commonly agreed norms, policies, and safety measures. A decentralized landscape appears to be the norm. A large number of governance tools are missing, such as normative instruments, institutions, and technical standards.

Certainly, there is an emerging consensus on some principles that might govern AI: human-centered AI, transparency, accountability, safety, non-discrimination, reducing bias, and many others. At the global level, however, no international regime goes beyond these high-level principles.

The overall impact of AI technologies will not be limited to national borders. If states fail to coordinate properly, global governance could become Balkanized. A fragmented landscape may lead to a “splinternet” scenario, in which opposing blocs have mutually incompatible rules.

Doing nothing is not an option. Driven by a race to the bottom, governments, private companies, and other actors can push for rapid AI development in a Wild West normative vacuum, with little regard for ethical concerns or unintended consequences.

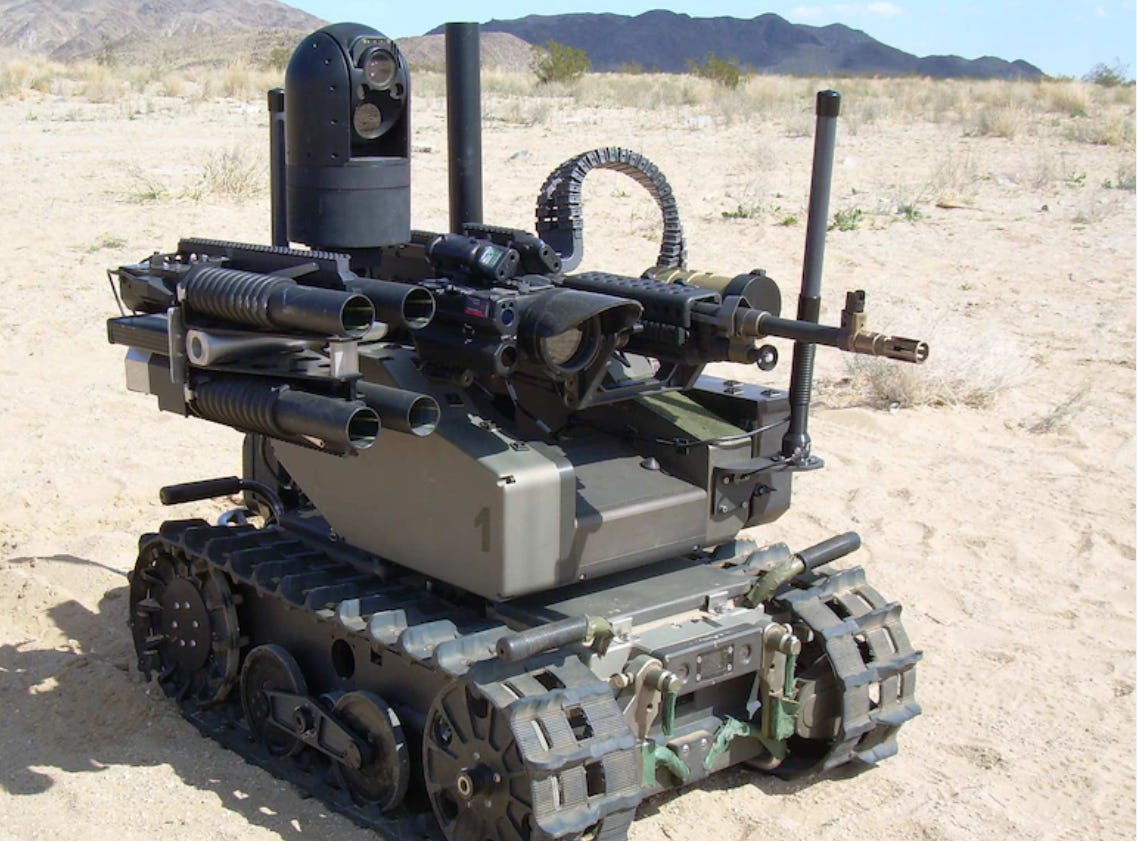

The US military has made developing AI robots a focus in recent years. (Ed.)

AI for peace… or war?

Take military uses of machine learning capabilities as an example of what might happen if we do not act expeditiously. Great-power competition has been the justification for the weaponization of AI in what many see as a free-for-all, unconstrained arms race.

Actually, in the military domain, the genie is already out of the bottle. Remote, dehumanizing warfare could become commonplace on the future high-speed battlefield. And yet, we are still far from building any AI system flexible enough to understand the broader context in real-life situations and reliably adapt its behavior under changing circumstances.

Allowing self-learning and unpredictable systems to make critical decisions may put humanity on a dangerous path. The most compelling long-term risk is losing human control over the use of force.

Despite all these concerns and lengthy discussions in Geneva on lethal autonomous weapons systems, states have been so far unable to agree on the modalities of a mandatory legal framework for these emerging technologies.

This is why drawing the line somewhere must be of the utmost priority before it is too late. Not only should the principle of peaceful uses of AI systems be made a cornerstone of international law. Most importantly, the foundational moral standpoint that life-and-death decisions should not be delegated to machines is vital to avoid nightmare scenarios that hardly anyone wishes to see unfold.

How about the Global South?

There is no shortage of articles, books, and reports about rivalry among great powers and their quest for technological leadership. Much less is written about the majority of the world’s population, their problems, and their expectations.

For developing countries, global inequality is a central theme. Many countries do not have the computing capacity necessary to train and develop large-scale AI models within their borders. Instead, they have to import, consume, and eventually pay royalties for technologies developed elsewhere.

Developing countries without basic digital infrastructure and expertise to reap the benefits of AI will be the most affected by a growing gap in wealth and prosperity. Lacking resources, technical skills, or policy mechanisms to counter tech-leading countries or powerful Big Tech companies effectively, their data may be up for grabs.

With or without a Tech Cold War, a new international division of labor could emerge, not necessarily benign for everyone. Will underpaid annotators from the Global South always be associated with the “human” behind RLHF - Reinforcement Learning from Human Feedback?

If stakes are high and will likely result in cross-border externalities, all should be involved in the discussions and have their say. Underrepresented regions, such as Africa or Latin America and the Caribbean, cannot be ignored. International cooperation on AI policymaking is a crucial task that should not be restricted to experts from the most technologically advanced nations.

So what is next?

As AI technologies become widespread across the globe, the need for AI governance will probably increase accordingly. Norm-setting can lead to responsible strategies that encourage collective action, minimize risks, and prevent harm.

For proper governance to be effective, especially when enforcement is needed, the active participation of states will be required sooner rather than later. The leading powers in technological development have the most leverage to establish global standards and legally binding instruments to govern AI. Ironically, they currently seem to be the least interested in doing so.

Although there are many initiatives by governments at both the national and regional levels, as well as by civil society organizations and the private sector, many of them engage few partners or exclude others. Like-minded groups can agree on fundamental principles (and this should be welcomed), but ultimately cross-cultural dialogue must include other countries from different ideological, religious, or cultural contexts.

In a multicultural, diverse, and heterogeneous world, with distinct societies coexisting in a complex and interconnected global network, building consensus calls for flexibility to negotiate with a wide range of partners in a changing spectrum of values, not always identical and often regarded in different ways by people, depending on their mindset, ideology, and cultural background.

State behavior happens to be frequently guided not by antagonistic values, but by concrete needs and interests at stake in a given negotiation. Outreach and engagement should pragmatically gather all countries that support a rules-based international order, regardless of perceived views of what their values are supposed to be.

In other words, national positions are built around interests. Seek creative solutions to reconcile those interests, even if that means leaving your comfort zone.

Give diplomacy a chance

Whenever possible, we should leave the door open for diplomacy and refrain from a binary way of thinking typical of bloc politics and zones of influence. A world in “black and white” obliterates grey areas, silences moderate views, and polarizes positions. Countries should try to overcome the Us vs. Them divide and embrace heterogeneity in a multicentric world.

Individual states or small groups of states, however powerful, cannot make unilateral decisions on behalf of the international community. If any norms are to be adopted, or if someday we reach the point of negotiating an international treaty on AI, a neutral, legitimate, and inclusive platform will be needed as the locus for it.

Computer scientists, developers, and software engineers have a key role to play in this regard. Sound AI governance would benefit from further dialogue between technologists and political leaders, parliamentarians, and government officials. Input from multiple stakeholders is essential to ensure that technology will be safe, trustworthy, and accessible to as many people as possible. The best time to begin is now.

About the author

Eugenio V Garcia - Tech Diplomat, Brazilian Deputy Consul General in San Francisco. Focal point for Silicon Valley and the Bay Area innovation ecosystem. PhD International Relations. Researcher on AI governance. The views expressed here are those of the author.

Email: egarcia.virtual@gmail.com, Medium, LinkedIn.

Many thanks to Eugenio for his contribution. Upon discussion we realized we shared many points of view and concerns.

Tech Diplomacy: Further Reading by the Author

To dive deeper, see the following:

AI diplomacy: five recommendations to developing countries, published on Medium, 22 October 2022. Available at: https://medium.com/@egarcia.virtual/ai-diplomacy-five-recommendations-to-developing-countries-163e57f85a48

What is tech diplomacy? A very short definition, published on Beyond the Horizon, International Strategic Studies Group (ISSG), Belgium, 14 June 2022. Available at: https://behorizon.org/what-is-tech-diplomacy-a-very-short-definition/

Multilateralism and artificial intelligence: what role for the United Nations? The global politics of artificial intelligence, Taylor & Francis, Chapman & Hall/CRC AI and Robotics Series, 2022. Preprint as SSRN Paper, posted on 19 March 2021. Available at: http://ssrn.com/abstract=3779866

The technological leap of AI and the Global South: deepening asymmetries and the future of international security. Book chapter for Handbook on warfare and artificial intelligence, Edward Elgar Publishers, forthcoming. Link to SSRN paper: http://ssrn.com/abstract=4304540

Living under AI supremacy: Five lessons learned from chess. Published on LinkedIn, 4 August 2021. Available at: https://www.linkedin.com/pulse/living-under-ai-supremacy-five-lessons-learned-from-chess-garcia

AI is too important to be left to technologists alone. In: AI Governance in 2020 - A Year in Review: Observations from 52 Global Experts, Shanghai Institute for Science of Science, June 2021. Available at: https://www.aigovernancereview.com/

The peaceful uses of AI: an emerging principle of international law. The Good AI online platform, News & Analysis, 15 June 2021. Available at: https://thegoodai.co/2021/06/15/the-peaceful-uses-of-ai-an-emerging-principle-of-international-law/

Tué par des algorithmes : Les armes autonomes présentent moins de risques? Beyond the Horizon, International Strategic Studies Group (ISSG), Belgium, 22 April 2021. Available at: https://behorizon.org/tue-par-des-algorithmes-les-armes-autonomes-presentent-moins-de-risques [English version also available: Killed by algorithms: Do autonomous weapons reduce risks? https://behorizon.org/killed-by-algorithms-do-autonomous-weapons-reduce-risks]

The international governance of AI: where is the Global South? The Good AI online platform, News & Analysis, 28 January 2021. Available at: https://thegoodai.co/2021/01/28/the-international-governance-of-ai-where-is-the-global-south

The militarization of artificial intelligence: a wake-up call for the Global South. Paper, SSRN, 16 September 2019. Available at: https://papers.ssrn.com/sol3/papers.cfm?abstract_id=3452323

The author lists his research interests on his academic page as:

International Relations

Artificial Intelligence

United Nations

Diplomacy

New Technologies

Lethal Autonomous Weapons

Peace and Conflicts Studies

World History

Global Governance

History of International Relations

International Security

International organizations

United Nations Security Council

Emerging powers, Global power shift, Rise of BRICS Countries

History of Brazilian Foreign Relations

Brazilian Foreign policy

History of Brazilian Foreign Policy

Political Anthropology

Foreign Policy Analysis

International Studies

UN peacekeeping operations

Emerging Powers

Peacekeeping

Strategic Studies

United Nations Peacekeeping Operations

Security Council, among many others.
