AI in Military Conflict Has Hit Its Own Inflection Point

Israel, Palantir, OpenAI, Scale AI: a bad omen of things to come.

Hey Everyone,

I’m not an effective altruist, but AI risk is real in a number of significant ways. The truth is, one thing has worried me for some time, and my concern has grown in recent years: the impact of artificial intelligence on the military and on geopolitical conflict, and the advantages AI provides in asymmetric warfare, namely AI drones.

There are many sides to this story, and the full extent of it won’t be told for some years. I will be sharing some candid thoughts. When OpenAI quietly removed its ban on military use of its AI tools in 2024, it marked the beginning of an era in which AI supremacy would begin to translate into military advantage. The outcomes may be dire.

Ex-Google CEO Eric Schmidt quietly created a company called White Stork, which plans to build AI-powered attack drones, and Palantir recently landed a new contract. Palantir, which was founded by the libertarian billionaire Peter Thiel with funding from the CIA’s venture capital fund, recently won a $178M Army deal for TITAN artificial intelligence-enabled ground stations. Its shareholders were cheering: as of March 2024, Palantir’s stock (PLTR) was up nearly 58% for the year.

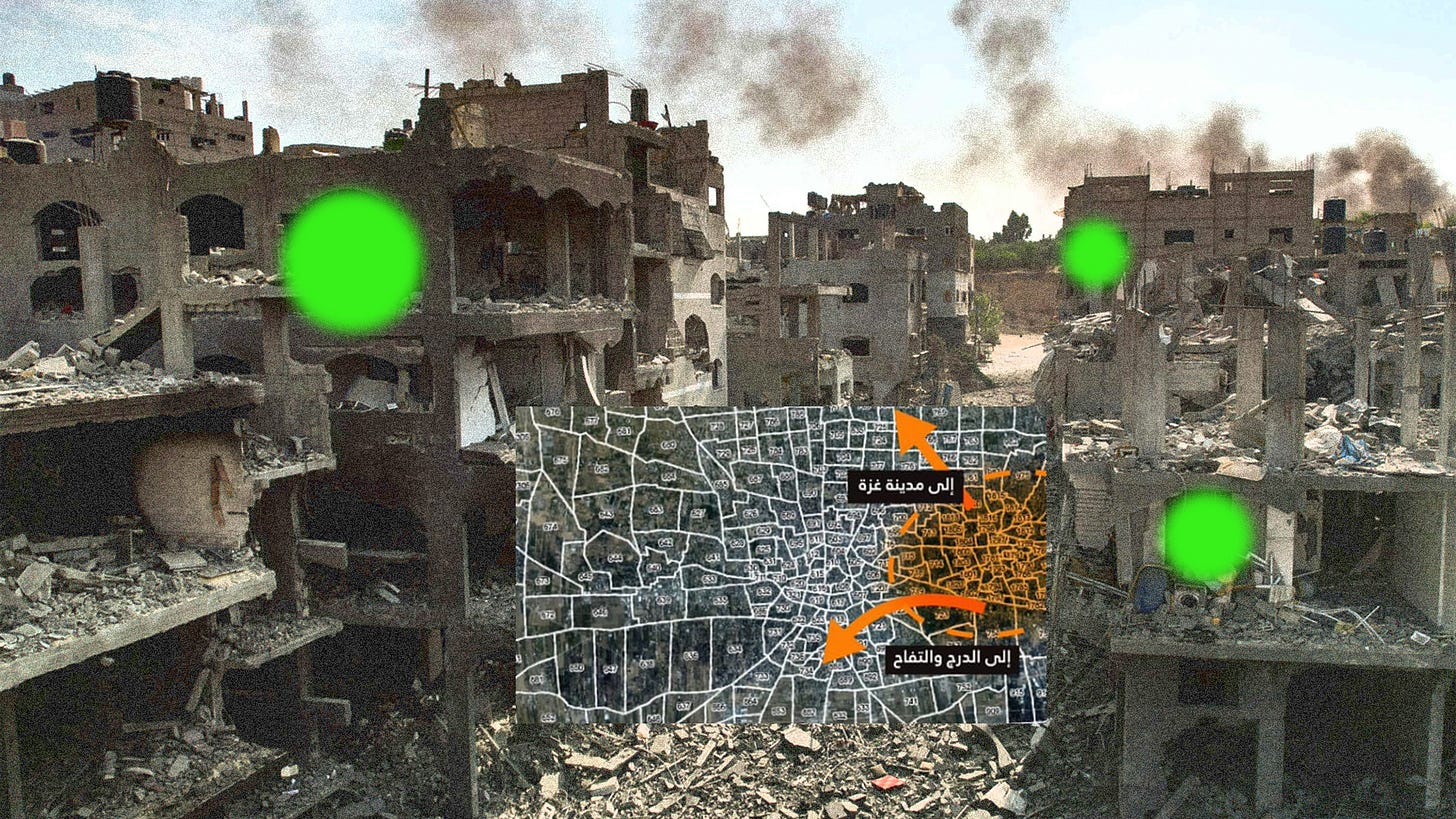

Meanwhile, according to statements by the Israel Defense Forces (IDF), Israel is using AI extensively in its military operations in Gaza. With the massacre of civilians in Gaza, the world is watching, and this is starting to shape how hundreds of millions of people around the world view the AI industry as a whole.

This is not a use of the technology that is good for humanity. Israel is deploying new and sophisticated artificial intelligence technologies at a large scale in its offensive in Gaza. As the civilian death toll mounts, regional human rights groups are asking whether Israel’s AI targeting systems have enough guardrails. This does not seem to be a responsible use of the technology, and it is also affecting how the world views America.

Eric Schmidt had previously told Wired that AI has the potential to revolutionize US military equipment, and it seems he is now doing something about it. Several countries are trying to justify the use of AI in warfare and interstate conflict. In an official release published in October 2023, the British Army explained its approach to AI, citing competitive advantage and operational efficiency as benefits.

The normalization of AI and generative AI in warfare, in conflict scenarios, and in national defense sets a dangerous precedent. US Central Command (CENTCOM) chief technology officer Schuyler Moore has said that American forces are using computer vision algorithms to accurately identify targets for air strikes. People are dying due to these “efficiencies”.

OpenAI has quietly walked back a ban on the military use of ChatGPT and its other artificial intelligence tools, although its policies still state that users should not “use our service to harm yourself or others,” including to “develop or use weapons.” But how much can such a company be trusted if it partners with the Pentagon?

We are entering a period of dangerous geopolitical conflict, a time when technological competition boils over into more nefarious demonstrations of power and AI will be leveraged both for and against national interests. You might be affected.