AI can recognize race from X-rays — and nobody knows how

According to MIT and Harvard scientists.

Explainable artificial intelligence (XAI) is a set of processes and methods that allow human users to comprehend and trust the results and output created by machine learning algorithms.

But what happens when A.I. can do things that researchers don’t understand? This is more common than you might think. Consider the recommendation engines behind YouTube or TikTok, or any A.I. that learns from experience: no one person can explain exactly how it works.

As artificial intelligence becomes more embedded in our human systems, institutions, and decision-making, this could become a real problem.

The case study of bone X-rays is pretty interesting, and weird. This is an older story, originally covered by Wired, but it has been picked up again this week.

In healthcare A.I., I think explainability is a more serious problem than we are admitting in 2022. Let’s try to unpack some of this, at least on a superficial level.

Who are you going to trust: your doctor or their algorithm?

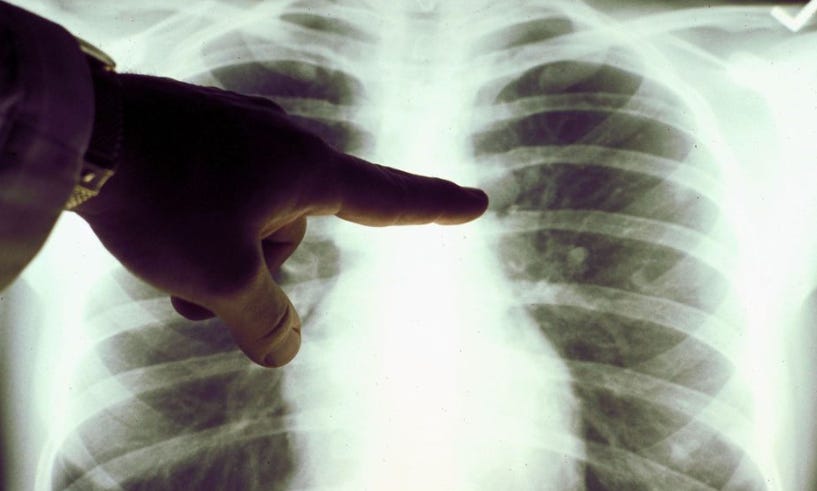

A doctor can’t tell if somebody is Black, Asian, or white, just by looking at their X-rays. But a computer can, according to a surprising new paper by an international team of scientists, including researchers at the Massachusetts Institute of Technology and Harvard Medical School.

I find it surprising that the researchers couldn’t figure out how the A.I. did it. What do you think?
